Cost Control: Don't Blow Your Budget

Dear Engineering,

Please explain the $47,000 charge from 'Anthropic' on this month's AWS bill. We don't have anyone named Anthropic on staff.

Regards,

Finance

Table of Contents

- The Actual Cost Breakdown

- The Real Cost Killers

- Caching: Your Best Friend

- Rate Limits: The Invisible Walls

- Local Models: The Alternative

- Building Cost-Conscious Architecture

- The Awkward Conversation with Your Manager

- The Cost Control Checklist

- The Uncomfortable Truth

- What You’ve Learned

Let me tell you about the time I watched a junior developer burn through $800 in API costs during a single afternoon.

He was building a “smart” search feature. Every keystroke triggered an API call. Every API call used GPT-4. Every response was immediately discarded because the user was still typing. By the time someone noticed the billing alerts, we’d processed roughly 2 million tokens of pure waste.

The feature never shipped. The developer learned an expensive lesson. Finance sent a very pointed email.

This chapter is about not being that developer.

The Actual Cost Breakdown

Before you can control costs, you need to understand where the money goes. It’s not as simple as “API calls cost money.”

Token Costs: The Obvious One

Every API call is priced per token. But here’s what catches people:

Input tokens cost less than output tokens. With Claude, you might pay $3 per million input tokens but $15 per million output tokens, a 5x gap. GPT-4-class models also charge more for output than for input, though the exact ratio varies by model.

This means a chatbot that writes long responses costs way more than one that writes short ones. That verbose, helpful AI personality? It’s expensive.

// This costs more than you think

const expensivePrompt = `

Please provide a comprehensive, detailed explanation of ${topic}.

Include examples, edge cases, and potential pitfalls.

Be thorough and leave nothing out.

`;

// This is cheaper

const cheaperPrompt = `

Explain ${topic} in 2-3 sentences. Be concise.

`;

The difference in a production system handling thousands of requests? Hundreds or thousands of dollars per month.

That “system prompt” you’re sending with every request? It counts as input tokens. If your system prompt is 2,000 tokens and you make 10,000 requests per day, that’s 20 million tokens per day just for the system prompt. At $3/million, that’s $60/day or $1,800/month, before the actual user messages.
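The arithmetic above is easy to script so you can rerun it with your own numbers. This is a minimal sketch: the function names and the 30-day month are assumptions made for illustration, and the prices are the example figures quoted in this chapter, not a current price list.

```typescript
// Example prices in USD per million tokens (figures quoted above; check your provider).
interface Pricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

const EXAMPLE_PRICING: Pricing = { inputPerMillion: 3, outputPerMillion: 15 };

// Cost of a single request, given its token counts.
function estimateRequestCost(inputTokens: number, outputTokens: number, pricing: Pricing): number {
  return (
    (inputTokens / 1_000_000) * pricing.inputPerMillion +
    (outputTokens / 1_000_000) * pricing.outputPerMillion
  );
}

// Fixed overhead of resending the same system prompt with every request.
function systemPromptOverhead(promptTokens: number, requestsPerDay: number, pricing: Pricing) {
  const tokensPerDay = promptTokens * requestsPerDay;
  const dailyCost = (tokensPerDay / 1_000_000) * pricing.inputPerMillion;
  return { tokensPerDay, dailyCost, monthlyCost: dailyCost * 30 }; // assumes a 30-day month
}

const overhead = systemPromptOverhead(2_000, 10_000, EXAMPLE_PRICING);
console.log(overhead); // { tokensPerDay: 20000000, dailyCost: 60, monthlyCost: 1800 }
```

Halving the system prompt halves that line item, which is often the cheapest optimization available.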

Compute Costs: The Sneaky One

If you’re running local models or fine-tuning, you’re paying for compute. GPU time isn’t cheap:

- Cloud GPUs: $1-4 per hour for decent inference hardware

- Fine-tuning: Can run into hundreds or thousands of dollars depending on dataset size

- Self-hosted inference: Cheaper per request, but you’re paying even when idle

The math often surprises people. “I’ll save money by self-hosting!” they say, before realizing their GPU server costs $2,000/month whether they use it or not.

Storage Costs: The Forgotten One

Vector databases, embedding storage, conversation history, cached responses—it all takes space.

- Pinecone: $70/month for the starter tier, scales up fast

- Conversation history: Grows forever if you’re not careful

- Embedding storage: A million embeddings at 1536 dimensions, stored as float32, is about 6 GB

Not huge individually, but it adds up.
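If you want to sanity-check the embedding figure for your own corpus, the calculation is a one-liner. The sketch below assumes float32 values (4 bytes each) and counts only the raw vectors; index structures and metadata come on top. The function name is made up for this example.

```typescript
// Raw storage for dense embeddings, ignoring index structures and metadata.
function embeddingStorageBytes(count: number, dimensions: number, bytesPerValue = 4): number {
  return count * dimensions * bytesPerValue;
}

const rawBytes = embeddingStorageBytes(1_000_000, 1536); // float32 = 4 bytes per value
console.log((rawBytes / 1e9).toFixed(2) + " GB"); // 6.14 GB
```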

The Real Cost Killers

After watching dozens of teams blow their budgets, I’ve identified the patterns that cause 90% of cost problems:

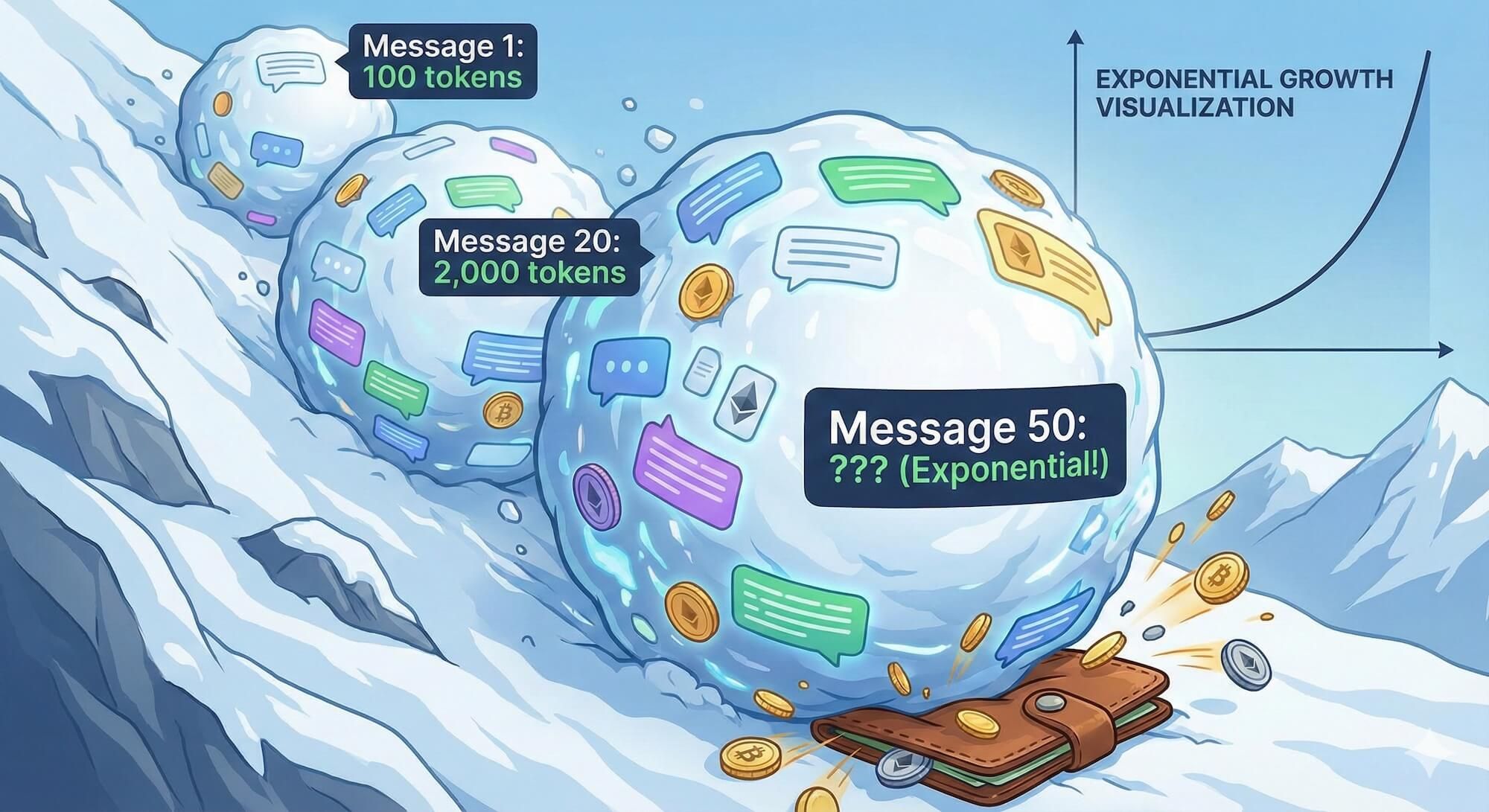

1. The Conversation History Trap

Every chatbot tutorial shows you how to include conversation history in your prompts. Few of them mention that each request then resends everything said so far, so the total tokens you pay for grow quadratically with the length of the conversation.

// The tutorial approach (expensive)

async function chat(userMessage: string, history: Message[]) {

const response = await client.messages.create({

model: "claude-sonnet-4-20250514",

max_tokens: 1024, // required by the Messages API; also caps output spend

messages: [

...history, // This grows forever

{ role: "user", content: userMessage }

]

});

history.push({ role: "user", content: userMessage });

history.push({ role: "assistant", content: response.content });

return response;

}

After 20 messages, you’re sending 20 previous messages with every new request. After 100 messages, you’re sending 100. Each message might be hundreds of tokens.

The fix: Summarize or truncate history. Keep the last N messages. Use a sliding window.
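To get a feel for how much a window saves, you can count the messages resent over a whole conversation. This is a rough model, not a measurement: it assumes every turn adds one user message and one assistant reply, and that messages average 200 tokens. Both the function name and those numbers are assumptions.

```typescript
// Total messages sent as input over a whole conversation.
// Assumes each turn adds one user message and one assistant reply, and that
// every request resends the (optionally windowed) history plus the new message.
function cumulativeMessagesSent(turns: number, maxHistoryMessages = Infinity): number {
  let total = 0;
  for (let turn = 1; turn <= turns; turn++) {
    const historySize = 2 * (turn - 1);
    total += Math.min(historySize, maxHistoryMessages) + 1;
  }
  return total;
}

const TOKENS_PER_MESSAGE = 200; // assumed average
const unboundedTokens = cumulativeMessagesSent(50) * TOKENS_PER_MESSAGE;
const windowedTokens = cumulativeMessagesSent(50, 10) * TOKENS_PER_MESSAGE;
console.log(unboundedTokens, windowedTokens); // 500000 104000
```

Without a cap, the total grows with the square of the number of turns. With a 10-message window it grows linearly, which in this example is roughly a fifth of the input tokens over 50 turns.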

// Better approach

const MAX_HISTORY_MESSAGES = 10;

async function chat(userMessage: string, history: Message[]) {

// Keep only recent history

const recentHistory = history.slice(-MAX_HISTORY_MESSAGES);

const response = await client.messages.create({

model: "claude-sonnet-4-20250514",

messages: [

// Optional: include a summary of older context

...(history.length > MAX_HISTORY_MESSAGES

? [{ role: "system", content: conversationSummary }]

: []),

...recentHistory,

{ role: "user", content: userMessage }

]

});

return response;

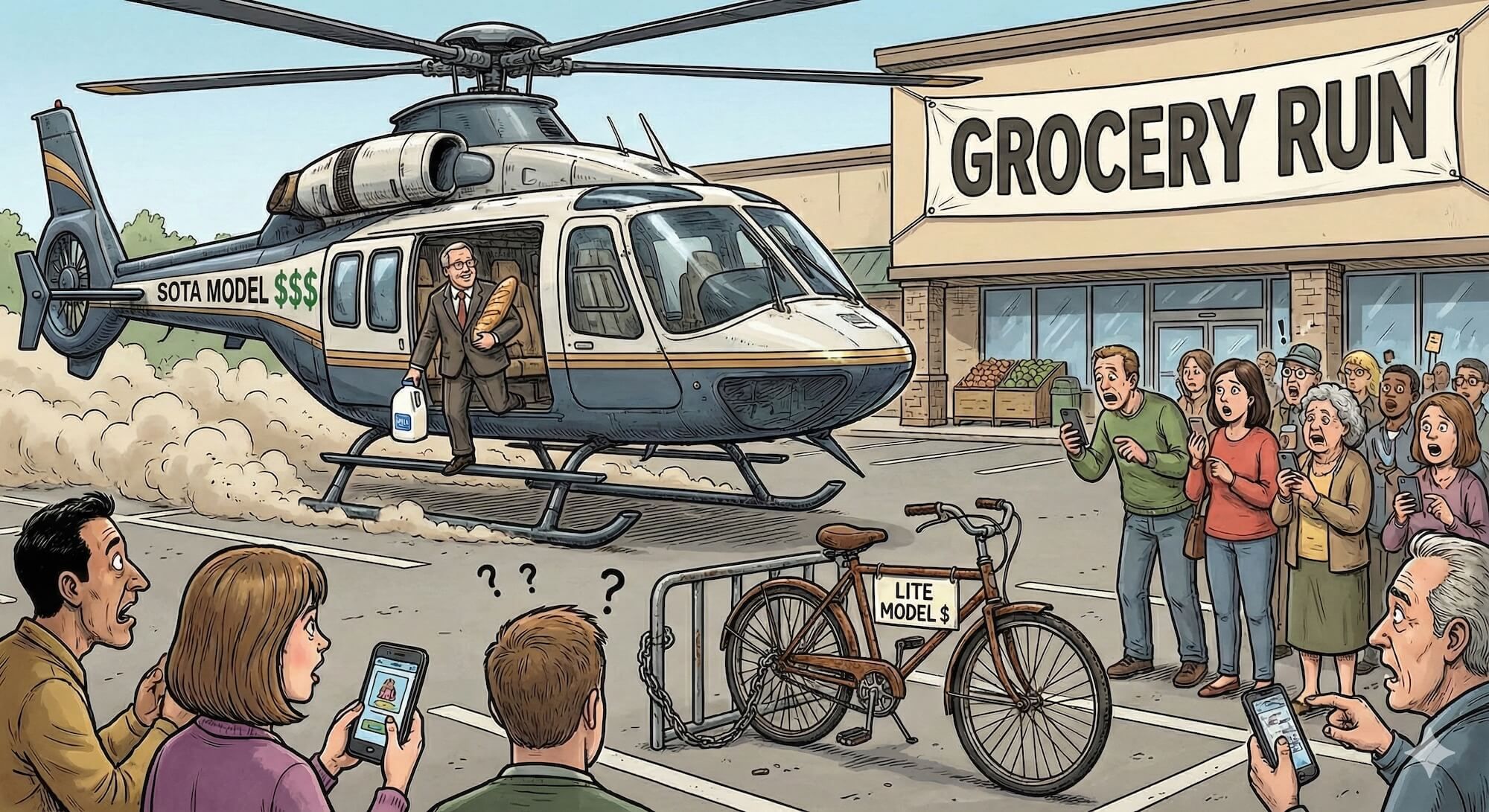

}2. The “Use the Best Model” Mistake

Not every task needs GPT-4 or Claude Opus. Most tasks don’t.

| Task | Model You Need | Model People Use |
|---|---|---|
| Classification | Haiku/GPT-3.5 | Opus/GPT-4 |
| Simple extraction | Haiku/GPT-3.5 | Opus/GPT-4 |
| Summarization | Sonnet/GPT-4o mini | Opus/GPT-4 |
| Complex reasoning | Opus/GPT-4 | Opus/GPT-4 ✓ |

Using Opus for everything is like taking a helicopter to get groceries. It works, but you’re wasting money.

// Route to appropriate model based on task

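type TaskType =

| 'classification' | 'extraction' | 'simple_qa'

| 'summarization' | 'code_review' | 'moderate_reasoning'

| 'complex_analysis' | 'novel_problems' | 'critical_decisions';

function selectModel(task: TaskType): string {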

switch (task) {

case 'classification':

case 'extraction':

case 'simple_qa':

return 'claude-3-5-haiku-20241022'; // Fast and cheap

case 'summarization':

case 'code_review':

case 'moderate_reasoning':

return 'claude-sonnet-4-20250514'; // Good balance

case 'complex_analysis':

case 'novel_problems':

case 'critical_decisions':

return 'claude-opus-4-20250514'; // When you actually need it

default:

return 'claude-sonnet-4-20250514'; // Safe default

}

}

In most applications, 80% of requests can be handled by the cheapest model. Route those correctly and you’ll cut costs dramatically without sacrificing quality where it matters.
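A quick way to see what routing is worth is to compute a blended price for your traffic mix. The sketch below is illustrative only: the per-million input prices and the 80/15/5 split are assumptions, so substitute your provider's current prices and your own traffic data.

```typescript
// Assumed input prices in USD per million tokens, for illustration only.
const INPUT_PRICE_PER_MILLION = { haiku: 0.8, sonnet: 3, opus: 15 } as const;
type Tier = keyof typeof INPUT_PRICE_PER_MILLION;

// Blended price for a traffic mix; the shares should sum to 1.
function blendedPrice(mix: Record<Tier, number>): number {
  return (Object.keys(mix) as Tier[]).reduce(
    (sum, tier) => sum + mix[tier] * INPUT_PRICE_PER_MILLION[tier],
    0,
  );
}

const allOpus = blendedPrice({ haiku: 0, sonnet: 0, opus: 1 });
const routed = blendedPrice({ haiku: 0.8, sonnet: 0.15, opus: 0.05 });
console.log(allOpus.toFixed(2), routed.toFixed(2)); // 15.00 1.84
```

Under those assumptions, routing cuts the blended input price by roughly 88% compared with sending everything to the largest model.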

3. The Retry Storm

When API calls fail, the instinct is to retry. When retries fail, the instinct is to retry harder. This can turn a $0.01 failed request into a $10 incident.
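The jump from a cent to ten dollars is just multiplication. Here is a back-of-the-envelope sketch; the function names and the two-attempts-per-second loop are assumptions, and it only applies to attempts that are actually billed.

```typescript
// Cost of one logical request once retries are counted.
// Assumes every attempt is billed, which holds when the model did the work and
// the failure came afterwards (client timeout, parse error, failed validation).
function costWithRetries(costPerAttempt: number, attempts: number): number {
  return costPerAttempt * attempts;
}

// Attempts made by a loop that retries immediately, with no cap and no backoff.
function attemptsInWindow(attemptsPerSecond: number, windowSeconds: number): number {
  return Math.floor(attemptsPerSecond * windowSeconds);
}

const stormAttempts = attemptsInWindow(2, 500); // a bit over 8 minutes unnoticed
console.log(stormAttempts, costWithRetries(0.01, stormAttempts).toFixed(2)); // 1000 10.00
```

Multiply that by every user or worker stuck in the same loop and the incident scales accordingly.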

// Dangerous: Unlimited retries with no backoff

async function dangerousRequest(prompt: string) {

while (true) {

try {

return await client.messages.create({ /* ... */ });

} catch (e) {

console.log('Retrying...');

// This can run forever, burning money

}

}

}

// Better: Limited retries with exponential backoff

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function saferRequest(prompt: string, maxRetries = 3) {

for (let attempt = 0; attempt < maxRetries; attempt++) {

try {

return await client.messages.create({ /* ... */ });

} catch (e) {

// Out of attempts: surface the error instead of looping

if (attempt === maxRetries - 1) throw e;

// Exponential backoff: 1s after the first failure, 2s after the second

await sleep(Math.pow(2, attempt) * 1000);

}

}

}

4. The Streaming Overhead

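Streaming responses feel more responsive, but streaming is not free. You are billed for the same tokens either way; the overhead is operational: connections held open longer, per-chunk processing, and harder error handling. For short responses, a plain non-streaming call is simpler and can be cheaper to run.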

Use streaming for: Long responses, user-facing chat, real-time applications

Skip streaming for: Backend processing, batch jobs, short responses

Caching: Your Best Friend

The cheapest API call is the one you don’t make. Caching is the single most effective cost reduction strategy.

Response Caching

If the same question gets asked repeatedly, cache the answer:

import { Redis } from 'ioredis';

const redis = new Redis();

const CACHE_TTL = 3600; // 1 hour

async function cachedCompletion(prompt: string): Promise<string> {

const cacheKey = `ai:${hashString(prompt)}`;

// Check cache first

const cached = await redis.get(cacheKey);

if (cached) {

return cached; // Free!

}

// Cache miss - make the API call

const response = await client.messages.create({

model: "claude-sonnet-4-20250514",

max_tokens: 1024, // required by the Messages API

messages: [{ role: "user", content: prompt }]

});

// content is a list of blocks; only text blocks carry a .text field

const first = response.content[0];

const result = first?.type === "text" ? first.text : "";

// Cache for next time

await redis.setex(cacheKey, CACHE_TTL, result);

return result;

}

A well-designed cache can handle 30-70% of requests in typical applications. For FAQ bots or documentation search, hit rates can exceed 90%. At that hit rate, roughly nine out of ten requests never reach the paid API.
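The caching code above calls a `hashString` helper that the chapter never defines. One plausible version, sketched here with Node's built-in `crypto` module, hashes a lightly normalized prompt. The normalization rules and the `cacheKeyFor` helper are assumptions for illustration, not part of any library.

```typescript
import { createHash } from "node:crypto";

// Collapse trivial differences so near-identical prompts share a cache entry.
function normalizePrompt(prompt: string): string {
  return prompt.trim().toLowerCase().replace(/\s+/g, " ");
}

// Hypothetical version of the hashString helper: SHA-256 of the normalized prompt.
function hashString(input: string): string {
  return createHash("sha256").update(normalizePrompt(input)).digest("hex");
}

// Include the model in the key so switching models never serves stale answers.
function cacheKeyFor(model: string, prompt: string): string {
  return `ai:${model}:${hashString(prompt)}`;
}

console.log(hashString("What is  React?") === hashString("what is react?")); // true
```

Lowercasing is only safe when case carries no meaning. Skip that step for prompts that contain code or identifiers.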

Semantic Caching

Exact string matching misses similar questions. “What is React?” and “What’s React?” are the same question but different strings.

Semantic caching uses embeddings to find similar cached responses:

async function semanticCache(query: string): Promise<string | null> {

const queryEmbedding = await getEmbedding(query);

// Find similar cached queries

const similar = await vectorStore.search(queryEmbedding, {

threshold: 0.95, // High similarity required

limit: 1

});

if (similar.length > 0) {

return similar[0].cachedResponse;

}

return null;

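}

More complex to implement, but it catches more cache hits. Keep the threshold high: set it too low and users get a cached answer to a subtly different question.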
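The `vectorStore.search` call above hides the actual comparison. For a small cache you can do it by hand with cosine similarity, as in this sketch. The `CachedEntry` shape and `findCachedResponse` are hypothetical names, and the linear scan stands in for a real vector index.

```typescript
interface CachedEntry {
  embedding: number[];
  cachedResponse: string;
}

// Cosine similarity of two equal-length vectors; 1 means same direction.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Linear scan standing in for vectorStore.search; fine for small caches only.
function findCachedResponse(query: number[], entries: CachedEntry[], threshold = 0.95): string | null {
  let best: CachedEntry | null = null;
  let bestScore = threshold;
  for (const entry of entries) {
    const score = cosineSimilarity(query, entry.embedding);
    if (score >= bestScore) {
      best = entry;
      bestScore = score;
    }
  }
  return best ? best.cachedResponse : null;
}

const demoEntries: CachedEntry[] = [
  { embedding: [1, 0], cachedResponse: "A" },
  { embedding: [0, 1], cachedResponse: "B" },
];
console.log(findCachedResponse([1, 0.1], demoEntries)); // A
```

A query that falls between the two entries, such as `[1, 1]`, scores about 0.71 against both and correctly misses the cache.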

Embedding Caching

Embeddings are effectively deterministic: for a given model, the same text produces the same vector, give or take tiny numerical noise. Cache them:

const embeddingCache = new Map<string, number[]>();

async function getCachedEmbedding(text: string): Promise<number[]> {

const cacheKey = hashString(text);

if (embeddingCache.has(cacheKey)) {

return embeddingCache.get(cacheKey)!;

}

const embedding = await openai.embeddings.create({

model: "text-embedding-3-small",

input: text

});

const vector = embedding.data[0].embedding;

embeddingCache.set(cacheKey, vector);

return vector;

}

Rate Limits: The Invisible Walls

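Every API has rate limits. Hit them and your application breaks. The limits vary by provider and tier, and they change often, so treat the figures below as illustrative and check your provider's current documentation: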

| Provider | Requests/min (free tier) | Requests/min (paid) |
|---|---|---|
| OpenAI | 3 | 500-10,000 |
| Anthropic | 5 | 50-4,000 |
| Google AI | 60 | 360-1,000 |

Handling Rate Limits Gracefully

class RateLimitedClient {

private queue: Array<() => Promise<any>> = [];

private processing = false;

private requestsThisMinute = 0;

private readonly maxRequestsPerMinute = 50;

async request<T>(fn: () => Promise<T>): Promise<T> {

return new Promise((resolve, reject) => {

this.queue.push(async () => {

try {

const result = await fn();

resolve(result);

} catch (e) {

reject(e);

}

});

this.processQueue();

});

}

private windowStart = Date.now();

private async processQueue() {

if (this.processing) return;

this.processing = true;

try {

while (this.queue.length > 0) {

const elapsed = Date.now() - this.windowStart;

if (elapsed >= 60_000) {

// A minute has passed: start a fresh window

this.windowStart = Date.now();

this.requestsThisMinute = 0;

} else if (this.requestsThisMinute >= this.maxRequestsPerMinute) {

// Limit reached: wait out the rest of this window, then re-check

await new Promise((resolve) => setTimeout(resolve, 60_000 - elapsed));

continue;

}

const task = this.queue.shift()!;

this.requestsThisMinute++;

await task();

}

} finally {

this.processing = false;

}

}

}

Local Models: The Alternative

Cloud APIs aren’t your only option. Local models trade per-token fees for hardware and operations costs:

When Local Makes Sense

- High volume, simple tasks: Thousands of classification requests per day

- Privacy requirements: Data can’t leave your servers

- Predictable costs: Fixed hardware cost vs variable API costs

- Latency sensitive: No network round trip

When Local Doesn’t Make Sense

- Occasional use: The GPU sits idle most of the time

- Quality critical: Local models are generally worse than frontier APIs

- Rapid iteration: Switching models means new deployments

- Peak handling: Can’t scale up for sudden demand

The Math

Let’s do the actual calculation:

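Cloud API (Claude Sonnet, at the $3 input / $15 output per million tokens quoted earlier):

- 1 million tokens/day = $3-$15/day depending on the input/output mix = roughly $90-$450/month

Self-hosted (Llama 3.1 70B):

- GPU server: ~$2,000/month (or $30K+ to buy)

- Electricity: ~$200/month

- Maintenance: Your time

- Total: ~$2,200/month (for as many tokens as the hardware can push through)

At those prices, $2,200/month buys roughly 5 million output-heavy or 24 million input-heavy tokens per day from the API, so the crossover sits somewhere in that range. Below it, cloud is cheaper. Above it, local might make sense, if you can accept the quality trade-off.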
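The break-even point is very sensitive to the per-token price you plug in, so it is worth computing for your own mix. The sketch below uses the $2,200/month self-hosting figure from above and the $3 and $15 per-million prices quoted earlier in the chapter. The 3:1 input-to-output blend, which works out to $6 per million, is an assumption, as is the function name.

```typescript
// Daily token volume at which a fixed monthly hosting bill equals API spend.
function breakEvenTokensPerDay(
  monthlyFixedCostUsd: number,
  apiPricePerMillionUsd: number,
  daysPerMonth = 30,
): number {
  const dailyFixedCost = monthlyFixedCostUsd / daysPerMonth;
  return (dailyFixedCost / apiPricePerMillionUsd) * 1_000_000;
}

const SELF_HOSTED_MONTHLY = 2_200; // GPU server plus electricity, engineering time excluded

// Prices per million tokens: input-only, an assumed 3:1 blend, output-only.
for (const price of [3, 6, 15]) {
  const millions = breakEvenTokensPerDay(SELF_HOSTED_MONTHLY, price) / 1_000_000;
  console.log(`$${price}/M tokens -> break-even near ${millions.toFixed(1)}M tokens/day`);
}
// $3/M tokens -> break-even near 24.4M tokens/day
// $6/M tokens -> break-even near 12.2M tokens/day
// $15/M tokens -> break-even near 4.9M tokens/day
```

Add the cost of your engineering hours to the monthly figure and the break-even volume moves higher still.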

Everyone remembers the GPU cost. They forget: setup time (days to weeks), maintenance, monitoring, failover, updates, and the opportunity cost of not working on your actual product. Factor in at least 10-20 hours per month of engineering time.

Building Cost-Conscious Architecture

The best cost control is built into your architecture from the start.

1. Budget Per Request

Set a maximum cost per request and enforce it:

interface CostTracker {

inputTokens: number;

outputTokens: number;

estimatedCost: number;

}

const MAX_COST_PER_REQUEST = 0.10; // 10 cents

async function budgetedRequest(prompt: string): Promise<string> {

const estimatedInputTokens = estimateTokens(prompt);

const estimatedCost = calculateCost(estimatedInputTokens, 500); // Assume 500 output

if (estimatedCost > MAX_COST_PER_REQUEST) {

// Truncate prompt or use cheaper model

return fallbackRequest(prompt);

}

return makeRequest(prompt);

}

2. User-Level Budgets

Don’t let one user bankrupt you:

class UserBudget {

private usage = new Map<string, number>();

private readonly dailyLimit = 1.00; // $1 per user per day

async checkBudget(userId: string, estimatedCost: number): Promise<boolean> {

const today = new Date().toISOString().split('T')[0];

const key = `${userId}:${today}`;

const currentUsage = this.usage.get(key) || 0;

if (currentUsage + estimatedCost > this.dailyLimit) {

return false; // Budget exceeded

}

return true;

}

recordUsage(userId: string, cost: number) {

const today = new Date().toISOString().split('T')[0];

const key = `${userId}:${today}`;

const current = this.usage.get(key) || 0;

this.usage.set(key, current + cost);

}

}

3. Monitoring and Alerts

You can’t control what you don’t measure:

// Track every request

async function trackedRequest(prompt: string, metadata: RequestMetadata) {

const startTime = Date.now();

const response = await client.messages.create({

model: metadata.model,

max_tokens: 1024, // required by the Messages API

messages: [{ role: "user", content: prompt }]

});

// Log for analysis

await analytics.track('ai_request', {

model: metadata.model,

inputTokens: response.usage.input_tokens,

outputTokens: response.usage.output_tokens,

latencyMs: Date.now() - startTime,

userId: metadata.userId,

feature: metadata.feature,

estimatedCost: calculateCost(response.usage.input_tokens, response.usage.output_tokens)

});

// Alert if costs spike

await checkCostAlerts(metadata.feature);

return response;

}

Set up alerts for:

- Daily spend exceeding threshold

- Single request exceeding cost limit

- Unusual usage patterns

- Error rate spikes (which might indicate retry storms)
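The first alert on that list needs very little code. Here is a minimal in-memory sketch: the class name, the UTC-day bucketing, and the callback are all assumptions, and a real deployment would keep the totals in shared storage rather than in one process.

```typescript
type AlertHandler = (message: string) => void;

// Tracks spend per UTC day and fires one alert when the threshold is crossed.
class DailySpendMonitor {
  private totals = new Map<string, number>();
  private alerted = new Set<string>();
  private readonly dailyThresholdUsd: number;
  private readonly onAlert: AlertHandler;

  constructor(dailyThresholdUsd: number, onAlert: AlertHandler) {
    this.dailyThresholdUsd = dailyThresholdUsd;
    this.onAlert = onAlert;
  }

  // Adds a request's cost to the day's total and returns the new total.
  record(costUsd: number, now: Date = new Date()): number {
    const day = now.toISOString().slice(0, 10); // e.g. "2025-01-31"
    const total = (this.totals.get(day) ?? 0) + costUsd;
    this.totals.set(day, total);
    if (total > this.dailyThresholdUsd && !this.alerted.has(day)) {
      this.alerted.add(day); // alert once per day, not once per request
      this.onAlert(`AI spend for ${day} is $${total.toFixed(2)}, above the $${this.dailyThresholdUsd} threshold`);
    }
    return total;
  }
}

const alerts: string[] = [];
const monitor = new DailySpendMonitor(100, (message) => alerts.push(message));
const day1 = new Date("2025-01-31T10:00:00Z");
monitor.record(60, day1);
monitor.record(50, day1); // crosses $100, so one alert fires
monitor.record(10, day1); // already alerted today
console.log(alerts.length); // 1
```

Calling `record` from the tracking function with each request's estimated cost is enough to hear about a spike the same day rather than at invoice time.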

The Awkward Conversation with Your Manager

At some point, you’ll need to explain AI costs to someone who doesn’t understand tokens. Here’s how:

Frame It Correctly

Don’t say: “We spent $3,000 on API calls.”

Do say: “We processed 50,000 customer requests at $0.06 each, which is 90% cheaper than the human support cost.”

Have the Numbers Ready

Before the conversation:

- Cost per user action

- Comparison to alternatives (human labor, other services)

- Trend over time (hopefully downward)

- ROI if applicable

Propose Solutions, Not Problems

“We’re spending too much” is a problem.

“We’re spending $X, but by implementing caching and model routing, we can reduce this to $Y within 2 weeks” is a solution.

Remember the email at the start of this chapter? That was a real team I consulted with. The actual conversation went like this:

Finance: “Why did you spend $47,000 on AI?”

Team: “We processed 2 million support tickets automatically.”

Finance: “That would have cost us $400,000 in support staff time.”

Finance: “…carry on.”

Context matters. Always have the comparison ready.

The Cost Control Checklist

Before you deploy any AI feature:

- Model selection: Are you using the cheapest model that works?

- Caching: Are you caching responses that don’t need to be fresh?

- History management: Is conversation history bounded?

- Retry limits: Do failed requests have limited retries?

- Budget limits: Are there per-request and per-user limits?

- Monitoring: Can you see costs in real-time?

- Alerts: Will you know before the bill arrives?

- Fallbacks: What happens when you hit rate limits?

The Uncomfortable Truth

Here’s what nobody wants to admit: AI features are expensive to run. The “demo” cost is always way lower than the production cost. That cool prototype that costs $5/day will cost $500/day at scale.

The companies telling you AI is cheap are either:

- Not actually using it at scale

- Subsidizing it as a loss leader

- Lying

Budget accordingly. Build cost control into your architecture. Monitor obsessively. And always, always have an answer ready for when Finance sends that email.

Because they will send that email.

What You’ve Learned

After this chapter, you should be able to:

- Understand where AI costs actually come from

- Implement caching to reduce API calls

- Route requests to appropriate model tiers

- Build budget limits into your architecture

- Have productive conversations about AI costs with non-technical stakeholders

- Not be the developer who burns through $800 in an afternoon

The junior developer from the opening story? He’s now a tech lead who reviews every AI feature for cost implications before it ships. Some lessons you only need to learn once.

But learning from other people’s expensive mistakes is cheaper.