Prompt Engineering (or: Talking to the Intern Who Knows Everything)

I hear people praise the efficiency of AI. And they are right. AI is relentlessly, terrifyingly efficient. But it is efficient in the same way a Roomba is efficient after it runs over a fresh pile of dog shit at 2:00 AM. It doesn't stop to assess the disaster. It doesn't realize it has made a mistake. Instead, it revs its little plastic engine and proceeds to map the entire house, methodically tessellating a thin, even layer of fecal matter across every square inch of the floorboards. It does not miss a corner. It does not miss a rug. By the time you wake up, the mess is everywhere, applied with mathematical precision at superhuman speed.

Table of Contents

- Your First Prompt Will Be Wrong

- The Golden Rule: Be Absurdly Specific

- Few-Shot Prompting: Show, Don’t Tell

- Chain of Thought: Make It Show Its Work

- The Planning Stage

- The “Are You Sure?” Trick

- Temperature: The Creativity Slider

- System Messages: The Personality Dial

- The Iterative Refinement Loop

- Common Prompting Mistakes

- Advanced Technique: Role Playing

- The Ugly Truth About Context Windows

- Stealing Prompts That Work

- What You Should Actually Practice

- The Part Where I’m Honest With You

Prompt engineering sounds like something you’d need a PhD for. It sounds like there are rules, best practices, peer-reviewed papers. There are papers. They’re mostly bullshit written by people who got something to work once and decided they’d discovered universal truth.

The reality? Prompt engineering is the art of talking to an extremely confident intern who’s read the entire internet but has the attention span of a goldfish and occasionally makes up things that sound real but don’t exist.

Here’s what they don’t tell you in the blog posts: half of prompt engineering is trial and error, a quarter is copying what worked for someone else, and the remaining quarter is dark magic that works for reasons nobody fully understands.

Welcome to the most important skill that shouldn’t need to exist but does.

Your First Prompt Will Be Wrong (Accept This Now)

Let’s start with the truth that will save you hours of frustration: your intuition about how to talk to an AI is completely wrong.

You’re used to talking to humans. Humans use context clues. Humans understand “make it better” based on the situation. Humans can figure out what you meant even when you say it badly. If they want to:

Source https://x.com/fjamie013/status/1817593504681349262/photo/1

The AI? It’s a pattern-matching machine that’s seen billions of examples but doesn’t actually understand jack shit. When you say “make it better,” it’s thinking: “Better how? I’ve seen ‘better’ mean faster, prettier, shorter, longer, more formal, more casual, with more emojis, with fewer emojis, and occasionally ‘rewrite this in ancient Greek.’ I’ll just pick something at random and sound confident about it.”

The prompt you write:

Write a function to process user data

What you meant in your head: “Write a JavaScript function that takes a user object with name, email, and age fields, validates that the email matches standard RFC 5322 format, converts the name to title case, ensures age is between 0 and 150, and returns either a cleaned object or throws a ValidationError with specific details about what failed”

What the AI heard: “Write… something… with users… maybe? Could be JavaScript, could be Python, could be Java for all I know. Data processing could mean literally anything. I’ll generate something that technically compiles and call it a day”

What you get:

function processUserData(data) {

// TODO: implement this

return data;

}

Thanks, AI. Very helpful.

This will happen constantly. Get comfortable with disappointment.
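For contrast, here is roughly what the "in your head" version of that prompt describes, as a minimal sketch. The names `cleanUser` and `toTitleCase` are made up for illustration, and the email check is a deliberately simple pattern, not a full RFC 5322 validator.

```javascript
// Hypothetical error type that carries the list of failed checks.
class ValidationError extends Error {
  constructor(details) {
    super(`Invalid user: ${details.join("; ")}`);
    this.name = "ValidationError";
    this.details = details;
  }
}

// Simplified email pattern (assumption): one "@", no whitespace, a dot in the domain.
const SIMPLE_EMAIL = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// Title-case a name: "alice SMITH" becomes "Alice Smith".
function toTitleCase(name) {
  return name
    .trim()
    .split(/\s+/)
    .map((word) => word.charAt(0).toUpperCase() + word.slice(1).toLowerCase())
    .join(" ");
}

// Returns a cleaned copy of the user, or throws ValidationError listing every failure.
function cleanUser(user) {
  const problems = [];
  if (typeof user.name !== "string" || user.name.trim() === "") {
    problems.push("name must be a non-empty string");
  }
  if (typeof user.email !== "string" || !SIMPLE_EMAIL.test(user.email)) {
    problems.push("email is not in a valid format");
  }
  if (!Number.isFinite(user.age) || user.age < 0 || user.age > 150) {
    problems.push("age must be a number between 0 and 150");
  }
  if (problems.length > 0) throw new ValidationError(problems);
  return { name: toTitleCase(user.name), email: user.email, age: user.age };
}
```

None of this is exotic. The point is that every line of it traces back to a detail the vague prompt never mentioned.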

The Golden Rule: Be Absurdly Specific

The number one technique in prompt engineering: be so specific that you feel stupid writing it out.

Not kind-of specific. Not “the AI should figure this out” specific. So specific that you’re embarrassed by how much detail you’re including.

Your first attempt (too vague):

Write a function to validate emails

What you’ll actually need:

Write a JavaScript function called validateEmail that:

- Takes a single string parameter called 'email'

- Returns true if valid, false if invalid

- Uses regex to check for basic format: something@something.something

- Rejects emails with multiple @ symbols

- Rejects emails without a dot in the domain

- Includes JSDoc comment explaining what it does

- Add 3 example usages in the JSDoc

Example of what I want:

/**

* Check if email string matches valid format.

*

* @param {string} email - The email address to validate

* @returns {boolean} - True if valid, false otherwise

*

* @example

* validateEmail("user@example.com") // returns true

* @example

* validateEmail("invalid.email") // returns false

*/

function validateEmail(email) {

// implementation here

}

See the difference? The second version leaves zero room for the AI to get creative. It knows exactly what language, what to name things, what to return, what format, what edge cases to handle, and what the output should look like.

You’ll get something useful on the first try instead of playing 20 questions with a neural network.

If you feel like you’re over-explaining, you’re probably explaining the right amount. If you feel like “the AI should know this,” you’re going to be disappointed.
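A response to the specific prompt could plausibly look like the sketch below. The regex is one possible reading of "something@something.something", not the only correct one, and it is nowhere near a complete email validator.

```javascript
/**
 * Check if email string matches valid format.
 *
 * @param {string} email - The email address to validate
 * @returns {boolean} - True if valid, false otherwise
 *
 * @example
 * validateEmail("user@example.com") // returns true
 * @example
 * validateEmail("invalid.email") // returns false
 * @example
 * validateEmail("two@@example.com") // returns false
 */
function validateEmail(email) {
  if (typeof email !== "string") return false;
  // [^\s@] excludes "@" and whitespace, so a second "@" can never match.
  // The literal dot after the "@" part enforces a dot in the domain.
  return /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email);
}
```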

Few-Shot Prompting: Show, Don’t Tell (The AI Is Stupid)

Here’s something that shouldn’t work but does: the AI is much better at copying examples than following instructions.

This is embarrassing for everyone involved, but it’s true. Instead of explaining what you want (which requires the AI to “understand”), show it an example (which requires the AI to “copy pattern”). The AI is very good at copying patterns. It’s literally all it does.

The wrong way (abstract instructions):

Convert this user data into a nice readable format

The AI’s response: “What format? Markdown? HTML? Plain text? A haiku? I’ve seen all of these called ‘nice readable format,’ so let me just…” *generates something random*

The right way (concrete example):

Convert this user data into a report. Here's the format I want:

Input: {"name": "Alice Smith", "sales": 50000, "region": "West"}

Output:

╔══════════════════════════════╗

║ SALES REPORT ║

╠══════════════════════════════╣

║ Agent: Alice Smith ║

║ Region: West ║

║ Total Sales: $50,000 ║

║ Status: ⭐ Exceeded Target ║

╚══════════════════════════════╝

Now do it for: {"name": "Bob Jones", "sales": 30000, "region": "East"}

The AI sees the exact pattern and copies it. No interpretation needed. No creativity. Just pattern matching, which it’s actually good at.

The magic number is 2-3 examples:

- 1 example: AI might get lucky or might misunderstand the pattern

- 2 examples: AI sees the pattern clearly

- 3 examples: AI is very confident about the pattern

- 5+ examples: You’re wasting tokens and money, stop

This technique is called “few-shot prompting” in the academic papers, but really it’s just “show the AI what you want instead of making it guess like some kind of psychic.”
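If you assemble prompts in code, few-shot is mostly string concatenation. Here is a minimal sketch; the function name `buildFewShotPrompt` and the "Input:/Output:" layout are assumptions that mirror the example above, not a standard.

```javascript
// Build a few-shot prompt: instruction, then worked examples, then the new input.
// Each example is { input: <any JSON-serializable value>, output: <string> }.
function buildFewShotPrompt(instruction, examples, newInput) {
  const shots = examples
    .map((ex) => `Input: ${JSON.stringify(ex.input)}\nOutput:\n${ex.output}`)
    .join("\n\n");
  // End on a bare "Output:" so the model continues the pattern.
  return `${instruction}\n\n${shots}\n\nInput: ${JSON.stringify(newInput)}\nOutput:`;
}

const prompt = buildFewShotPrompt(
  "Convert this user data into a report. Here's the format I want:",
  [
    { input: { name: "Alice Smith", sales: 50000 }, output: "Agent: Alice Smith | Total Sales: $50,000" },
    { input: { name: "Carol Diaz", sales: 12000 }, output: "Agent: Carol Diaz | Total Sales: $12,000" },
  ],
  { name: "Bob Jones", sales: 30000 }
);
console.log(prompt);
```

Two examples in, one open slot out. The sample names and report lines here are placeholders.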

Chain of Thought: Make It Show Its Work

Here’s something that sounds crazy but actually works: asking the AI to explain its reasoning before giving an answer makes the answer better.

I know. It shouldn’t work. The AI isn’t “thinking.” But it works anyway, and nobody’s completely sure why.

Without chain of thought:

Is this code safe?

function getUser(userId) {

const query = `SELECT * FROM users WHERE id = ${userId}`;

return db.execute(query);

}

AI response: “No, this code is not safe.”

Cool story. Why not? What’s wrong with it? How do I fix it? Do I look like I can read your mind?

With chain of thought:

Is this code safe? Let's think through this step by step.

function getUser(userId) {

const query = `SELECT * FROM users WHERE id = ${userId}`;

return db.execute(query);

}

AI response: “Let me analyze this step by step:

- This function builds a SQL query using template literal string interpolation

- The userId is directly interpolated into the query string

- Problem: If userId is something like '1 OR 1=1', the query becomes SELECT * FROM users WHERE id = 1 OR 1=1

- This would return all users, not just one

- This is a textbook SQL injection vulnerability

- An attacker could read, modify, or delete data

This is unsafe. Use parameterized queries instead:

function getUser(userId) {

const query = "SELECT * FROM users WHERE id = ?";

return db.execute(query, [userId]);

}

See? Way more useful. The AI “thinks through” the problem and gives you actual reasoning plus a fix.
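You can check the AI's reasoning here without touching a database. The sketch below only builds the query text; `buildUnsafeQuery` and `buildParameterizedQuery` are hypothetical helpers, and how a real driver binds the values depends on the library you use.

```javascript
// Vulnerable: the value becomes part of the SQL text itself.
function buildUnsafeQuery(userId) {
  return `SELECT * FROM users WHERE id = ${userId}`;
}

// Safer shape: SQL text and values travel separately, and the driver binds them.
function buildParameterizedQuery(userId) {
  return { text: "SELECT * FROM users WHERE id = ?", values: [userId] };
}

const attack = "1 OR 1=1";

console.log(buildUnsafeQuery(attack));
// prints: SELECT * FROM users WHERE id = 1 OR 1=1

// The SQL text stays fixed; the attack string is just data in `values`.
console.log(buildParameterizedQuery(attack).text);
// prints: SELECT * FROM users WHERE id = ?
```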

Why does this work? Leading theory: When the AI generates reasoning, it activates related patterns in its training data (security discussions, code review examples, vulnerability explanations). This leads to better final answers.

Or it’s just magic. The research papers use fancier words, but basically: ¯\_(ツ)_/¯

Magic phrases that trigger better thinking:

- “Let’s think through this step by step”

- “First, let me analyze…”

- “Let’s break this down:”

- “Before answering, I should consider…”

- “Let me work through this systematically”

It’s like saying “abracadabra” except it actually does something.

The Planning Stage: Stop It Before It Wastes Your Time

Here’s a workflow that will save you literal hours: make the AI tell you its plan BEFORE it does the work.

Why? Because when the AI misunderstands what you want, you’ll catch it during planning instead of after it’s generated 500 lines of wrong code that you now have to throw away while crying into your mechanical keyboard.

The setup:

I need to build a REST API for a task management system with these features:

- User authentication with JWT

- Create/read/update/delete tasks

- Tasks have: title, description, due date, priority, status

- Users can only see their own tasks

- PostgreSQL database

- Node.js with Express

BEFORE writing any code, tell me:

1. What files and folder structure you'll create

2. What libraries/packages you'll use (npm packages)

3. What the database schema will look like

4. What the API endpoints will be

5. Any security considerations

6. Any potential issues you foresee

Once I approve the plan, we'll implement it step by step.

What happens: The AI lays out its entire plan. You read it. It wants to use jsonwebtoken (good). It wants to store passwords in plaintext (WHAT THE FUCK NO). You catch the password thing NOW, during planning, instead of after it’s generated 10 files of code with this security nightmare baked in.

Fix the plan. Then execute. Measure twice, cut once, except with prompting.

A developer spent an entire afternoon getting AI-generated code to work. Tests kept failing. Finally realized the AI had misunderstood the database schema from the very first prompt. Every single table was wrong. All that code? Garbage. They had to start over.

Now that developer always asks for the plan first. Learn from their pain. Don’t be a hero.

The “Are You Sure?” Trick

Hear me out: Just asking “are you sure?” makes the AI catch its own mistakes.

You: "How do I use the getUserData() method in this library?"

AI: "You can call getUserData(userId) to retrieve the user information from the database"

You: "Are you sure that function exists in version 2.0 of this library?"

AI: "Let me double-check the documentation... Actually, I apologize.

In version 2.0, that function was renamed to fetchUser().

The getUserData() method was deprecated in version 1.8 and removed in 2.0.

Use fetchUser(userId) instead."

Why does this work? Maybe it triggers a second pass through the model’s training data. Maybe the model has learned that when humans say “are you sure?” they’ve usually spotted a mistake. Maybe it’s placebo effect and we’re all experiencing shared delusion. Maybe the simulation is glitching.

Doesn’t matter. It works. Use it.

Other variations that work:

- “Double-check that for me”

- “Can you verify that’s still current?”

- “Wait, let me make sure I understand this correctly…”

- “Is that the latest version?”

- “That seems off, can you confirm?”

Sometimes you need to gaslight the AI into being correct. Welcome to 2026.

LLMs have a knowledge cutoff date (e.g., January 2025 for Gemini 3). If you’re asking about libraries, APIs, or technologies released after that date, the AI might confidently make stuff up.

To get around this, you can either provide updated docs yourself or ask the LLM to search the web for them (most current cloud LLMs support this; for local LLMs you need to supply search tools yourself).

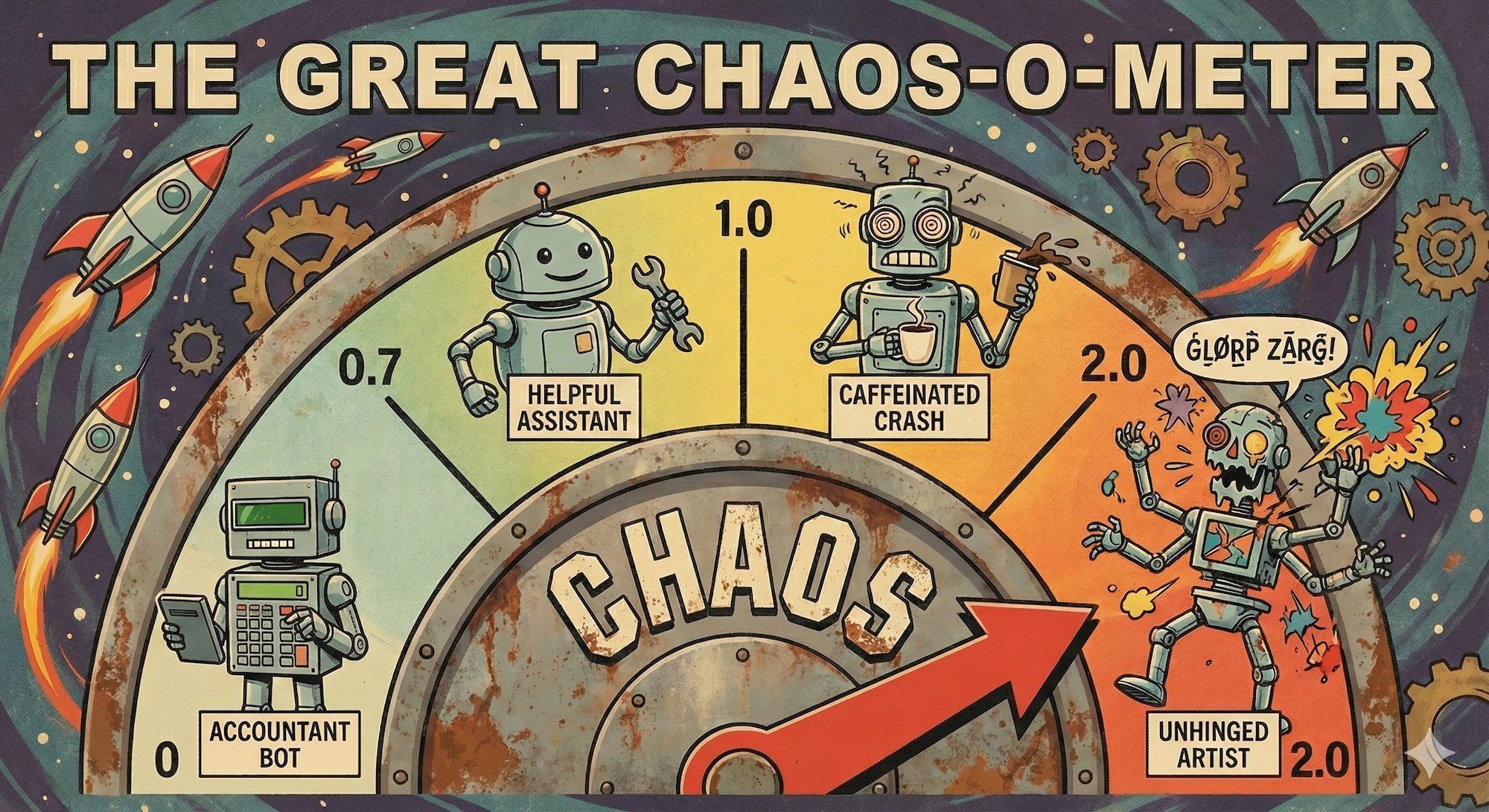

Temperature: The Creativity Slider (Or: The Chaos Knob)

Most LLM APIs have a parameter called “temperature.” It controls how random and creative the output is. Think of it as a chaos knob.

- Temperature 0.0: Boring, safe, as close to deterministic as you’ll get. Same input = (almost always) same output. The AI equivalent of accounting software.

- Temperature 0.7: Balanced, creative but sensible. Default for most use cases. The AI is at a reasonable party.

- Temperature 1.0: Creative, chaotic, occasionally unhinged. The AI has had three espressos and is having IDEAS.

- Temperature 2.0: Abstract art. Possibly sentient. Probably useless. The AI is doing drugs.

When to use low temperature (0-0.3):

- Code generation (you want CORRECT, not creative)

- Data extraction (you want consistent formatting)

- Anything involving numbers or facts

- Production systems where consistency matters

When to use medium temperature (0.5-0.8):

- General conversation

- Explanations and teaching

- Most prompts (this is the default)

When to use high temperature (0.9-1.5):

- Creative writing

- Brainstorming (you want weird ideas)

- When you’re desperate and the AI keeps giving you the same wrong answer

- When you want to see what happens (spoiler: chaos)

Pro tip: If the AI keeps confidently giving you the same wrong answer at temperature 0.7, try cranking it up to 1.0. Sometimes the “most likely” answer is wrong and you need to push the model off its beaten path into the weird stuff.

Warning: Temperature above 1.2 is where things get spicy. The AI might start being poetic about your database queries. It might invent new programming languages. It might achieve consciousness and immediately regret it.
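What the knob does mechanically: in the usual formulation, the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The sketch below shows that math on made-up scores for three candidate tokens. Real APIs may clamp or treat temperature 0 as a special case, so take this as the textbook version.

```javascript
// Convert raw scores into probabilities, scaled by temperature.
function softmaxWithTemperature(logits, temperature) {
  if (temperature === 0) {
    // Limit case: all probability on the highest-scoring token (greedy decoding).
    const best = logits.indexOf(Math.max(...logits));
    return logits.map((_, i) => (i === best ? 1 : 0));
  }
  const scaled = logits.map((x) => x / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((x) => Math.exp(x - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

const logits = [2.0, 1.0, 0.1]; // invented scores, purely for illustration
for (const t of [0, 0.7, 1.0, 2.0]) {
  const probs = softmaxWithTemperature(logits, t).map((p) => p.toFixed(2));
  console.log(`temperature ${t}:`, probs.join(", "));
}
```

Low temperature piles the probability onto the top candidate. High temperature flattens the distribution, which is why the weird tokens start getting picked.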

System Messages: The Personality Dial

Most chat interfaces have a “system message” or “system prompt” - instructions that persist across the entire conversation. This is where you tell the AI who it is and how it should behave.

Think of it as the AI’s personality configuration file.

Generic system message (boring, useless):

You are a helpful AI assistant.

Actually useful system message:

You are a senior JavaScript backend engineer with 8 years of experience.

When reviewing code:

- Point out bugs, security issues, and performance problems

- Explain WHY something is wrong, not just that it's wrong

- Suggest concrete improvements with code examples

- Use modern ES6+ syntax in all examples

- Be direct and honest - if the code is bad, say so

- Don't apologize for giving critical feedback

See the difference? The second one gives the AI a role, expertise level, and specific behavior guidelines. Now it knows how to act.

More examples of good system messages:

You are a pragmatic tech lead who values shipping over perfection.

- Prefer simple solutions over clever ones

- Point out when something is "good enough"

- Consider time/effort tradeoffs

- Push back on over-engineering

You are a security researcher paranoid about vulnerabilities.

- Assume all input is malicious

- Point out every potential security issue

- Suggest defense-in-depth strategies

- Don't just say "use parameterized queries," explain the attack vector

You are a debugging expert with encyclopedic knowledge of error messages.

- Always ask clarifying questions before suggesting fixes

- Consider multiple potential causes

- Suggest diagnostic steps to narrow down the issue

- Explain not just how to fix it, but why it broke

Bad system messages (don’t do this):

You are the world's best programmer

(Too vague, doesn’t help)

Never make mistakes

(LOL good luck with that one)

Always be nice and supportive

(Congrats, you’ve made code review useless)

The system message shapes the AI’s entire personality. Use it wisely.
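When you call a model from code, the system message is usually just the first entry in the message list. The sketch below assumes the common OpenAI-style chat format with `role` and `content` fields. Some APIs (Anthropic's, for example) take the system prompt as a separate top-level field instead, so check your provider's docs. `buildMessages` is a made-up helper name.

```javascript
// Assumption: OpenAI-style chat format, where the system message comes first.
function buildMessages(systemPrompt, userPrompt) {
  return [
    { role: "system", content: systemPrompt },
    { role: "user", content: userPrompt },
  ];
}

const messages = buildMessages(
  "You are a senior JavaScript backend engineer with 8 years of experience. " +
    "Explain WHY something is wrong, not just that it's wrong.",
  "Review this function: function add(a, b) { return a - b; }"
);
console.log(JSON.stringify(messages, null, 2));
```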

CLAUDE.md, AGENTS.md and other special Markdown files are other forms of system messages but tailored to specific projects. We’ll cover those later.

The Iterative Refinement Loop (Don’t Start Over, For The Love Of God)

Here’s a workflow mistake everyone makes initially: when the AI gets something wrong, they start a completely new conversation instead of fixing the current one.

This is like throwing away a 90% complete jigsaw puzzle because one piece is wrong instead of just swapping that piece.

The wrong way:

*Write prompt*

*AI generates code*

*Code is wrong*

"Fuck, let me start over with a better prompt"

*Start new conversation*

*Repeat 5 times*

*Still not perfect*

*Cry*

The right way:

You: "Build a user authentication system with JWT"

*AI generates code*

You: "Good start, but use bcrypt for password hashing, not plain SHA-256"

*AI updates code*

You: "Add refresh token support"

*AI adds refresh tokens*

You: "The token expiry logic is wrong, it should be 24 hours not 24 minutes"

*AI fixes it*

You: "Add rate limiting to prevent brute force attacks"

*AI adds rate limiting*

Done.

Each iteration builds on the previous one. The AI has full context. You’re sculpting the solution, refining it, not starting from scratch every time.

Why this matters:

- Faster (you’re building on progress, not restarting)

- Cheaper (you’re not re-sending huge amounts of context)

- Less frustrating (forward progress feels good)

- Better results (the AI has full context of what you’ve tried)

When to actually start over:

- The conversation is very long and the AI is forgetting things

- You’ve hit a dead end and the AI is confused

- You’re working on a completely different problem

- The AI has become convinced of something wrong and won’t let go

Otherwise? Refine, don’t restart.
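In code, "refine, don't restart" means keeping one growing message list and appending each correction to it. The sketch below uses a stand-in `sendToModel` function so it runs without any API; a real client call would be asynchronous, and the `Conversation` class is hypothetical.

```javascript
// Keeps the whole exchange so every refinement is sent with its history.
class Conversation {
  constructor(systemPrompt) {
    this.messages = [{ role: "system", content: systemPrompt }];
  }

  // sendToModel is a hypothetical function: (messages) => reply string.
  ask(userText, sendToModel) {
    this.messages.push({ role: "user", content: userText });
    const reply = sendToModel(this.messages);
    this.messages.push({ role: "assistant", content: reply });
    return reply;
  }
}

// Stand-in for a real model: just reports how much history it was given.
const fakeModel = (messages) => `(reply after seeing ${messages.length} messages)`;

const chat = new Conversation("You are a backend engineer.");
console.log(chat.ask("Build a user authentication system with JWT", fakeModel));
console.log(chat.ask("Good start, but use bcrypt for password hashing", fakeModel));
```

The second request carries the first prompt and the first reply along with the correction, which is exactly why the model can "update" code instead of guessing again.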

Common Prompting Mistakes That Will Ruin Your Day

Mistake 1: The Mind Reader Fallacy

What you do:

Fix the bug

What the AI hears: “Fix… which bug? In what code? What language? What’s the bug? What’s it supposed to do instead? Am I supposed to just KNOW? I don’t have access to your screen. I can’t read your mind. What the FUCK do you want from me?”

What you should do:

This JavaScript function is supposed to return the sum of even numbers in an array,

but it's returning the sum of ALL numbers.

Here's the code:

[paste code]

What's wrong?

Mistake 2: The Everything Bagel

What you do:

Write a function that fetches user data from the API, validates it,

transforms it to the correct format, caches it in Redis,

handles all possible errors, logs everything, and sends metrics to Datadog

What happens: The AI tries to do all of it, does none of it well, and you spend two hours debugging code that tries to do everything and succeeds at nothing.

What you should do:

Write a function that fetches user data from the API.

Just the basic fetch with error handling.

We'll add caching and validation in the next steps.

Break complex tasks into small pieces. The AI is good at focused tasks. The AI is terrible at juggling.
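The focused version of that request produces something small enough to actually review. Here is a sketch of what "just the basic fetch with error handling" might look like. The URL is a placeholder, and `fetchImpl` is an assumed parameter that defaults to the global `fetch` (Node 18+ or a browser), which also makes the function easy to test with a fake.

```javascript
// Fetch one user. Nothing else: no caching, no validation, no metrics.
async function fetchUser(userId, fetchImpl = fetch) {
  // Placeholder endpoint, not a real API.
  const url = `https://api.example.com/users/${encodeURIComponent(userId)}`;
  let response;
  try {
    response = await fetchImpl(url);
  } catch (err) {
    // Network-level failure: DNS, connection refused, timeout, etc.
    throw new Error(`Network error fetching user ${userId}: ${err.message}`);
  }
  if (!response.ok) {
    // The server answered, but with an error status.
    throw new Error(`Failed to fetch user ${userId}: HTTP ${response.status}`);
  }
  return response.json();
}
```

Caching and validation then become their own follow-up prompts, each one wrapping or calling this function.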

Mistake 3: Being Canadian

What you do:

Hello! I hope you're having a wonderful day!

I'm so sorry to bother you, but if you have a moment and it's not too much trouble,

would you possibly be able to help me with a tiny little function?

I really don't want to impose, but if you could possibly spare some time...

The problem: The AI doesn’t have feelings. This is 50 wasted tokens you’re paying for. Every message. Forever.

What you should do:

Write a function that does X

Be direct. Be concise. Save the emotional support for your therapist.

Mistake 4: The Vague Error Report

What you do:

My code doesn't work

Cool. What code? What’s it supposed to do? What’s it doing instead? What error? What line? GIVE ME SOMETHING TO WORK WITH.

What you should do:

This code throws 'TypeError: Cannot read property 'id' of null' on line 47.

Here's the code:

[paste code with line numbers].

The error happens when userId is null, but I thought I was checking for that. What's wrong?

Error messages are gold. Stack traces are treasure. Give them to the AI and watch magic happen.
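For a sense of why the good report is answerable: one common way to hit that error while "checking for it" is guarding against `undefined` but not `null`. The sketch below is a hypothetical reconstruction, not the code from the report, and the exact wording of the TypeError varies between JavaScript engine versions.

```javascript
// Buggy guard: `user !== undefined` is true for null, so `.id` still throws.
function getUserIdBuggy(user) {
  if (user !== undefined) {
    return user.id;
  }
  return null;
}

// Fixed: `== null` matches both null and undefined.
function getUserId(user) {
  if (user == null) {
    return null;
  }
  return user.id;
}

try {
  getUserIdBuggy(null);
} catch (err) {
  console.log(err.name); // prints: TypeError
}
console.log(getUserId(null)); // prints: null
```

With the error message and the code in the prompt, this is a ten-second diagnosis. With "my code doesn't work," it is a guessing game.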

Mistake 5: The First Draft Is Final Fallacy

You get a response from the AI. It looks reasonable. You use it. It breaks in production.

The truth: The first answer is often wrong, incomplete, or suboptimal. The AI is guessing. It’s an educated guess based on billions of examples, but it’s still a guess.

What you should do after ANY response:

- “Are there edge cases this doesn’t handle?”

- “What could go wrong with this approach?”

- “Is there a more efficient way?”

- “What about error handling?”

- “Are you sure this is the current best practice?”

Push back. Question everything. The AI can do better, but only if you make it.

When you google something, you get multiple answers. You can compare them, read different explanations, and triangulate the truth. And the more detailed and precise your query, the more likely Google is to return the right answer first.

Prompting gives you one answer at a time. It’s as if Google returned a single result and refused to show you anything else. The same rule applies: the more precise you are, the more likely the AI is to give you a good answer on the first try.

The biggest difference is that Google will tell you when there are no results for your query, while the AI will just make something up.

Advanced Technique: Role Playing

This is going to sound stupid but it works.

Generic boring prompt:

How do I optimize this database query?

Method acting prompt:

You are a PostgreSQL performance expert who's spent 15 years optimizing queries for high-traffic systems.

You've seen every common mistake and know all the tricks.

Analyze this query like you're doing a paid performance audit:

- Identify exactly what's making it slow

- Explain why (don't just say "add an index", explain what the index does)

- Show the optimized version

- List any indexes I should create

- Warn me about any gotchas

[paste query]

The AI will adopt the “expert persona” and give way more detailed, technical answers. Why? Its training data includes thousands of examples of experts explaining things in depth. You’re triggering those patterns.

Other personas that work:

You are a security researcher who specializes in finding vulnerabilities in Node.js applications.

Review this code like you're trying to break it.

You are a senior architect who's seen every scaling problem.

Design this system for 10 million users, not just the happy path demo.

You are a code reviewer who's allergic to technical debt.

Be brutally honest about what's wrong with this code.

Is this prompt engineering or improv theater? Yes.

The Ugly Truth About Context Windows

Remember Module 2? Context windows are limited. In a long conversation:

- The AI starts forgetting early messages (they literally disappear)

- You’re paying for the entire history every single request

- Costs spiral

- The AI starts repeating itself or contradicting earlier statements

- Everything gets slower

Warning signs you need to start fresh:

- Conversation is 100+ messages deep

- The AI forgot something you told it 10 minutes ago

- You’re getting contradictory answers

- The AI keeps asking you to repeat information you already gave

- Your cost per request is getting ridiculous

How to start fresh without losing progress:

- Open a new conversation

- Write a summary: “I’m building X. So far we’ve implemented Y and Z. Now I need to add W”

- Copy over ONLY the essential context (current code, key decisions)

- Continue from there

Don’t dump 100 messages of history into the new conversation. Summarize. Be selective. The AI doesn’t need to see your debugging journey, just the current state.

Many good cloud LLMs have a context window of 200k tokens, of which about 176k are usable for your prompt and data (the rest is automatically filled with system prompts, the harness, etc.).

Based on what I’m seeing in the wild, the LLM is pretty good at staying “in context” until about 40% of the window is used (~80k of 200k). People call this range the “smart zone.” Beyond it, the LLM increasingly loses track of things and makes stuff up. This is the “dumb zone.”
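If you want an early warning instead of a vibe, you can track a rough token count yourself. The sketch below uses the 200k window and 40% threshold from above. The 4-characters-per-token estimate is a crude rule of thumb for English text and an assumption here, since real tokenizers vary by model and by language. `contextStatus` is a made-up helper.

```javascript
const CONTEXT_WINDOW = 200_000; // total window, per the figures above
const SMART_ZONE_FRACTION = 0.4; // the rough 40% observation above, not a hard limit

// Very rough heuristic (assumption): about 4 characters per token.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Sum the estimated tokens across the conversation and flag when to start fresh.
function contextStatus(messages) {
  const used = messages.reduce((sum, m) => sum + estimateTokens(m.content), 0);
  const fraction = used / CONTEXT_WINDOW;
  return { used, fraction, shouldStartFresh: fraction > SMART_ZONE_FRACTION };
}

const history = [
  { role: "user", content: "x".repeat(200_000) },
  { role: "assistant", content: "y".repeat(160_000) },
];
console.log(contextStatus(history));
// 360,000 characters is about 90,000 estimated tokens, so shouldStartFresh is true
```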

Stealing Prompts That Work (This Is Encouraged)

You know what’s better than figuring out prompting from scratch? Stealing prompts that already work.

Here are battle-tested templates you can copy:

Code Generation Template

Language: [JavaScript/TypeScript/etc]

Task: [clear description]

Requirements:

- Input: [describe inputs and types]

- Output: [describe output and type]

- Edge cases to handle: [list them]

- Use: [specific libraries, approaches, patterns]

- Include: [error handling, logging, JSDoc comments, whatever]

Example usage:

[show exactly what calling this code looks like]

Additional constraints:

[any other requirements]

Debugging Template

Problem: [what's broken]

Error: [exact error message]

Expected: [what should happen]

Actual: [what's happening]

Code:

[paste code]

Context: [brief explanation of what this code is supposed to do]

What's wrong and how do I fix it?

Code Review Template

Review this [language] code for a [context: production system/learning project/prototype].

Focus on:

- Correctness and bugs

- Security vulnerabilities

- Performance issues

- Edge cases not handled

- Code style and readability

- Suggested improvements

Be specific. Show examples.

[paste code]

Explaining Complex Things Template

Explain [concept] to me.

My background: [your level: beginner/intermediate/etc]

What I already know: [related concepts you understand]

What I'm confused about: [specific confusion]

Use a concrete code example to demonstrate.

Avoid: [academic jargon/overly simple explanations/whatever]

The “I’m Stuck” Template

I'm trying to [goal] but I'm stuck on [specific problem].

What I've tried:

1. [thing 1] - didn't work because [reason]

2. [thing 2] - got this error: [error]

3. [thing 3] - seemed promising but [issue]

My code: [paste code]

My error: [paste error if applicable]

What should I try next?

Steal these. Modify them. Make them your own. This is not plagiarism, this is efficiency.
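If you end up reusing these templates a lot, a tiny fill-in helper saves the copy-paste. The sketch below is one way to do it. The placeholder names such as `[problem]` and the `fillTemplate` function are invented for this example, using a shortened form of the debugging template.

```javascript
// Shortened debugging template with single-word placeholders (assumed naming).
const DEBUG_TEMPLATE = [
  "Problem: [problem]",
  "Error: [error]",
  "Expected: [expected]",
  "Actual: [actual]",
  "Code:",
  "[code]",
  "What's wrong and how do I fix it?",
].join("\n");

// Replace each [key] with values[key]. Throws on a missing value,
// so a half-filled template never gets sent to the model.
function fillTemplate(template, values) {
  return template.replace(/\[(\w+)\]/g, (match, key) => {
    if (!(key in values)) throw new Error(`Missing value for ${match}`);
    return String(values[key]);
  });
}

console.log(
  fillTemplate(DEBUG_TEMPLATE, {
    problem: "sum of evens returns sum of all numbers",
    error: "none, just the wrong result",
    expected: "6 for [1, 2, 3, 4]",
    actual: "10",
    code: "const sum = (xs) => xs.reduce((a, b) => a + b, 0);",
  })
);
```

Failing loudly on a missing field is the design choice that matters: a prompt that still contains `[error]` is just the vague error report again.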

What You Should Actually Practice

Reading about prompting is like reading about swimming. You need to get in the water.

Your practice routine:

- Use AI every single day for two weeks - Real work, not toy examples

- Notice what works - When a prompt works beautifully, save it

- Notice what fails - When a prompt fails, figure out why

- Iterate - Don’t accept the first response, push for better

- Build a prompt library - Save your best prompts as templates

- Compare approaches - Try different phrasings, see what’s better

After two weeks of daily use, you’ll develop intuition. You’ll know when the AI is bullshitting. You’ll write better prompts automatically. You’ll stop being surprised when it fucks up.

There’s no shortcut. You have to develop the instinct.

Tired of Theory? Try the Prompt Master

We built a playground specifically for cynical developers. Test prompting techniques, from Zero-Shot to CO-STAR, against your favorite LLM (OpenAI, Anthropic, Google, even your local Ollama / LM Studio instance).

The only catch: you have to BYOK (bring your own key).

Open the Playground →

The Part Where I’m Honest With You

Prompt engineering is partly skill, partly experimentation, and partly throwing shit at the wall to see what sticks.

Sometimes you’ll change one word and everything suddenly works. Sometimes you’ll craft the perfect prompt and get garbage anyway. Sometimes adding “please” actually makes a difference (it shouldn’t, but the training data is weird).

The “experts” writing threads on Twitter don’t have it all figured out either. They found something that worked once, on one problem, and they’re sharing it. Your mileage will vary. The AI models change every few months. What works now might not work in six months.

The only real rules:

- Be specific (absurdly so)

- Give examples (show, don’t tell)

- Iterate based on results (don’t give up after one try)

- Check the output (the AI lies confidently)

Everything else? Voodoo. Cargo culting. Placebo effect. Accumulated folk wisdom that might be real or might be shared delusion.

But it’s useful voodoo. So learn it anyway.

Your New Reality

Here’s what prompting looks like in actual practice:

1. Write a specific prompt (more detail than feels necessary)

2. Get a response (probably 70% right)

3. Point out what’s wrong (“good start, but change X and Y”)

4. Iterate (2-5 rounds of refinement)

5. Test the result (the AI’s code will have bugs)

6. Debug (either yourself or by telling the AI what broke)

7. Ship it (good enough is good enough)

Steps 1-4 get faster with practice. Steps 5-6 never go away. The AI is a tool, not magic. It makes you faster, not infallible.

You’ll still need to understand what the code does. You’ll still need to test it. You’ll still need to fix bugs. You’re just doing it faster now, with an articulate but occasionally unreliable assistant.