Managing AI Expectations: Dealing With Coworkers

According to this LinkedIn article, AI can now write entire applications. Why is our project taking six months?

Table of Contents

- The AI Believer Spectrum

- Setting Expectations Without Crushing Dreams

- The “Quick AI Prototype” Trap

- When Your Junior Dev Becomes “An AI Expert”

- Protecting Your Codebase

- The Uncomfortable Conversation About Code Review

- Building AI Literacy (Without Being Preachy)

- Organizational AI Maturity

- The Politics of AI Projects

- The Long Game

- What You’ve Learned

The technology isn’t the hard part.

After everything in this course—the prompting, the RAG systems, the agents, the cost control—the hardest part of working with AI is working with humans who have opinions about AI.

Your manager read an article and now wants “AI everything.” Your junior developer thinks Copilot makes them a 10x engineer. Your product manager saw a demo at a conference and promised customers something impossible. Your designer wants to “leverage generative AI” without knowing what that means.

This chapter is about surviving these people without losing your job or your mind.

The AI Believer Spectrum

Not everyone is the same kind of wrong about AI. Understanding the species helps you handle them.

The Breathless Enthusiast

Characteristics: Shares AI articles daily. Uses phrases like “paradigm shift” and “unprecedented opportunity.” Has strong opinions about tools they’ve never used. Probably has “AI” somewhere in their LinkedIn headline now.

What they say: “Did you see that demo? AI can basically do our jobs now!”

What they mean: “I’m anxious about being left behind and I’m overcompensating.”

How to handle: Redirect enthusiasm toward specific, achievable projects. “That’s interesting—want to help me prototype something small to test it?” Either they’ll get hands-on experience (good) or their enthusiasm will find another outlet (also fine).

When someone’s excited about AI capabilities, channel that energy into a small proof-of-concept. Two things happen: either it works and you have a useful tool, or it doesn’t and they now understand the limitations firsthand. Both outcomes are better than endless theoretical arguments.

The Aggressive Skeptic

Characteristics: Dismisses all AI as hype. Calls it “fancy autocomplete” (okay, that one’s fair). Refuses to try tools because they “don’t need them.” Has been burned by previous hype cycles.

What they say: “It’s just pattern matching. Real engineers don’t need this.”

What they mean: “I’ve invested decades in my skills and I’m scared they’re being devalued.”

How to handle: Don’t argue about capabilities. Instead, show specific, practical wins. “Look, it wrote all these test stubs in 30 seconds.” Focus on the tedious work AI handles well. Make it about saving time on the boring stuff, not replacing expertise.

The Executive Who Read an Article

Characteristics: Doesn’t understand implementation details. Has heard “AI” mentioned in board meetings. Wants to add “AI-powered” to everything for market positioning. Confuses demos with production systems.

What they say: “Our competitors are using AI. We need to be using AI. Yesterday.”

What they mean: “I need something to tell the board about our AI strategy.”

How to handle: Translate their goals into achievable technical projects. “If the goal is competitive positioning, here’s a feature we could realistically ship in six weeks that would give us something real to talk about.” Give them something concrete to point to.

The Overconfident Junior

Characteristics: Uses AI constantly. Ships fast. Doesn’t review output carefully. Has introduced several subtle bugs. Thinks velocity equals value.

What they say: “I finished that feature in two hours! Copilot is amazing.”

What they mean: “I wrote code quickly and didn’t realize I should have reviewed it more carefully.”

How to handle: This one’s tricky. See the dedicated section below.

The Paralyzed Middle Manager

Characteristics: Knows they should “do something with AI” but doesn’t know what. Afraid of making the wrong decision. Keeps scheduling meetings to discuss AI strategy without actually deciding anything.

What they say: “We should form a working group to evaluate our AI approach.”

What they mean: “I don’t understand this well enough to make a decision and I’m hoping someone else will.”

How to handle: Make small decisions for them. “I’d like to try using Copilot on the authentication refactor—low risk, clear scope, I’ll report back in two weeks.” Remove the need for big strategic decisions by showing results from small tactical ones.

Setting Expectations Without Crushing Dreams

When someone comes to you with an unrealistic AI idea, you have options:

Option 1: The Brutal Truth

“That won’t work. The model can’t do that. We don’t have the data. The cost would be insane.”

Result: They think you’re negative and go around you.

Option 2: The Cowardly Agreement

“Sure, we can try that!”

Result: You waste weeks building something that doesn’t work.

Option 3: The Constructive Redirect

“That’s an interesting goal. Let me show you what’s actually achievable and we can work toward it.”

Result: You maintain credibility while keeping the conversation productive.

The Redirect Framework

When someone proposes something unrealistic:

- Acknowledge the goal: “So you want customers to be able to ask questions about their account in natural language. That makes sense.”

- Identify the real constraint: “The challenge is accuracy. If we get answers wrong about billing or account status, that’s worse than no feature at all.”

- Propose a scoped version: “What if we started with just the top 10 most common questions? We can build a retrieval system that’s highly accurate for those, then expand from there.”

- Set measurable success criteria: “We could measure success by comparing accuracy to our current FAQ. If we’re at 95%+ accuracy on those questions, we expand. If not, we iterate.”

You’ve preserved their idea, added realistic constraints, and given yourself room to succeed or fail gracefully.
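The success criterion in the last step can be turned into a small evaluation script. What follows is a minimal sketch, not a definitive implementation: it assumes a hand-labeled set of question and expected-answer pairs, a hypothetical `answer_question` callable standing in for whatever retrieval system you build, and a simple case-insensitive exact match as the correctness test. Only the 95% threshold comes from the example conversation.

```python
from typing import Callable

# Threshold taken from the example conversation; everything else is assumed.
ACCURACY_THRESHOLD = 0.95


def evaluate(
    answer_question: Callable[[str], str],
    labeled_cases: list[tuple[str, str]],
) -> float:
    """Return the fraction of labeled questions answered correctly."""
    if not labeled_cases:
        raise ValueError("need at least one labeled case")
    correct = sum(
        1
        for question, expected in labeled_cases
        if answer_question(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(labeled_cases)


def next_step(accuracy: float) -> str:
    """Apply the agreed rule: expand at or above the threshold, else iterate."""
    return "expand" if accuracy >= ACCURACY_THRESHOLD else "iterate"


# Hypothetical stand-in for the real system: a lookup table with one gap.
_canned = {
    "how do i reset my password?": "Use the reset link on the login page.",
    "where is my invoice?": "Invoices are under Billing.",
}


def fake_system(question: str) -> str:
    return _canned.get(question.lower(), "I don't know.")


cases = [
    ("How do I reset my password?", "Use the reset link on the login page."),
    ("Where is my invoice?", "Invoices are under Billing."),
    ("How do I close my account?", "Contact support to close your account."),
]

accuracy = evaluate(fake_system, cases)
print(f"accuracy={accuracy:.2f} -> {next_step(accuracy)}")
```

Exact matching is the crudest possible grader and will undercount correct free-form answers; the point of the sketch is that the expand-or-iterate decision is written down before anyone sees the numbers.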

Most AI project failures could have been prevented by having an honest conversation early: “This will probably work 80% of the time. Is that good enough, or do you need 99%?” Many stakeholders don’t realize they’re asking for 99% until you point out the gap.

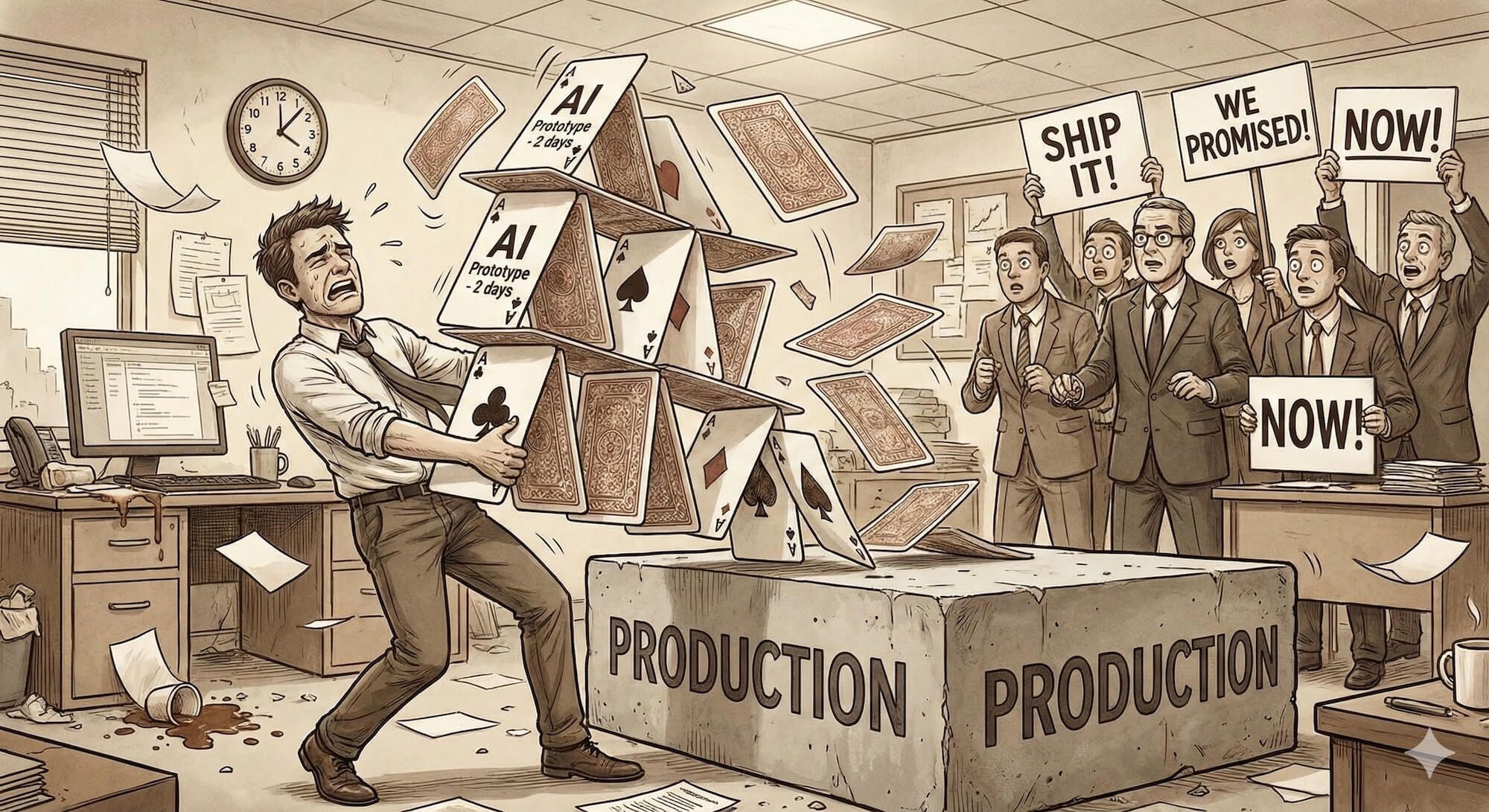

The “Quick AI Prototype” Trap

This is the most dangerous phrase in AI product development:

“Let’s just throw together a quick AI prototype and see how it goes.”

The prototype takes two days. It works on the demo data. Everyone gets excited. The prototype becomes the product. The product goes to production. Production has different data. Production has edge cases. Production has scale. Production breaks.

Then you spend six months fixing the “prototype.”

How to Avoid the Trap

When someone proposes a quick AI prototype:

Set expectations upfront: “I can build a demo in a few days, but that’s just to validate the concept. Production will take 6-8 weeks with proper error handling, testing, and monitoring.”

Document the gap: Keep a list of everything the prototype doesn’t handle. When the prototype “works,” show the list. “Before this goes to production, we need to handle: authentication, rate limiting, error messages, audit logging, cost controls, and graceful degradation.”

Resist the pressure: “The demo looked great, why can’t we just ship it?” Because the demo was designed to look great. Production is designed to work.

The Demo-to-Production Multiplier

A rough rule of thumb: production-ready takes 5-10x longer than demo-ready.

| What | Demo | Production |
|---|---|---|
| Happy path | ✓ | ✓ |
| Error handling | Maybe | ✓ |
| Edge cases | No | ✓ |
| Security review | No | ✓ |
| Monitoring | No | ✓ |
| Documentation | No | ✓ |
| Testing | Manual | Automated |
| Cost controls | No | ✓ |
| Graceful degradation | No | ✓ |

At that ratio, a 2-day demo becomes 10-20 working days of production work, which is a 2-4 week feature. Communicate this early and often.
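The arithmetic behind the rule of thumb is simple enough to keep in a helper you can point at during planning. This is a rough sketch: the 5-10x range is the rule of thumb from this section, not a measured constant, and the five-day working week and the function name are assumptions.

```python
WORKING_DAYS_PER_WEEK = 5      # assumption
MULTIPLIER_RANGE = (5, 10)     # rule of thumb from this section, not a measurement


def production_estimate_weeks(demo_days: float) -> tuple[float, float]:
    """Convert demo effort in working days to a (low, high) range in weeks."""
    low, high = MULTIPLIER_RANGE
    return (
        demo_days * low / WORKING_DAYS_PER_WEEK,
        demo_days * high / WORKING_DAYS_PER_WEEK,
    )


low_weeks, high_weeks = production_estimate_weeks(2)
print(f"2-day demo -> {low_weeks:.0f}-{high_weeks:.0f} weeks in production")
```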

When Your Junior Dev Becomes “An AI Expert”

This is a specific and common problem. Your junior developer uses Copilot constantly. They ship fast. Their code… kind of works. They’ve started calling themselves an AI expert on LinkedIn.

Meanwhile, you’re finding bugs in their code weekly. They don’t understand what the AI generated. When something breaks, they can’t debug it because they didn’t write it.

The Problem

AI tools make it easy to generate code without understanding it. For experienced developers, this is manageable: we know enough to review the output and catch problems. Junior developers don’t yet know what they don’t know.

The AI writes a SQL query with an injection vulnerability. The junior doesn’t recognize it. The AI writes a recursive function with no base case for certain inputs. The junior doesn’t catch it. The AI uses a deprecated library. The junior has no idea it’s deprecated.

This isn’t the junior’s fault. They’re using the tools they’ve been given. But it creates a dangerous gap between apparent productivity and actual quality.
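To make the first of those failure modes concrete, here is a minimal sketch of the kind of query that looks fine in a quick test and is still injectable, next to the parameterized version a reviewer should insist on. The `users` table, the function names, and the in-memory SQLite database are all assumptions made for illustration.

```python
import sqlite3

# Throwaway in-memory database with a hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, is_admin INTEGER)"
)
conn.executemany(
    "INSERT INTO users (name, is_admin) VALUES (?, ?)",
    [("alice", 0), ("root", 1)],
)


def find_user_unsafe(name: str) -> list[tuple]:
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str) -> list[tuple]:
    # Parameterized: the value is passed separately from the SQL text.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
print(find_user_unsafe(malicious))  # every row in the table comes back
print(find_user_safe(malicious))    # no rows: the input is treated as a name
```

Both functions return the same result for an ordinary name like `alice`, which is exactly why the unsafe version survives a happy-path test.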

How to Handle It

1. Increase code review scrutiny (temporarily)

Not forever, but until they develop the critical eye to review their own AI-assisted code. Frame it as “let’s review together” not “I don’t trust you.”

2. Require explanations

“Walk me through this function. Why did you choose this approach?” If they can’t explain code they submitted, that’s a learning opportunity.

3. Pair on debugging

When AI-generated code breaks, don’t fix it for them. Debug together. “Why do you think this is failing? What would you check first?” Build the skills that AI assistance skipped.

4. Assign some AI-free work

Not as punishment, but as learning. “For this component, I want you to write it without Copilot, then compare your version to what AI suggests.” Builds foundational understanding.

A junior developer who leans entirely on AI for three years will not develop the same skills as one who had to struggle through problems. This isn’t anti-AI hysteria—it’s basic learning science. Struggle builds understanding. Consider this when mentoring.

5. Normalize asking questions

Make it safe to say “I don’t understand this code I generated.” The worst outcome is a junior who’s too embarrassed to admit they don’t understand what they shipped.

Protecting Your Codebase

AI-generated code isn’t inherently bad, but unreviewed AI-generated code is a risk. Here’s how to protect your codebase without banning AI tools.

Code Review Standards

Establish clear standards for AI-assisted PRs:

## AI-Assisted Code Review Checklist

- [ ] Developer can explain the logic without referring to the AI's explanation

- [ ] Error handling covers realistic failure modes

- [ ] No sensitive data is logged or exposed

- [ ] Dependencies are current and approved

- [ ] Security-sensitive code has been manually verified

- [ ] Tests cover edge cases, not just happy path

- [ ] Code follows team conventions (AI doesn't know your conventions)

The “Explain It” Rule

Simple rule: if you can’t explain why the code works, don’t submit it.

This isn’t about being anti-AI. It’s about responsibility. You’re responsible for the code you submit, regardless of who—or what—wrote it.

Automated Checks

Let machines catch what humans miss:

- Linting: Catches style issues and common errors

- Security scanning: Catches known vulnerability patterns

- Dependency auditing: Catches outdated or vulnerable packages

- Type checking: Catches type errors that AI often introduces

These won’t catch everything, but they raise the floor.
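One way to wire the four checks together is a small runner that CI calls before a PR can merge. The sketch below assumes a Python codebase and picks `ruff`, `mypy`, `bandit`, and `pip-audit` as example tools; those choices, and the function names, are assumptions, so substitute whatever your team already uses. The demo at the bottom uses harmless stand-in commands so the sketch runs without any of those tools installed.

```python
import subprocess
import sys

# Assumed tool choices for a Python project; swap in your own.
DEFAULT_CHECKS: dict[str, list[str]] = {
    "lint": ["ruff", "check", "."],
    "types": ["mypy", "."],
    "security": ["bandit", "-r", "."],
    "dependencies": ["pip-audit"],
}


def run_checks(checks: dict[str, list[str]]) -> dict[str, bool]:
    """Run each command and record whether it exited with status 0."""
    results: dict[str, bool] = {}
    for name, command in checks.items():
        try:
            completed = subprocess.run(command, capture_output=True, text=True)
            results[name] = completed.returncode == 0
        except FileNotFoundError:
            # A missing tool counts as a failure so it cannot be skipped silently.
            results[name] = False
    return results


def summarize(results: dict[str, bool]) -> str:
    """Format results as one PASS/FAIL line per check, sorted by name."""
    return "\n".join(
        f"{'PASS' if ok else 'FAIL'}  {name}" for name, ok in sorted(results.items())
    )


# Demo with stand-in commands; in CI you would pass DEFAULT_CHECKS instead.
demo = {
    "passes": [sys.executable, "-c", "raise SystemExit(0)"],
    "fails": [sys.executable, "-c", "raise SystemExit(1)"],
}
print(summarize(run_checks(demo)))
```

In a real pipeline you would call `run_checks(DEFAULT_CHECKS)` and fail the build if any value is `False`.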

Architecture Boundaries

Some parts of the codebase need extra protection:

// auth/README.md

# Authentication Module

This module handles authentication, authorization, and session management.

## AI Tool Policy

- AI-generated code in this module requires ADDITIONAL review by security team

- All changes require explanation of security implications

- No direct commits - all changes through PR with 2+ reviewers

## Why

Authentication bugs have disproportionate impact. A bug here can expose

user data, allow unauthorized access, or create compliance violations.

The extra friction is intentional.

Designate sensitive areas. Make the review bar higher there. This isn’t about banning AI. It’s about proportionate caution.
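A policy like the one in that README is easier to keep if a script enforces the reviewer count instead of relying on memory. The following is a minimal sketch under stated assumptions: the `SENSITIVE_PATHS` table, the default of one reviewer elsewhere, and the function names are hypothetical, and it models only the "2+ reviewers" rule, not the security-team sign-off.

```python
from pathlib import PurePosixPath

# Hypothetical policy table: top-level directory -> minimum approving reviewers.
SENSITIVE_PATHS = {"auth": 2}
DEFAULT_MIN_REVIEWERS = 1  # assumption for the rest of the codebase


def required_reviewers(changed_files: list[str]) -> int:
    """Return the strictest reviewer requirement among the changed files."""
    required = DEFAULT_MIN_REVIEWERS
    for path in changed_files:
        parts = PurePosixPath(path).parts
        for prefix, minimum in SENSITIVE_PATHS.items():
            # Match on the whole first path component, so "authors/" is not "auth/".
            if parts and parts[0] == prefix:
                required = max(required, minimum)
    return required


def may_merge(changed_files: list[str], approvals: int) -> bool:
    """True when the PR has enough approvals for every file it touches."""
    return approvals >= required_reviewers(changed_files)


print(may_merge(["docs/index.md"], approvals=1))
print(may_merge(["auth/session.py", "docs/index.md"], approvals=1))
```

Many code hosts offer built-in branch protection or code-owner rules that cover the same ground; a script like this is mainly useful where those are not available.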

The Uncomfortable Conversation About Code Review

At some point, you’ll have to tell someone their AI-generated code isn’t good enough. This is awkward.

How to Do It

Don’t say: “This AI code is garbage.”

Do say: “I found a few issues we should fix before merging. Let me walk through them.”

Don’t say: “You obviously didn’t review this.”

Do say: “I noticed the error handling doesn’t cover [case]. Let’s add that.”

Don’t say: “Stop using AI.”

Do say: “For this security-sensitive section, let’s write it manually so we understand every line.”

The goal is better code, not shame. Focus on the code, not the tool.

When They Push Back

“But the AI generated it this way!”

Response: “The AI doesn’t know our codebase, our requirements, or our standards. It generated something that might work generically. We need it to work specifically for us.”

“You’re just anti-AI.”

Response: “I use AI tools daily. I’m not anti-AI, I’m pro-quality. We review human-written code too.”

“This will slow us down.”

Response: “Review now or debug later. Your choice, but the bugs don’t disappear just because we merged fast.”

Building AI Literacy (Without Being Preachy)

You know more about AI than most of your team. How do you share that without being That Person?

What Doesn’t Work

- Sending articles with “thought this was interesting!” (nobody reads them)

- Long lectures about LLM internals (eyes glaze over)

- Correcting everyone’s misconceptions (exhausting for everyone)

- Creating mandatory training (resentment)

What Works

Show, don’t tell: “Hey, watch this—” [does something useful with AI in 30 seconds]. More effective than any explanation.

Office hours: “I’m available Thursdays 2-3pm if anyone wants help with AI tools.” Low pressure, self-selecting audience.

Pair problem-solving: When someone’s stuck, offer to try it together with AI. They learn by doing.

Document wins: Share concrete examples in Slack or your team wiki. “Used Claude to refactor the billing module—here’s the prompt that worked.”

Answer questions when asked: The best teaching happens when someone genuinely wants to learn.

One team I worked with created a shared doc called “AI Prompts That Actually Work.” Anyone could add prompts that saved them time. Within a month, it had 50+ entries and was one of the most-visited internal docs. No training required—just peer learning through shared experience.

The Meta-Skill

The most valuable thing you can teach isn’t any specific AI technique. It’s the habit of treating AI like a tool—useful for some things, not others, and always requiring verification.

That mindset is more durable than any specific technique.

Organizational AI Maturity

Different organizations are at different stages. Know where yours is.

Stage 1: Denial

“We don’t need AI. Our developers are good enough.”

What to do: Start using AI tools yourself. Build small wins. Wait for curiosity.

Stage 2: Chaos

“Everyone’s using AI but there’s no consistency or guidelines.”

What to do: Propose simple standards. “Can we agree on a code review checklist for AI-assisted PRs?”

Stage 3: Over-Correction

“We’ve banned all AI tools because of security/quality/legal concerns.”

What to do: Advocate for measured policies. “What if we allowed specific tools with specific guidelines?”

Stage 4: Integration

“We have clear policies, tools, and practices for AI-assisted development.”

What to do: Help maintain and evolve the practices. Share what’s working and what isn’t.

Stage 5: Optimization

“We’re measuring the impact and continuously improving our AI practices.”

What to do: Contribute to the metrics and discussions. This is the goal state—help keep it there.

Most organizations are at Stage 2 or 3. Progress is slow. Be patient.

The Politics of AI Projects

Let’s get cynical for a moment.

AI projects are politically charged. They’re visible. They’re associated with innovation. They make executives feel cutting-edge. This creates perverse incentives.

The Visibility Trap

AI projects get attention. Attention means scrutiny. Scrutiny means pressure. Pressure means shortcuts. Shortcuts mean problems.

Be careful what you volunteer for.

The Success Attribution Problem

AI project succeeds → “Leadership’s visionary AI strategy pays off!”

AI project fails → “Engineering couldn’t execute.”

This isn’t fair. Navigate accordingly.

The Realistic Play

If you’re asked to lead an AI project:

- Get requirements in writing: What does success look like? Be specific.

- Document constraints: What’s the timeline? Budget? What resources do you have?

- Set explicit expectations: “Based on these constraints, here’s what we can realistically deliver.”

- Create checkpoints: “At week 2, we’ll know if the approach is viable.”

- Build in an exit ramp: “If the accuracy isn’t there by week 4, we’ll pivot to [alternative].”

CYA isn’t cynicism. It’s survival.

The Long Game

Here’s the uncomfortable truth: most of the AI chaos in your organization will sort itself out eventually.

The unrealistic expectations will meet reality. The over-enthusiastic will become more measured. The skeptics will gradually adopt tools that prove useful. The executives will move on to the next shiny thing.

Your job isn’t to fix everyone’s misconceptions. Your job is to:

- Do good work with AI where it helps

- Avoid disasters where it doesn’t

- Build credibility through results

- Stay sane while everyone else figures it out

The developers who’ll thrive aren’t the AI evangelists or the AI skeptics. They’re the ones who quietly use these tools effectively, ship quality work, and let the results speak.

Be that person.

What You’ve Learned

After this chapter, you should be able to:

- Identify different types of AI believers and adapt your approach

- Set realistic expectations without killing good ideas

- Avoid the “quick prototype” trap

- Handle the overconfident junior developer situation

- Protect your codebase with reasonable standards

- Build AI literacy in your team without being preachy

- Navigate the politics of AI projects

- Stay sane while your organization figures this out

This is the last chapter. If you’ve made it this far, you have everything you need to use AI tools effectively, build real features, and survive the organizational chaos around you.

Now close this course and go build something.

Good luck. You probably won’t need it anymore.