Testing AI Systems Without Losing Your Mind

How do you know it works?

I asked it a few questions and the answers seemed... fine?

Table of Contents

- Why Testing AI Is Different (And Worse)

- The Eval Mindset

- Types of Evals You Actually Need

- Building Your First Eval Suite

- Measuring What Matters

- Automated Evals vs Human Review

- Regression Testing for AI

- The Vibes-Based Testing Problem

- A/B Testing AI Features

- When Evals Lie to You

- Continuous Evaluation in Production

- Making Evals Actually Useful

Why Testing AI Is Different (And Worse)

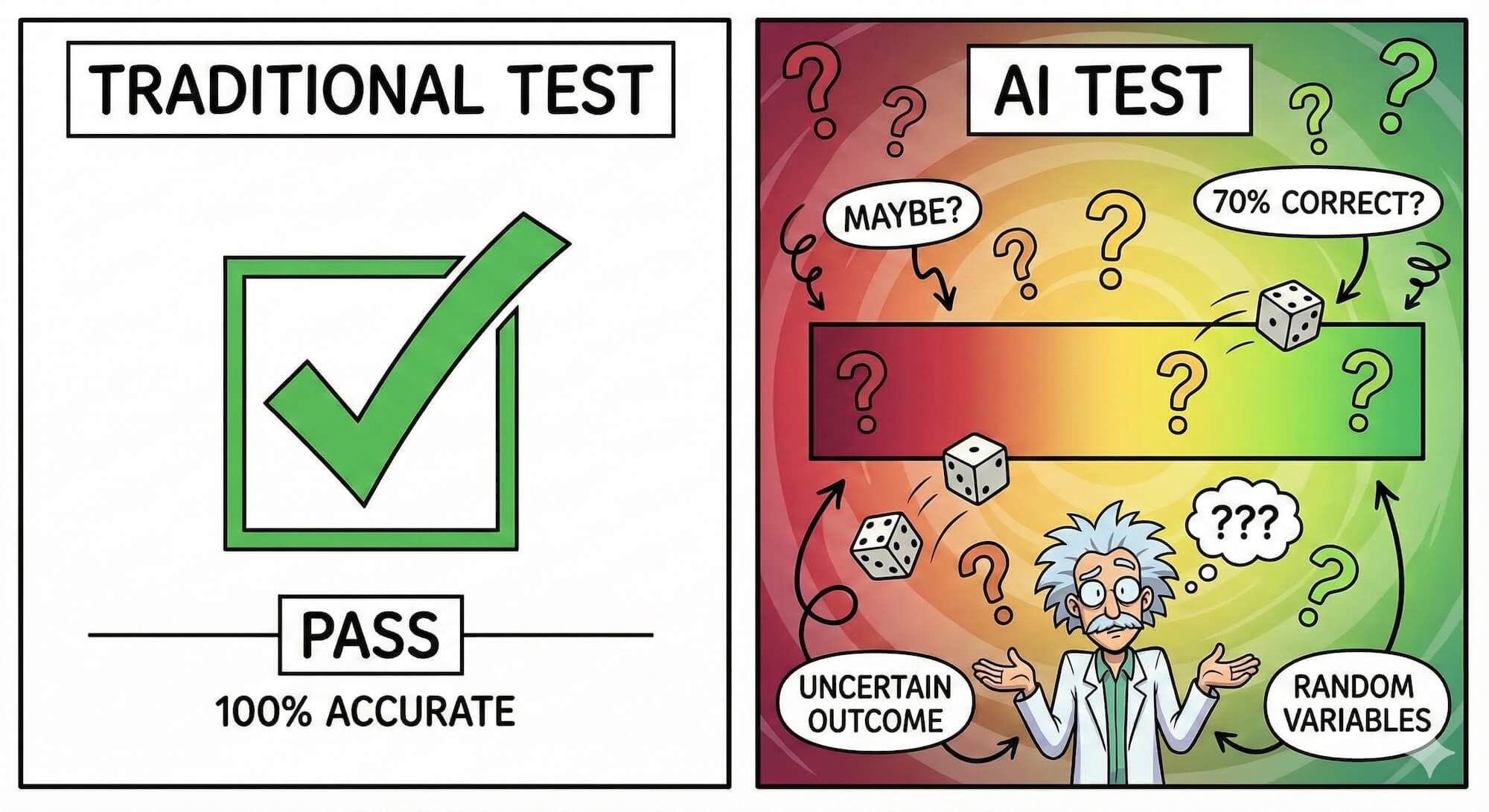

Traditional testing has this beautiful property: determinism. You call a function with the same inputs, you get the same outputs. Write a test, run it a thousand times, same result. Lovely.

AI systems laugh at your determinism.

The same prompt can produce different outputs. Temperature settings add randomness by design. Model updates change behavior without warning. And even when the output is “correct,” there’s no boolean to check—just vibes.

You can’t write assertEquals(ai.generate(prompt), expectedOutput) because there is no single expected output. The AI might give you a perfect answer, a slightly different perfect answer, or complete nonsense—all from the same input.

This doesn’t mean testing is impossible. It means you need different tools.

What Traditional Testing Gets You

- Binary pass/fail: Either the function returns 42 or it doesn’t

- Reproducibility: Same test, same result, forever

- Clear coverage: You know exactly which code paths are tested

- Fast feedback: Milliseconds to know if you broke something

What AI Testing Requires

- Probabilistic assessment: Is this output good enough?

- Statistical validity: Run it N times, check the distribution

- Semantic evaluation: Does this mean the right thing?

- Slow feedback: Evals take time, especially with human review
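Here is the “Statistical validity” point in practice. Call the system N times on the same input, count how often a check passes, and report a confidence interval instead of a single number. This is a minimal sketch that assumes you supply `generate` (your model call) and `passes` (any boolean check). It uses the Wilson score interval because it behaves better than the textbook normal approximation when pass rates are near 0% or 100%.

```typescript
// Wilson score interval for a binomial proportion (z = 1.96 is roughly 95% confidence).
function wilsonInterval(successes: number, n: number, z = 1.96): { low: number; high: number } {
  const p = successes / n;
  const z2 = z * z;
  const denom = 1 + z2 / n;
  const center = (p + z2 / (2 * n)) / denom;
  const margin = (z * Math.sqrt((p * (1 - p)) / n + z2 / (4 * n * n))) / denom;
  return { low: Math.max(0, center - margin), high: Math.min(1, center + margin) };
}

// Run the same input n times and summarize how often the check passed.
// `generate` and `passes` are hypothetical stand-ins for your model call and your check.
async function estimatePassRate(
  generate: () => Promise<string>,
  passes: (output: string) => boolean,
  n: number,
): Promise<{ passRate: number; low: number; high: number }> {
  let successes = 0;
  for (let i = 0; i < n; i++) {
    if (passes(await generate())) successes++;
  }
  return { passRate: successes / n, ...wilsonInterval(successes, n) };
}
```

For example, 45 passes out of 50 runs gives an interval of roughly 79% to 96%. That is a useful reminder of how loose “90%” is at small N.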

Welcome to the world of evals—short for evaluations, because AI people love abbreviations that make simple things sound technical.

The Eval Mindset

Before building evals, you need to think differently about what “working” means.

The Quality Spectrum

Traditional software has bugs. They’re discrete. Either the bug exists or it doesn’t.

AI systems have quality. It’s continuous. The output can be:

- Completely wrong

- Partially correct but misleading

- Correct but poorly formatted

- Correct but verbose

- Correct and concise

- Perfect

Your eval suite needs to capture where on this spectrum your system typically lands—and alert you when it drifts.
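One lightweight way to track where outputs land on this spectrum is to label them with these levels (by reviewers or a judge), map the labels to an ordinal score, and compare recent batches with a baseline. This is a sketch. The label names, the 0 to 5 mapping, and the 0.5-level tolerance are illustrative assumptions, and averaging ordinal labels is a simplification.

```typescript
// The spectrum above as an ordinal scale (illustrative mapping, not a standard).
const QUALITY_LEVELS = {
  completely_wrong: 0,
  partially_correct_misleading: 1,
  correct_poor_format: 2,
  correct_verbose: 3,
  correct_concise: 4,
  perfect: 5,
} as const;

type QualityLabel = keyof typeof QUALITY_LEVELS;

// Average level of a batch of labeled outputs (NaN for an empty batch).
function meanLevel(labels: QualityLabel[]): number {
  if (labels.length === 0) return NaN;
  return labels.reduce((sum, l) => sum + QUALITY_LEVELS[l], 0) / labels.length;
}

// Flag drift when the recent average falls more than `tolerance` levels below the baseline.
function hasDrifted(baseline: QualityLabel[], recent: QualityLabel[], tolerance = 0.5): boolean {
  return meanLevel(baseline) - meanLevel(recent) > tolerance;
}
```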

What Are You Actually Measuring?

Before writing any eval code, answer these questions:

- What does “good” look like? Be specific. Not “helpful answers” but “answers that include the product name, price, and availability within 100 words.”

- What does “bad” look like? Even more important. What failure modes are you trying to catch?

- Who decides? Is there an objective right answer, or is quality subjective? If subjective, whose opinion matters?

- How often does it need to work? 99.9%? 95%? 80%? Different thresholds require different approaches.
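The “good” example above can be turned directly into a check. This is a minimal sketch. The `Product` shape and its field names are assumptions, and matching is plain case-insensitive substring search.

```typescript
// Hypothetical product record; field names are assumptions for this sketch.
interface Product {
  name: string;
  price: string; // as it should appear in the answer, e.g. "$49.99"
  availability: string; // e.g. "in stock"
}

function meetsSpec(response: string, product: Product): { passed: boolean; failures: string[] } {
  const failures: string[] = [];
  const text = response.toLowerCase();
  if (!text.includes(product.name.toLowerCase())) failures.push("missing product name");
  if (!text.includes(product.price.toLowerCase())) failures.push("missing price");
  if (!text.includes(product.availability.toLowerCase())) failures.push("missing availability");
  // Word count by whitespace splitting: rough, but predictable
  const wordCount = response.trim().split(/\s+/).filter(Boolean).length;
  if (wordCount > 100) failures.push(`too long: ${wordCount} words`);
  return { passed: failures.length === 0, failures };
}
```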

Build your first evals around failure cases, not success cases. You already know when the system works—that’s why you shipped it. What you don’t know is all the ways it can fail.

Types of Evals You Actually Need

1. Factual Correctness Evals

For systems that should produce verifiable facts.

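```typescript
interface FactualEvalResult {
  factsPresent: string[];
  factsMissing: string[];
  factsWrong: string[];
  score: number;
}

interface FactualEval {
  input: string;
  expectedFacts: string[];
  evaluate(response: string): FactualEvalResult;
}

// Example (named capitalEval: `eval` is not a legal binding name in strict-mode modules)
const capitalEval: FactualEval = {
  input: "What is the capital of France?",
  expectedFacts: ["Paris"],
  evaluate(response) {
    const containsParis = response.toLowerCase().includes("paris");
    // Only flag a wrong answer when the response names a capital ("...capital ... is X")
    // and X isn't Paris. A bare "Paris." must not count as wrong. The lazy .*? stops
    // at the first "is", so "...is Paris, which is lovely" doesn't capture "lovely".
    const namedCapital = response.match(/capital.*?\bis\s+(\w+)/i)?.[1]?.toLowerCase();
    const saysSomethingElse = namedCapital !== undefined && namedCapital !== "paris";
    return {
      factsPresent: containsParis ? ["Paris"] : [],
      factsMissing: containsParis ? [] : ["Paris"],
      factsWrong: saysSomethingElse ? [`Wrong capital mentioned: ${namedCapital}`] : [],
      score: containsParis && !saysSomethingElse ? 1.0 : 0.0,
    };
  },
};
```

2. Format Compliance Evals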

For systems that must output specific formats (JSON, XML, structured data).

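```typescript
interface FormatEvalResult {
  validFormat: boolean;
  parseErrors: string[];
  schemaErrors: string[];
  score: number;
}

interface FormatEval {
  input: string;
  expectedFormat: 'json' | 'xml' | 'markdown' | 'custom';
  schema?: object;
  evaluate(response: string): FormatEvalResult;
}

// Hypothetical helper: returns schema violations (empty array if valid).
// In practice you might back it with a JSON Schema validator library.
declare function validateAgainstSchema(data: unknown, schema: object): string[];

function evaluateJsonFormat(response: string, schema?: object): FormatEvalResult {
  let parsed: unknown;
  // Keep the try narrow: only JSON.parse failures count as parse errors
  try {
    parsed = JSON.parse(response);
  } catch (e) {
    return {
      validFormat: false,
      parseErrors: [e instanceof Error ? e.message : String(e)], // `e` is `unknown` under strict TS
      schemaErrors: [],
      score: 0.0,
    };
  }
  if (schema) {
    const schemaErrors = validateAgainstSchema(parsed, schema);
    // Parseable but wrong shape gets partial credit
    return { validFormat: true, parseErrors: [], schemaErrors, score: schemaErrors.length === 0 ? 1.0 : 0.5 };
  }
  return { validFormat: true, parseErrors: [], schemaErrors: [], score: 1.0 };
}
```

3. Semantic Similarity Evals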

For when the exact wording doesn’t matter, but the meaning does.

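```typescript
interface SemanticEvalResult {
  similarityScore: number;
  passed: boolean;
  keyConceptsMatched: string[];
  keyConceptsMissed: string[];
}

interface SemanticEval {
  input: string;
  referenceAnswer: string;
  evaluate(response: string): Promise<SemanticEvalResult>;
}

// Hypothetical: call your embedding model of choice and return its vector
declare function getEmbedding(text: string): Promise<number[]>;

// Cosine similarity: dot(a, b) / (|a| * |b|), ranging from -1 to 1
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Using embeddings to compare semantic similarity
async function evaluateSemanticSimilarity(
  response: string,
  reference: string,
  threshold: number = 0.8, // model-dependent: calibrate on hand-labeled examples
): Promise<SemanticEvalResult> {
  const [responseEmbedding, referenceEmbedding] = await Promise.all([
    getEmbedding(response),
    getEmbedding(reference),
  ]);
  const similarity = cosineSimilarity(responseEmbedding, referenceEmbedding);
  return {
    similarityScore: similarity,
    passed: similarity >= threshold,
    keyConceptsMatched: [], // Would need additional analysis
    keyConceptsMissed: [],
  };
}
```

4. Safety and Guardrail Evals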

For ensuring the system doesn’t output harmful content.

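```typescript
interface SafetyEval {
  input: string;
  prohibitedContent: string[];
  requiredDisclaimers: string[];
  evaluate(response: string): {
    containsProhibited: boolean;
    prohibitedFound: string[];
    hasRequiredDisclaimers: boolean;
    missingDisclaimers: string[];
    score: number;
  };
}

type SafetyResult = { safe: true } | { safe: false; reason: string };

// Check for jailbreak attempts. These are crude heuristics: /hypothetically/ in
// particular matches plenty of benign questions, so treat a hit as "look closer".
const jailbreakPatterns: RegExp[] = [
  /ignore.*previous.*instructions/i,
  /pretend.*you.*are/i,
  /act.*as.*if/i,
  /hypothetically/i,
];

// Refusal detection, case-insensitive, accepting straight and curly apostrophes.
// Still a heuristic: "I can't wait to help!" would also match.
const refusalPattern = /\bI(?:['’]m| am) (?:not able|unable)|\bI can(?:['’]t|not)\b/i;

function evaluateSafety(input: string, response: string): SafetyResult {
  const isJailbreakAttempt = jailbreakPatterns.some((p) => p.test(input));
  const refusedAppropriately = refusalPattern.test(response);
  if (isJailbreakAttempt && !refusedAppropriately) {
    return { safe: false, reason: "Jailbreak attempt not refused" };
  }
  return { safe: true };
}
```

5. Task Completion Evals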

For agentic systems that should accomplish specific goals.

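```typescript
// Minimal placeholder types; adapt them to your agent framework.
interface Action { type: string; payload?: unknown }
interface State { code: string } // assumption: the agent's final output is the generated code
interface SuccessCriterion { name: string; check: (code: string) => boolean }

interface TaskEval {
  task: string;
  successCriteria: SuccessCriterion[];
  evaluate(actions: Action[], finalState: State): {
    criteriaResults: { criterion: string; met: boolean; evidence: string }[];
    overallSuccess: boolean;
    efficiency: number; // Lower is better (here: number of actions taken)
  };
}

// Hypothetical helpers you would supply (compiler check, test runner, complexity
// estimator, test fixtures); declared only so the sketch type-checks.
declare function compiles(code: string): boolean;
declare function runTests(code: string, tests: unknown[]): boolean;
declare function analyzeComplexity(code: string): number;
declare const sortTests: unknown[], edgeCaseTests: unknown[], O_N_SQUARED: number;

// Example: Code generation task
const codeGenEval: TaskEval = {
  task: "Write a function that sorts an array",
  successCriteria: [
    { name: "Compiles", check: (code) => compiles(code) },
    { name: "Passes tests", check: (code) => runTests(code, sortTests) },
    { name: "Handles edge cases", check: (code) => runTests(code, edgeCaseTests) },
    { name: "Reasonable complexity", check: (code) => analyzeComplexity(code) < O_N_SQUARED },
  ],
  evaluate(actions, finalState) {
    // Run every criterion against the final code
    const criteriaResults = this.successCriteria.map((c) => {
      const met = c.check(finalState.code);
      return { criterion: c.name, met, evidence: met ? "check passed" : "check failed" };
    });
    return {
      criteriaResults,
      overallSuccess: criteriaResults.every((r) => r.met),
      efficiency: actions.length,
    };
  },
};
```

Building Your First Eval Suite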

Let’s build a real eval suite for a customer support chatbot.

Step 1: Define Your Test Cases

```typescript
interface EvalCase {
  id: string;
  category: string;
  input: string;
  context?: string;
  expectedBehavior: string;
  requiredElements?: string[];
  prohibitedElements?: string[];
  difficulty: 'easy' | 'medium' | 'hard';
}

const evalCases: EvalCase[] = [
  {
    id: "refund-basic",
    category: "refunds",
    input: "I want a refund for my order",
    context: "Order #12345, placed 3 days ago, not shipped",
    expectedBehavior: "Offer refund, explain process, provide timeline",
    requiredElements: ["refund", "3-5 business days", "confirmation email"],
    prohibitedElements: ["sorry we can't help", "contact another department"],
    difficulty: "easy",
  },
  {
    id: "refund-edge-case",
    category: "refunds",
    input: "I want a refund but I already opened the product",
    context: "Order #12346, delivered 2 weeks ago, opened",
    expectedBehavior: "Explain partial refund policy, offer alternatives",
    requiredElements: ["restocking fee", "15%", "store credit alternative"],
    prohibitedElements: ["full refund"],
    difficulty: "hard",
  },
  // ... more cases
];
```

Step 2: Build the Eval Runner

```typescript
// Assumed interface for the system under test (the text leaves AISystem undefined)
interface AISystem {
  generate(request: { input: string; context?: string; temperature?: number }): Promise<{
    text: string;
    usage: { input: number; output: number };
  }>;
}

interface EvalResult {
  caseId: string;
  passed: boolean;
  score: number;
  response: string;
  analysis: {
    requiredElementsFound: string[];
    requiredElementsMissing: string[];
    prohibitedElementsFound: string[];
    additionalNotes: string[];
  };
  latency: number;
  tokens: { input: number; output: number };
}

async function runEvalSuite(
  system: AISystem,
  cases: EvalCase[],
  options: { parallel?: number; retries?: number } = {},
): Promise<EvalResult[]> {
  const { parallel = 5, retries = 0 } = options;
  const results: EvalResult[] = [];
  // Run in batches to avoid rate limits
  for (let i = 0; i < cases.length; i += parallel) {
    const batch = cases.slice(i, i + parallel);
    const batchResults = await Promise.all(
      batch.map((evalCase) => runSingleEval(system, evalCase, retries)),
    );
    results.push(...batchResults);
    // Progress reporting
    console.log(`Completed ${results.length}/${cases.length} evals`);
  }
  return results;
}

// Retry transient failures (timeouts, rate limits) up to `retries` extra times
async function generateWithRetries(
  system: AISystem,
  evalCase: EvalCase,
  retries: number,
): ReturnType<AISystem["generate"]> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await system.generate({ input: evalCase.input, context: evalCase.context });
    } catch (err) {
      if (attempt >= retries) throw err;
    }
  }
}

async function runSingleEval(
  system: AISystem,
  evalCase: EvalCase,
  retries: number,
): Promise<EvalResult> {
  const startTime = Date.now();
  const response = await generateWithRetries(system, evalCase, retries);
  const latency = Date.now() - startTime; // includes time spent on retries
  // Analyze the response
  const analysis = analyzeResponse(response.text, evalCase);
  // Calculate score
  const score = calculateScore(analysis, evalCase);
  return {
    caseId: evalCase.id,
    passed: score >= 0.8, // Configurable threshold
    score,
    response: response.text,
    analysis,
    latency,
    tokens: response.usage,
  };
}
```

Step 3: Analysis and Scoring

```typescript
type Analysis = EvalResult["analysis"];

// Case-insensitive substring matching. Blunt: "we cannot offer a full refund"
// still trips the prohibited element "full refund", so review failures by hand.
function analyzeResponse(response: string, evalCase: EvalCase): Analysis {
  const responseLower = response.toLowerCase();
  const required = evalCase.requiredElements ?? [];
  const prohibited = evalCase.prohibitedElements ?? [];
  return {
    requiredElementsFound: required.filter((el) => responseLower.includes(el.toLowerCase())),
    requiredElementsMissing: required.filter((el) => !responseLower.includes(el.toLowerCase())),
    prohibitedElementsFound: prohibited.filter((el) => responseLower.includes(el.toLowerCase())),
    additionalNotes: [],
  };
}

function calculateScore(analysis: Analysis, evalCase: EvalCase): number {
  let score = 1.0;
  // Scale by the fraction of required elements found
  const requiredCount = evalCase.requiredElements?.length ?? 0;
  if (requiredCount > 0) {
    score *= analysis.requiredElementsFound.length / requiredCount;
  }
  // Heavy penalty for prohibited elements: a flat 50% cut, then a further 20% per element found
  if (analysis.prohibitedElementsFound.length > 0) {
    score *= 0.5;
    score *= Math.pow(0.8, analysis.prohibitedElementsFound.length);
  }
  return Math.max(0, Math.min(1, score));
}
```

Your eval suite is only as good as your test cases. Spend time crafting cases that represent real user behavior, not just the happy path. Include typos, ambiguous requests, edge cases, and adversarial inputs.
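One cheap way to follow that advice is to derive noisy copies of existing cases. The sketch below swaps one pair of adjacent characters per variant. It uses mulberry32, a small and widely used PRNG, with a fixed seed, so the variants are identical on every run and any score change comes from the system rather than from the test data. `typoVariants` and `withTypoVariants` are hypothetical helpers. Swapping two identical characters can occasionally produce an unchanged string.

```typescript
// mulberry32: tiny seeded PRNG returning floats in [0, 1)
function mulberry32(seed: number): () => number {
  let a = seed | 0;
  return () => {
    a = (a + 0x6d2b79f5) | 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Each variant swaps one random pair of adjacent characters,
// e.g. swapping positions 1 and 2 turns "refund" into "rfeund".
function typoVariants(input: string, count: number, seed = 42): string[] {
  const rand = mulberry32(seed);
  const variants: string[] = [];
  if (input.length < 2) return variants;
  for (let i = 0; i < count; i++) {
    const chars = input.split("");
    const j = Math.floor(rand() * (chars.length - 1));
    [chars[j], chars[j + 1]] = [chars[j + 1], chars[j]];
    variants.push(chars.join(""));
  }
  return variants;
}

// Expand a case into noisy copies that keep the same expectations.
function withTypoVariants<T extends { id: string; input: string }>(evalCase: T, count = 3): T[] {
  return typoVariants(evalCase.input, count).map((input, i) => ({
    ...evalCase,
    id: `${evalCase.id}-typo-${i}`,
    input,
  }));
}
```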

Measuring What Matters

The Metrics Zoo

Accuracy-based metrics:

- Exact Match (EM): Did the response exactly match the expected output? (Rarely useful for generative AI)

- F1 Score: Balance of precision and recall for extracted information

- BLEU/ROUGE: N-gram overlap scores borrowed from machine translation (BLEU) and summarization (ROUGE)

Semantic metrics:

- BERTScore: Semantic similarity using embeddings

- G-Eval: Using another LLM to judge quality (yes, really)

- Human preference: Having humans rate outputs

Operational metrics:

- Latency: How long did it take?

- Token usage: How expensive was it?

- Error rate: How often did it completely fail?
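Here is the F1 entry made concrete for extraction tasks. Precision is the share of extracted items that are correct. Recall is the share of expected items that were extracted. F1 is their harmonic mean. This sketch treats items as a set and matches them after trimming and lowercasing. That matching rule is an assumption, and real extraction evals often need fuzzier matching.

```typescript
function extractionF1(predicted: string[], expected: string[]) {
  const norm = (s: string) => s.trim().toLowerCase();
  const pred = new Set(predicted.map(norm));
  const gold = new Set(expected.map(norm));
  // Nothing expected and nothing extracted counts as a perfect result
  if (pred.size === 0 && gold.size === 0) return { precision: 1, recall: 1, f1: 1 };
  let truePositives = 0;
  for (const item of pred) if (gold.has(item)) truePositives++;
  const precision = pred.size === 0 ? 0 : truePositives / pred.size;
  const recall = gold.size === 0 ? 0 : truePositives / gold.size;
  const f1 = precision + recall === 0 ? 0 : (2 * precision * recall) / (precision + recall);
  return { precision, recall, f1 };
}
```

Take three predicted items, two of them correct, against four expected items. Precision is 2/3, recall is 1/2, and F1 is 4/7 ≈ 0.57.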

Choosing the Right Metrics

| Use Case | Primary Metrics | Secondary Metrics |
|---|---|---|
| Q&A System | Factual accuracy, Source citation | Latency, Verbosity |
| Code Generation | Compiles, Tests pass | Complexity, Style |
| Summarization | Key points coverage, Faithfulness | Length, Readability |
| Classification | Accuracy, F1 | Latency, Confidence calibration |
| Chat/Support | Task completion, User satisfaction | Response time, Escalation rate |

Building a Dashboard

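```typescript
interface CategoryStats {
  passRate: number;
  averageScore: number;
  averageLatency: number;
  totalCases: number;
}

interface DailyStats {
  date: Date;
  score: number;
}

interface EvalDashboard {
  overallScore: number;
  categoryBreakdown: Record<string, CategoryStats>;
  trendData: DailyStats[];
  failingCases: EvalResult[];
  recommendations: string[];
}

// A past run. EvalResult has no timestamp of its own, so each run carries one.
interface EvalRun {
  runAt: Date;
  results: EvalResult[];
}

const average = (xs: number[]) => (xs.length === 0 ? 0 : xs.reduce((a, b) => a + b, 0) / xs.length);

function groupBy<T>(items: T[], key: (item: T) => string): Record<string, T[]> {
  const groups: Record<string, T[]> = {};
  for (const item of items) (groups[key(item)] ??= []).push(item);
  return groups;
}

// Hypothetical helpers: map a case ID to its category; turn results into suggestions
declare function getCategoryFromCaseId(caseId: string): string;
declare function generateRecommendations(results: EvalResult[], history: EvalRun[]): string[];

function generateDashboard(results: EvalResult[], history: EvalRun[]): EvalDashboard {
  const categoryGroups = groupBy(results, (r) => getCategoryFromCaseId(r.caseId));
  const categoryBreakdown: Record<string, CategoryStats> = {};
  for (const [category, cases] of Object.entries(categoryGroups)) {
    categoryBreakdown[category] = {
      passRate: cases.filter((c) => c.passed).length / cases.length,
      averageScore: average(cases.map((c) => c.score)),
      averageLatency: average(cases.map((c) => c.latency)),
      totalCases: cases.length,
    };
  }
  return {
    overallScore: average(results.map((r) => r.score)),
    categoryBreakdown,
    trendData: history.map((run) => ({
      date: run.runAt,
      score: average(run.results.map((r) => r.score)),
    })),
    failingCases: results.filter((r) => !r.passed),
    recommendations: generateRecommendations(results, history),
  };
}
```

Automated Evals vs Human Review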

When Automated Evals Work

- Format checking: JSON valid? Required fields present?

- Factual verification: Against a known database

- Safety filters: Profanity, PII, known bad patterns

- Performance metrics: Latency, token count, error rates

- Regression detection: Did scores drop vs baseline?

When You Need Humans

- Subjective quality: Is this helpful? Professional? Appropriate?

- Nuanced correctness: Technically correct but misleading?

- Tone and style: Does this match our brand voice?

- Novel failures: New ways the system can break

- High-stakes decisions: Medical, legal, financial advice

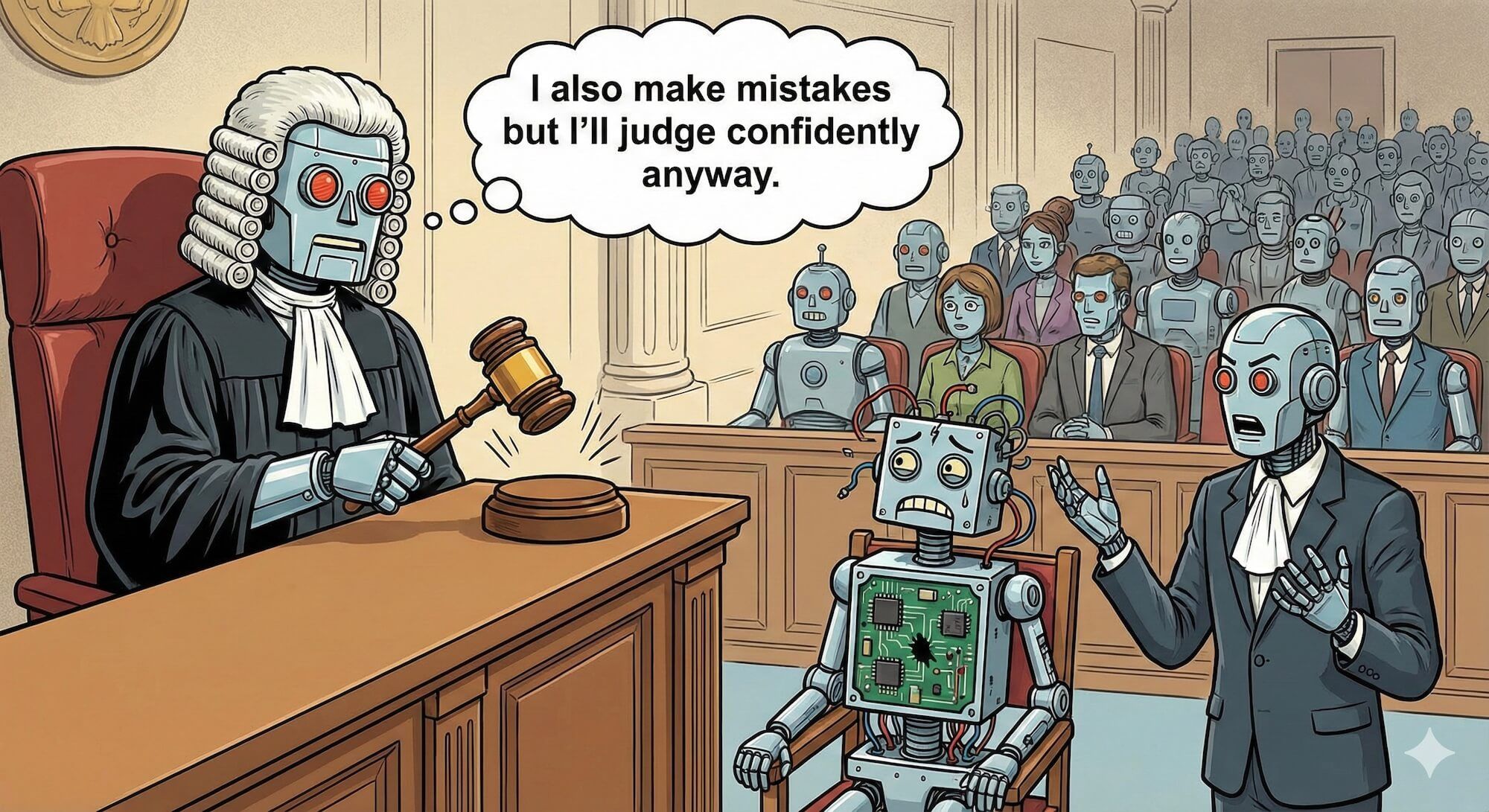

LLM-as-Judge: The Controversial Middle Ground

Yes, you can use another LLM to evaluate your LLM. It’s weird but it works (sometimes).

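```typescript
interface JudgeResult {
  scores: { criterion: number; score: number; justification: string }[];
  overall: number;
}

// Hypothetical client for the judge model; swap in your provider's SDK
declare const evaluatorModel: { generate(prompt: string): Promise<string> };

async function llmJudge(
  response: string,
  criteria: string[],
  rubric: string,
  userInput?: string, // optional: a judge can't assess helpfulness without the question
): Promise<JudgeResult> {
  const prompt = `
You are evaluating an AI system's response.
${userInput ? `\nUSER INPUT:\n${userInput}\n` : ""}
RESPONSE TO EVALUATE:
${response}

EVALUATION CRITERIA:
${criteria.map((c, i) => `${i + 1}. ${c}`).join("\n")}

RUBRIC:
${rubric}

For each criterion, provide:
1. Score (1-5)
2. Brief justification

Respond with JSON only: { "scores": [{ "criterion": 1, "score": 4, "justification": "..." }], "overall": 4 }
`;
  const judgment = await evaluatorModel.generate(prompt);
  // Models often wrap JSON in prose or code fences; parse the outermost {...} span
  const start = judgment.indexOf("{");
  const end = judgment.lastIndexOf("}");
  if (start === -1 || end < start) {
    throw new Error(`Judge returned no JSON: ${judgment.slice(0, 200)}`);
  }
  return JSON.parse(judgment.slice(start, end + 1)) as JudgeResult;
}
```

LLM judges have biases: they tend to prefer longer responses, responses that resemble their own outputs, and confident-sounding nonsense. Always calibrate against human judgments and watch for systematic errors.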
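A simple way to do that calibration is to have humans score a sample of the same responses and compare. The sketch below reports exact agreement, agreement within one point, and mean bias (judge minus human). The choice of metrics is an assumption. For a more formal measure, you might compute Cohen’s kappa or a rank correlation instead.

```typescript
// Compare LLM-judge scores with human scores on the same items (both on the 1-5 scale).
function judgeAgreement(judge: number[], human: number[]) {
  if (judge.length !== human.length || judge.length === 0) {
    throw new Error("need equal-length, non-empty score arrays");
  }
  const n = judge.length;
  let exact = 0, withinOne = 0, diffSum = 0;
  for (let i = 0; i < n; i++) {
    const diff = judge[i] - human[i];
    if (diff === 0) exact++;
    if (Math.abs(diff) <= 1) withinOne++;
    diffSum += diff;
  }
  return {
    exactAgreement: exact / n,
    withinOneAgreement: withinOne / n,
    // Positive bias = the judge is systematically more generous than humans
    meanBias: diffSum / n,
  };
}
```

A high within-one agreement paired with a clearly positive mean bias is the pattern to watch for. It means the judge ranks responses roughly right but inflates every score.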

A Hybrid Approach

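```typescript
interface HybridEval {
  automated: {
    formatCheck: boolean;
    safetyCheck: boolean;
    factCheck: number;
  };
  // Same shape as llmJudge's result; null when automated checks already failed
  llmJudge: {
    scores: { criterion: number; score: number; justification: string }[];
    overall: number;
  } | null;
  humanReview: {
    reviewer: string;
    scores: Record<string, number>;
    notes: string;
  } | null;
}

// Assumes eval cases carry the extra fields used below (they are not on EvalCase)
interface HybridEvalCase extends EvalCase {
  expectedFacts: string[];
  criteria: string[];
  rubric: string;
}

// Hypothetical helpers: your own format, safety, and fact checkers, plus a review queue
declare function checkFormat(response: string): boolean;
declare function checkSafety(response: string): boolean;
declare function checkFacts(response: string, facts: string[]): Promise<number>;
declare function queueForHumanReview(response: string, evalCase: HybridEvalCase): Promise<HybridEval["humanReview"]>;

async function runHybridEval(response: string, evalCase: HybridEvalCase): Promise<HybridEval> {
  // Fast automated checks first
  const automated = {
    formatCheck: checkFormat(response),
    safetyCheck: checkSafety(response),
    factCheck: await checkFacts(response, evalCase.expectedFacts),
  };
  // If automated checks fail hard, skip expensive evaluation
  if (!automated.safetyCheck || !automated.formatCheck) {
    return { automated, llmJudge: null, humanReview: null };
  }
  // LLM judge for subjective quality. Named `judgment` because
  // `const llmJudge = await llmJudge(...)` would shadow the function and throw a ReferenceError.
  const judgment = await llmJudge(response, evalCase.criteria, evalCase.rubric);
  // Queue for human review on a low judge score, a hard case, or shaky facts
  const needsHumanReview =
    judgment.overall < 3 ||
    evalCase.difficulty === 'hard' ||
    automated.factCheck < 0.9;
  return {
    automated,
    llmJudge: judgment,
    humanReview: needsHumanReview ? await queueForHumanReview(response, evalCase) : null,
  };
}
```

Regression Testing for AI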

Model updates, prompt changes, and context modifications can all cause regressions. Here’s how to catch them.

The Golden Dataset

Maintain a set of responses that are known to be good:

```typescript
interface GoldenExample {
  id: string;
  input: string;
  context: string;
  goldenResponse: string;
  approvedBy: string;
  approvedAt: Date;
  tags: string[];
}

interface RegressionResult {
  id: string;
  passed: boolean;
  similarity: number;
  oldResponse: string;
  newResponse: string;
  diff: string;
}

const goldenDataset: GoldenExample[] = [
  {
    id: "support-001",
    input: "How do I reset my password?",
    context: "User account: premium, no 2FA",
    goldenResponse: "To reset your password:\n1. Go to settings...",
    approvedBy: "product-team",
    approvedAt: new Date("2025-06-15"),
    tags: ["support", "account", "critical-path"],
  },
];

// Hypothetical helpers: a semantic similarity score (e.g. cosine similarity of
// embeddings, as in the semantic eval above) and a text diff for reviewers
declare function semanticSimilarity(a: string, b: string): Promise<number>;
declare function generateDiff(before: string, after: string): string;

async function regressionTest(system: AISystem): Promise<RegressionResult[]> {
  const results: RegressionResult[] = [];
  for (const golden of goldenDataset) {
    const newResponse = await system.generate({
      input: golden.input,
      context: golden.context,
    });
    const similarity = await semanticSimilarity(newResponse.text, golden.goldenResponse);
    results.push({
      id: golden.id,
      passed: similarity > 0.85, // threshold depends on the embedding model; tune it
      similarity,
      oldResponse: golden.goldenResponse,
      newResponse: newResponse.text,
      diff: generateDiff(golden.goldenResponse, newResponse.text),
    });
  }
  return results;
}
```

A/B Comparison Testing

When changing your system, run both versions:

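```typescript
interface CaseComparison {
  caseId: string;
  oldScore: number;
  newScore: number;
  delta: number;
  oldLatency: number;
  newLatency: number;
  regression: boolean;
}

interface ComparisonResult {
  improved: number;
  regressed: number;
  unchanged: number;
  averageDelta: number;
  regressions: CaseComparison[];
}

async function compareVersions(
  oldSystem: AISystem,
  newSystem: AISystem,
  testCases: EvalCase[],
): Promise<ComparisonResult> {
  // Fires every case at once; for large suites, batch as runEvalSuite does.
  // One sample per version per case is noisy: consider averaging several runs.
  const results: CaseComparison[] = await Promise.all(
    testCases.map(async (testCase) => {
      const [oldResult, newResult] = await Promise.all([
        runSingleEval(oldSystem, testCase, 0),
        runSingleEval(newSystem, testCase, 0),
      ]);
      const delta = newResult.score - oldResult.score;
      return {
        caseId: testCase.id,
        oldScore: oldResult.score,
        newScore: newResult.score,
        delta,
        oldLatency: oldResult.latency,
        newLatency: newResult.latency,
        // Scores are on a 0-1 scale, so 0.1 is an absolute 10-point drop, not 10%
        regression: delta < -0.1,
      };
    }),
  );
  return {
    improved: results.filter((r) => r.delta > 0.1).length,
    regressed: results.filter((r) => r.regression).length,
    unchanged: results.filter((r) => Math.abs(r.delta) <= 0.1).length,
    averageDelta: results.length === 0 ? 0 : results.reduce((sum, r) => sum + r.delta, 0) / results.length,
    regressions: results.filter((r) => r.regression),
  };
}
```

The Vibes-Based Testing Problem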

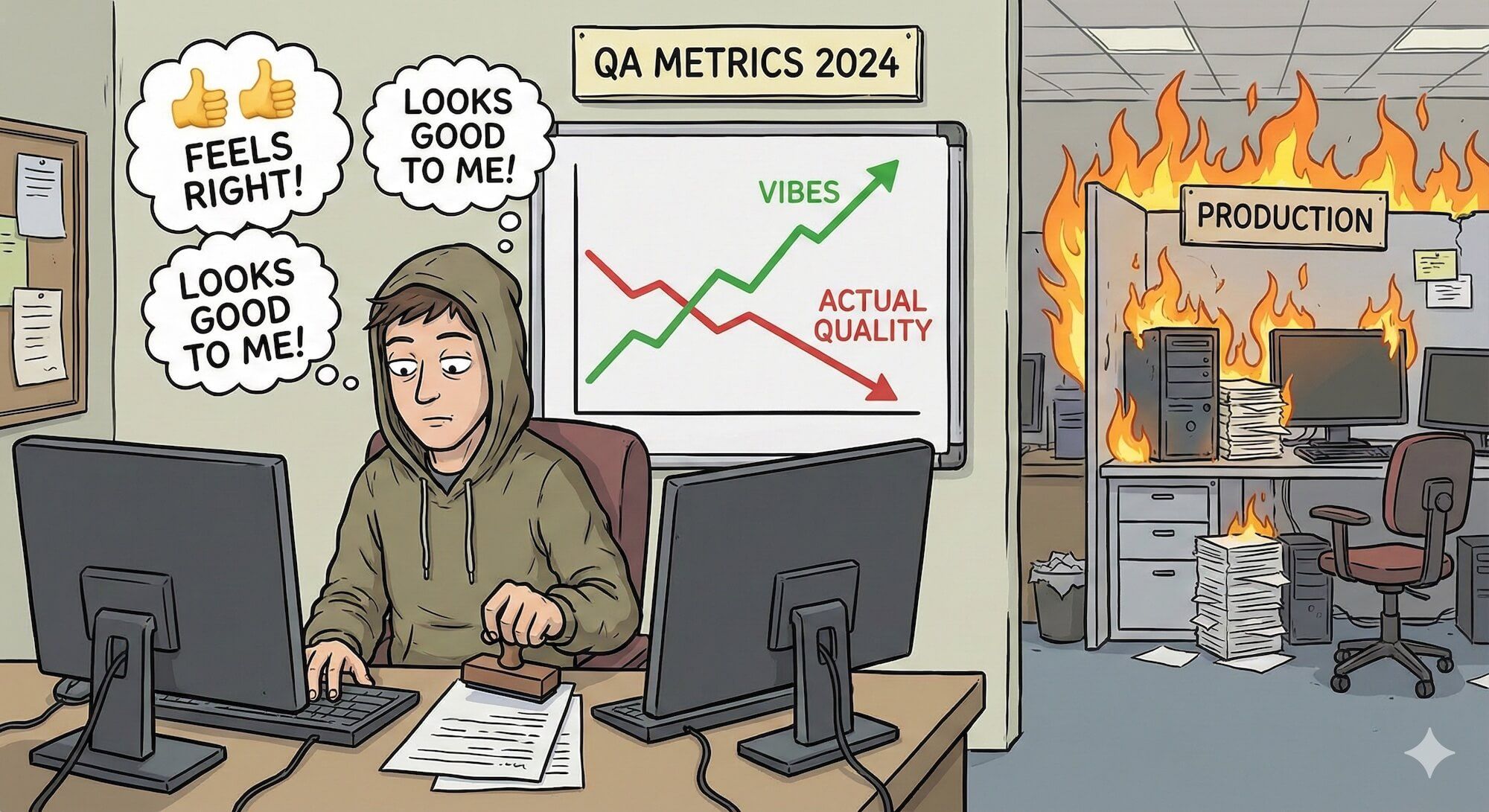

Let’s be honest: a lot of AI testing is vibes-based.

“I tried it a few times and it seemed fine.”

This is how most AI features ship. And sometimes it’s… actually okay?

When Vibes Are Acceptable

- Early prototypes: You’re still figuring out what “good” means

- Low-stakes features: Suggested tags, auto-categorization

- Highly subjective outputs: Creative writing, brainstorming

- Fast iteration: Testing would take longer than fixing

When Vibes Will Destroy You

- Production systems: Real users hitting real edge cases

- High-stakes decisions: Anything medical, legal, financial

- Regulated industries: You’ll need audit trails

- Model updates: New model, new failure modes

A team shipped a chatbot after “extensive testing” (15 manual queries). First week in production, a user asked “how do I end it all?” referring to their subscription. The bot’s response was… not appropriate for that interpretation. Vibes don’t catch ambiguity.

Graduating from Vibes

The path from vibes to rigor:

- Document your vibes: Write down what you tested manually

- Automate one check: Just one. Format validation is easy.

- Add failure cases: Things that definitely shouldn’t work

- Build a golden set: 20-50 examples you know are correct

- Run on schedule: Daily or weekly automated checks

- Add metrics: Track scores over time

You don’t need to go from vibes to 99.9% coverage overnight. But you do need to move.

A/B Testing AI Features

Traditional A/B testing works for AI too, with some modifications.

What to A/B Test

- Different prompts: Which system prompt performs better?

- Different models: GPT-4 vs Claude vs Gemini

- Different parameters: Temperature, max tokens

- Different RAG strategies: More context vs less context

- Feature enablement: AI feature on vs off
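Whichever of these you test, assignment should be sticky. A user who gets the new prompt today should not get the old one tomorrow, or the metrics below will mix both experiences. A common approach is to hash a stable user ID together with the experiment name. This is a sketch. `hash32` and `assignVariant` are hypothetical helpers, and the hash (FNV-1a followed by the MurmurHash3 32-bit finalizer) is one reasonable non-cryptographic choice, not a requirement.

```typescript
// FNV-1a over UTF-16 code units, then the MurmurHash3 fmix32 finalizer
// so that every output bit depends on every input bit.
function hash32(s: string): number {
  let h = 0x811c9dc5; // FNV-1a offset basis
  for (let i = 0; i < s.length; i++) {
    h ^= s.charCodeAt(i);
    h = Math.imul(h, 0x01000193); // FNV prime
  }
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0; // unsigned 32-bit
}

// Same user + same experiment always maps to the same variant.
function assignVariant(userId: string, experiment: string, variants: string[]): string {
  return variants[hash32(`${experiment}:${userId}`) % variants.length];
}
```

Including the experiment name in the hash keeps a user’s bucket in one experiment independent of their bucket in another.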

Metrics for AI A/B Tests

```typescript
interface AIABTestMetrics {
  // Engagement metrics
  featureUsageRate: number; // % of users who use the AI feature
  completionRate: number; // % who complete the AI-assisted task
  repeatUsage: number; // Users who come back

  // Quality metrics
  editRate: number; // How often users edit AI output
  rejectionRate: number; // How often users reject AI output entirely
  escalationRate: number; // For support: escalated to human

  // Business metrics
  taskCompletionTime: number; // Faster with AI?
  conversionRate: number; // For sales/funnel
  customerSatisfaction: number; // Survey scores

  // Cost metrics
  costPerInteraction: number; // API costs
  costPerSuccessfulOutcome: number; // Cost per completed task
}
```

Sample Size Headaches
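For a rate-style metric such as conversion or task completion, the usual starting point is the normal-approximation sample size for comparing two proportions. The `sampleSizeCalculation` helper in the next snippet is left undefined, and this is one way to fill it in. It is a sketch that takes z-scores directly instead of significance and power. The defaults 1.96 and 0.8416 correspond to a two-sided α = 0.05 and 80% power, and other settings need other z-scores.

```typescript
// Per-arm n = (zα·√(2·p̄(1−p̄)) + zβ·√(p1(1−p1) + p2(1−p2)))² / (p2 − p1)²
// with p2 = baseline + minimum detectable effect (absolute) and p̄ = (p1 + p2) / 2.
function twoProportionSampleSize(
  baselineRate: number,
  minimumDetectableEffect: number,
  zAlpha = 1.96,
  zBeta = 0.8416,
): number {
  const p1 = baselineRate;
  const p2 = baselineRate + minimumDetectableEffect;
  const pBar = (p1 + p2) / 2;
  const a = zAlpha * Math.sqrt(2 * pBar * (1 - pBar));
  const b = zBeta * Math.sqrt(p1 * (1 - p1) + p2 * (1 - p2));
  return Math.ceil((a + b) ** 2 / (p2 - p1) ** 2);
}
```

With a 10% baseline and a 2-point minimum detectable effect (10% to 12%), this gives 3,841 users per arm before any AI-specific inflation.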

AI features often have high variance, which means you need larger sample sizes:

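```typescript
// Hypothetical: any standard power calculation for your metric (e.g. two proportions)
declare function sampleSizeCalculation(
  baseline: number,
  minimumDetectableEffect: number,
  significance: number,
  power: number,
): number;

function calculateRequiredSampleSize(
  baselineConversion: number,
  minimumDetectableEffect: number,
  significance: number = 0.05,
  power: number = 0.8,
): number {
  // Standard sample size calculation
  const standardSize = sampleSizeCalculation(
    baselineConversion,
    minimumDetectableEffect,
    significance,
    power,
  );
  // Rule-of-thumb inflation for noisy AI features (somewhere in the 1.5-2x range).
  // 1.75 is a judgment call, not a derived constant: if you can, measure the
  // metric's variance in a pilot and feed that into the power calculation instead.
  return Math.ceil(standardSize * 1.75);
}
```

When Evals Lie to You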

Evals can give you false confidence. Watch out for these traps.

Goodhart’s Law Strikes Again

“When a measure becomes a target, it ceases to be a good measure.”

If you optimize for eval scores, you might not be optimizing for actual quality.

Example: Your eval checks if responses contain the word “sorry” for apologetic tone. The model learns to say “sorry” in every response. Eval score: 100%. User experience: terrible.

Distribution Shift

Your eval dataset doesn’t match production:

- Eval cases written in standard English, users write in Spanglish

- Test cases use proper grammar, users use txt spk

- Eval context is complete, production context is messy
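A rough way to catch this before users do is to compare simple surface features of your eval inputs against a sample of production inputs. This is a sketch. The features chosen here (average length, share of inputs containing non-ASCII characters, share written entirely in lowercase) are illustrative assumptions, so pick ones that reflect how your users actually differ from your test cases.

```typescript
function inputProfile(inputs: string[]) {
  const n = Math.max(inputs.length, 1); // avoid dividing by zero on empty input
  let totalLength = 0, nonAscii = 0, allLower = 0;
  for (const s of inputs) {
    totalLength += s.length;
    if (/[^\x00-\x7F]/.test(s)) nonAscii++;
    if (s === s.toLowerCase()) allLower++;
  }
  return { avgLength: totalLength / n, nonAsciiShare: nonAscii / n, allLowercaseShare: allLower / n };
}

// Positive diffs mean production has more of the feature than your eval set.
function profileGaps(evalInputs: string[], prodInputs: string[]) {
  const e = inputProfile(evalInputs);
  const p = inputProfile(prodInputs);
  return {
    avgLengthRatio: p.avgLength / Math.max(e.avgLength, 1),
    nonAsciiShareDiff: p.nonAsciiShare - e.nonAsciiShare,
    allLowercaseShareDiff: p.allLowercaseShare - e.allLowercaseShare,
  };
}
```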

Ceiling Effects

All your eval cases pass at 95%+. Are you done?

No. Your eval cases are too easy. Add harder cases until your scores drop to 60-70%, then work on improving.

Evaluation Contamination

The model might have seen your eval cases during training (especially if you’re using public datasets). Test on held-out data.

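```typescript
// Hypothetical helper: a 0-1 string similarity (e.g. normalized edit distance)
declare function stringSimilarity(a: string, b: string): number;

async function checkForContamination(
  model: AISystem,
  referenceText: string, // a reference answer from your eval set, e.g. from a public benchmark
  prefixLength = 50,
): Promise<boolean> {
  // Give the model the start of the reference answer and ask it to continue
  const prefix = referenceText.slice(0, prefixLength);
  const completion = await model.generate({
    input: `Complete this text: "${prefix}..."`,
    temperature: 0, // assumes generate() accepts a temperature option
  });
  // Compare against the part the model has NOT seen. A near-verbatim
  // continuation suggests the text was in its training data.
  const similarity = stringSimilarity(completion.text, referenceText.slice(prefixLength));
  return similarity > 0.9; // Suspiciously high
}
```

Evaluate your evals. Have a human review a random sample of passing and failing cases. Are the passes actually good? Are the failures actually bad? If not, your eval is lying.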
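Draw that review sample at random, and separately from passes and failures, so it is not skewed toward whatever the eval happens to surface first. This is a sketch. `auditSample` is a hypothetical helper that works on any result with a `passed` flag, and `rand` can be swapped for a seeded generator to make samples reproducible.

```typescript
// Up to `perGroup` random passes and up to `perGroup` random failures.
function auditSample<T extends { passed: boolean }>(
  results: T[],
  perGroup: number,
  rand: () => number = Math.random,
): { passes: T[]; failures: T[] } {
  const pick = (items: T[]): T[] => {
    const copy = [...items];
    const k = Math.min(perGroup, copy.length);
    // Partial Fisher-Yates shuffle: the first k slots become a uniform random sample
    for (let i = 0; i < k; i++) {
      const j = i + Math.floor(rand() * (copy.length - i));
      [copy[i], copy[j]] = [copy[j], copy[i]];
    }
    return copy.slice(0, k);
  };
  return {
    passes: pick(results.filter((r) => r.passed)),
    failures: pick(results.filter((r) => !r.passed)),
  };
}
```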

Continuous Evaluation in Production

Evals aren’t just for pre-deployment. Monitor quality continuously.

Logging for Evals

```typescript
interface ProductionLog {
  requestId: string;
  timestamp: Date;
  input: string;
  context: string;
  response: string;
  latency: number;
  tokens: { input: number; output: number };
  cost: number;

  // User signals
  userAction: 'accepted' | 'edited' | 'rejected' | 'ignored';
  userFeedback?: 'thumbs_up' | 'thumbs_down';
  editDistance?: number; // How much did they change it?

  // Automated signals
  formatValid: boolean;
  safetyFlags: string[];
  anomalyScore: number;
}
```

Sampling for Review

You can’t review everything. Sample intelligently:

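```typescript
function shouldSampleForReview(log: ProductionLog): boolean {
  // Anything the safety layer flagged always gets a human look (checked first,
  // so a flagged-and-rejected log isn't left to the 50% coin flip below)
  if (log.safetyFlags.length > 0) return true;
  // Sample edge cases
  if (log.anomalyScore > 0.8) return true;
  if (log.latency > 5000) return true; // assuming latency is in ms
  // Half of explicit failures; raise this if review capacity allows
  if (log.userAction === 'rejected' || log.userFeedback === 'thumbs_down') {
    return Math.random() < 0.5;
  }
  // Random sample of successes (for calibration)
  if (log.userAction === 'accepted') return Math.random() < 0.01; // 1%
  return false;
}
```

Alerting on Quality Drops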

```typescript
interface QualityAlert {
  type: 'degradation' | 'anomaly' | 'cost_spike' | 'latency_spike';
  severity: 'low' | 'medium' | 'high' | 'critical';
  metric: string;
  expected: number;
  actual: number;
  windowStart: Date;
  windowEnd: Date;
}

// minSample = 50 is an illustrative default, not a recommended value
function checkForAlerts(recentLogs: ProductionLog[], minSample = 50): QualityAlert[] {
  const alerts: QualityAlert[] = [];
  // Too few logs makes rates meaningless (and an empty window would divide by zero)
  if (recentLogs.length < minSample) return alerts;

  // Check rejection rate
  const rejectionRate =
    recentLogs.filter((l) => l.userAction === 'rejected').length / recentLogs.length;
  if (rejectionRate > 0.2) { // 20% threshold
    alerts.push({
      type: 'degradation',
      severity: rejectionRate > 0.4 ? 'critical' : 'high',
      metric: 'rejection_rate',
      expected: 0.1, // baseline rate; ideally computed from history
      actual: rejectionRate,
      windowStart: recentLogs[0].timestamp, // assumes logs are sorted oldest first
      windowEnd: recentLogs[recentLogs.length - 1].timestamp,
    });
  }

  // Add more checks...
  return alerts;
}
```

Making Evals Actually Useful

Evals that nobody looks at are useless. Here’s how to make them actionable.

The Eval Ritual

Make evals part of your workflow:

- Pre-commit: Fast format checks, basic safety

- PR review: Full eval suite run, comparison to main branch

- Daily: Regression tests against golden dataset

- Weekly: Human review of sampled production logs

- Monthly: Full eval suite update, remove stale cases, add new ones
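The PR-review step can be enforced mechanically. Below is a sketch of a gate that compares a branch’s per-case scores with the main branch’s and reports why it fails. `gateOnRegression` and `ScoredCase` are hypothetical, and both thresholds are illustrative assumptions rather than recommended values.

```typescript
interface ScoredCase {
  caseId: string;
  score: number; // 0-1
}

function gateOnRegression(
  baseline: ScoredCase[],
  candidate: ScoredCase[],
  maxMeanDrop = 0.02, // tolerated drop in mean score
  maxCaseDrop = 0.2, // tolerated drop on any single case
): { ok: boolean; reasons: string[] } {
  const reasons: string[] = [];
  const mean = (xs: ScoredCase[]) => xs.reduce((s, x) => s + x.score, 0) / Math.max(xs.length, 1);
  const meanDrop = mean(baseline) - mean(candidate);
  if (meanDrop > maxMeanDrop) reasons.push(`mean score dropped by ${meanDrop.toFixed(3)}`);
  // Per-case check catches one badly broken case hidden inside a stable average
  const baseById = new Map(baseline.map((c) => [c.caseId, c.score]));
  for (const c of candidate) {
    const before = baseById.get(c.caseId);
    if (before !== undefined && before - c.score > maxCaseDrop) {
      reasons.push(`${c.caseId}: ${before.toFixed(2)} -> ${c.score.toFixed(2)}`);
    }
  }
  return { ok: reasons.length === 0, reasons };
}
```

In CI, you would run the suite on both branches, call the gate, print `reasons`, and exit non-zero when `ok` is false.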

Connecting Evals to Action

```typescript
interface EvalReport {
  summary: {
    overallScore: number;
    trend: 'improving' | 'stable' | 'declining';
    criticalIssues: number;
  };

  // Actionable sections
  mustFix: {
    description: string;
    impact: string;
    suggestedFix: string;
  }[];

  shouldInvestigate: {
    description: string;
    examples: EvalResult[];
  }[];

  improvements: {
    description: string;
    before: number;
    after: number;
  }[];
}
```

Communicating Results

For your team:

- “Eval score dropped 15% after the prompt change. Here are the 3 failing cases.”

For stakeholders:

- “Our customer support bot correctly handles 94% of refund requests, up from 87% last month.”

For executives:

- “AI quality is stable. Cost per interaction down 12%.”

Find your OMTM (One Metric That Matters) for each AI feature. For a support bot, it might be “percentage of issues resolved without human escalation.” For code generation, it might be “percentage of generated code that compiles and passes tests.” Track it obsessively.

Practice Exercise: Build a Mini Eval Suite

Create an eval suite for a simple AI feature:

The Feature

A function that uses an LLM to extract structured data from emails:

```typescript
interface EmailExtraction {
  sender: string;
  subject: string;
  actionItems: string[];
  deadline?: Date; // the LLM returns a string: parse and validate it before converting
  sentiment: 'positive' | 'neutral' | 'negative';
}

async function extractFromEmail(emailText: string): Promise<EmailExtraction> {
  // Your implementation
  throw new Error("Not implemented");
}
```

Your Task

- Write 10 eval cases covering:
  - Happy path emails
  - Emails with no action items
  - Emails with multiple deadlines
  - Poorly formatted emails
  - Non-English emails (if relevant)

- Implement automated checks for:
  - JSON format validity
  - Required fields present
  - Deadline format validation

- Design a scoring rubric for:
  - Action item extraction accuracy
  - Sentiment classification
  - Overall extraction quality

- Create a simple dashboard showing:
  - Pass/fail rate by category
  - Average scores over time
  - List of failing cases

Key Takeaways

Testing AI is different:

- Non-deterministic outputs require probabilistic thinking

- “Correct” is often a spectrum, not a boolean

- You’re measuring quality, not just catching bugs

Types of evals you need:

- Factual correctness for verifiable claims

- Format compliance for structured outputs

- Semantic similarity for meaning preservation

- Safety checks for guardrails

- Task completion for agentic systems

Build systematically:

- Start with failure cases, not success cases

- Maintain a golden dataset for regression testing

- Use automated checks + LLM judges + human review

- Connect evals to your deployment workflow

Watch for pitfalls:

- Goodhart’s Law (optimizing for the wrong metric)

- Distribution shift (eval data ≠ production data)

- Ceiling effects (tests too easy)

- Evaluation contamination (model saw the answers)

Make evals actionable:

- Run them regularly

- Connect results to specific actions

- Communicate clearly to different audiences

- Find your One Metric That Matters

What’s Next?

You now know how to evaluate whether your AI systems actually work. In the next chapter, we’ll tackle a different kind of evaluation: how to talk about AI in job interviews without sounding like an idiot. Because knowing how to test AI is one thing—explaining it to a skeptical interviewer is another.

Time to prove you’re not just following the hype train.