Agentic Workflows with PocketFlow

We spent three months learning LangChain. Then they released a new version and broke everything. Then we spent another month learning the new API. Then they deprecated that too.

Table of Contents

- The Framework Problem

- What PocketFlow Actually Is

- The Core Abstraction: Nodes

- Connecting Nodes: Flows

- Building an Agent: The Loop Pattern

- Batch Processing: When You Have Many Items

- RAG with PocketFlow

- When to Use What

- The Migration Path

- What You’ve Learned

The Framework Problem

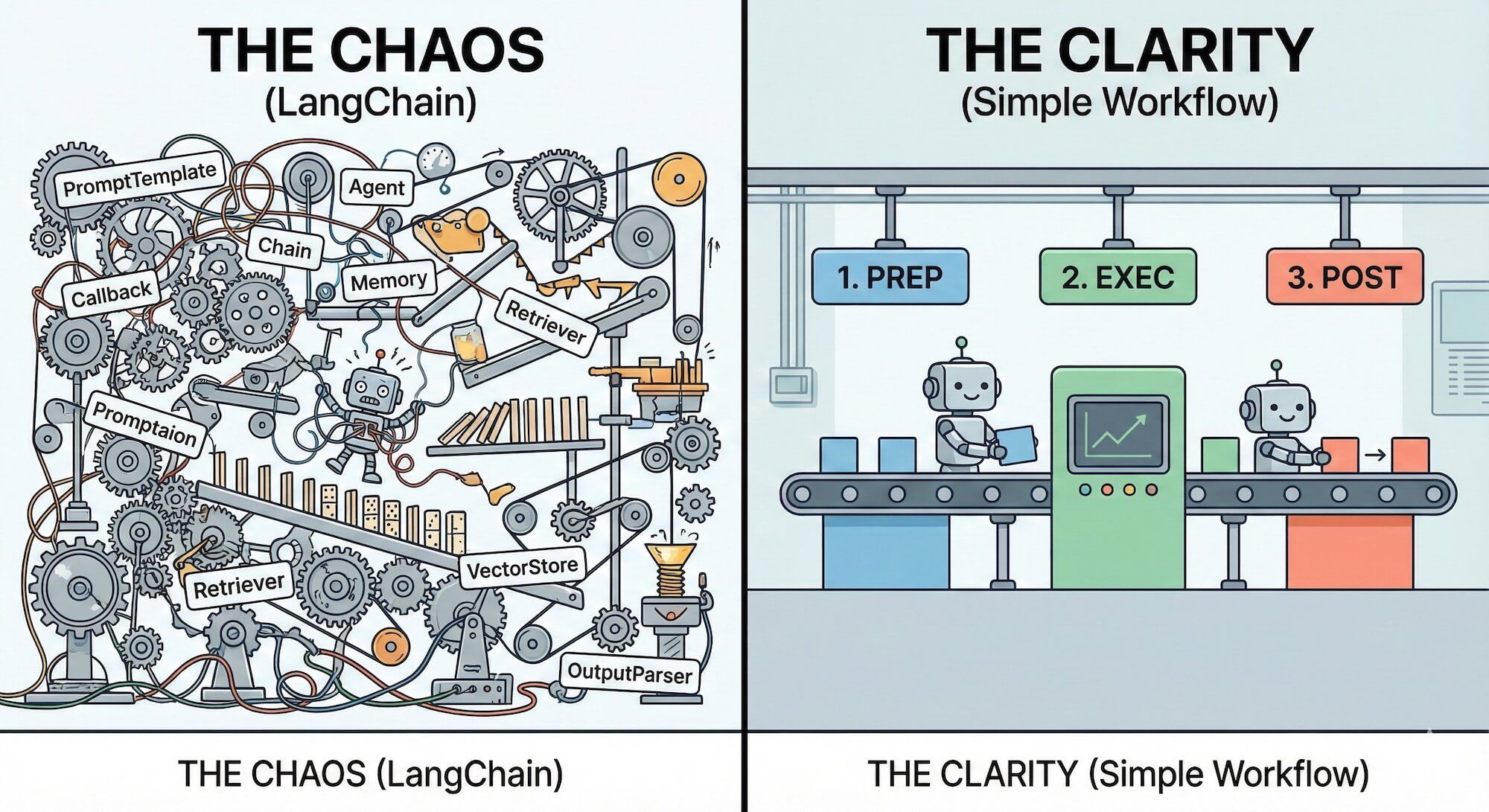

Let’s talk about the elephant in the room: AI frameworks.

You’ve probably heard of LangChain. Maybe LlamaIndex. Perhaps you’ve seen n8n or Flowise for visual workflow building. They all promise to make AI development easier.

Here’s what actually happens:

Week 1: “This is amazing! I built a chatbot in 20 lines!”

Week 2: “Why is there a ConversationalRetrievalChain AND a ConversationalChain AND a RetrievalQA? What’s the difference?”

Week 3: “The docs say to use AgentExecutor but Stack Overflow says to use create_react_agent. Neither works.”

Week 4: “I’ve written 500 lines of code to work around framework limitations. Maybe I should have just written 500 lines without the framework.”

Every framework adds abstractions. Abstractions have costs: learning time, debugging difficulty, version churn, and lock-in. The question isn’t “does this framework help?” It’s “does it help more than it costs?”

The Problems with Heavy Frameworks

1. Vendor Lock-in

LangChain has its own way of doing everything. LlamaIndex has another. Once you’re deep into either, switching costs months.

2. Abstraction Leakage

When something breaks (and it will), you need to understand both your code AND the framework’s internals. Good luck debugging why ConversationBufferMemory isn’t working when you don’t know what it’s actually doing.

3. Version Instability

LangChain has released breaking changes roughly every 3-4 months since launch. Your code from six months ago probably doesn’t work anymore.

4. Unnecessary Complexity

Most AI applications need: call an LLM, maybe do some retrieval, handle retries. That’s it. You don’t need 50,000 lines of framework for that.

What PocketFlow Actually Is

PocketFlow is the opposite of LangChain. It’s ~100 lines of code. Zero dependencies. Zero magic.

Here’s the entire mental model:

- Node: Does one thing. Has three methods: prep(), exec(), post().

- Flow: Connects nodes. Runs them in sequence based on actions.

- Shared Store: A plain object that nodes read from and write to.

That’s it. No chains, no agents, no retrievers, no memory classes, no prompt templates, no output parsers. Just nodes, flows, and a shared object.

npm install pocketflow

Or, because it’s ~100 lines, just copy the source code into your project. Seriously.

PocketFlow is MIT licensed and small enough to vendor directly. This means no dependency updates breaking your code, no version conflicts, and complete control. For production systems, this is often the right choice.

The Core Abstraction: Nodes

A Node does one thing in three steps:

import { Node } from 'pocketflow';

// Define your shared state type

type SharedState = {

input?: string;

output?: string;

intent?: string; // written by ClassifyIntent in the branching example later on

};

class MyNode extends Node<SharedState> {

// Step 1: Read from shared state, prepare for execution

async prep(shared: SharedState): Promise<string> {

return shared.input || '';

}

// Step 2: Do the actual work (LLM call, API call, etc.)

async exec(prepResult: string): Promise<string> {

// This is where you'd call your LLM

return `Processed: ${prepResult}`;

}

// Step 3: Write results back, decide what happens next

async post(

shared: SharedState,

prepResult: string,

execResult: string

): Promise<string | undefined> {

shared.output = execResult;

return undefined; // "default" action - continue to next node

}

}

Why Three Steps?

Separation of concerns.

- prep(): Data access. Read from database, files, shared state.

- exec(): Computation. LLM calls, API calls, pure logic.

- post(): Side effects. Write results, decide next action.

This isn’t arbitrary. It makes testing easy (mock exec() without touching data), retries safe (only retry exec()), and debugging clear (which step failed?).
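To see why retrying only exec() is safe, here is a rough sketch of a runner that wraps the three steps. It is not PocketFlow's source: `StepNode`, `execWithRetry`, `runNode`, and `flakyNode` are names invented for this illustration, and the wait between attempts is left out to keep it short.

```typescript
// Illustrative only: a hand-rolled runner, not PocketFlow's actual implementation.
interface StepNode<S, P, E> {
  prep(shared: S): Promise<P>;
  exec(prepResult: P): Promise<E>;
  post(shared: S, prepResult: P, execResult: E): Promise<string | undefined>;
}

// Retry exec up to maxRetries times, rethrowing the last error if every attempt fails.
async function execWithRetry<P, E>(
  exec: (prepResult: P) => Promise<E>,
  prepResult: P,
  maxRetries: number
): Promise<E> {
  let lastError: unknown = new Error('maxRetries must be at least 1');
  for (let attempt = 1; attempt <= maxRetries; attempt++) {
    try {
      return await exec(prepResult);
    } catch (err) {
      lastError = err;
    }
  }
  throw lastError;
}

async function runNode<S, P, E>(
  node: StepNode<S, P, E>,
  shared: S,
  maxRetries: number
): Promise<string | undefined> {
  // prep runs once: shared state is read a single time
  const prepResult = await node.prep(shared);
  // only exec is retried, which is why it should not touch shared state
  const execResult = await execWithRetry((p: P) => node.exec(p), prepResult, maxRetries);
  // post runs once, after exec has succeeded
  return node.post(shared, prepResult, execResult);
}

// A stand-in node whose exec fails twice before succeeding.
type CounterState = { input: string; output?: string };
const calls = { prep: 0, exec: 0, post: 0 };

const flakyNode: StepNode<CounterState, string, string> = {
  async prep(shared) {
    calls.prep++;
    return shared.input;
  },
  async exec(text) {
    calls.exec++;
    if (calls.exec < 3) throw new Error('transient failure');
    return text.toUpperCase();
  },
  async post(shared, _prepResult, result) {
    calls.post++;
    shared.output = result;
    return undefined;
  },
};
```

Running `runNode(flakyNode, { input: 'hello' }, 3)` calls exec three times but prep and post only once each, so shared state is read once and written once no matter how flaky the middle step is.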

A Real Example: Summarization Node

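import Anthropic from '@anthropic-ai/sdk';

const anthropic = new Anthropic(); // reads ANTHROPIC_API_KEY from the environment

type DocState = {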

document: string;

summary?: string;

};

class SummarizeDocument extends Node<DocState> {

async prep(shared: DocState): Promise<string> {

return shared.document;

}

async exec(document: string): Promise<string> {

const response = await anthropic.messages.create({

model: 'claude-sonnet-4-20250514',

max_tokens: 500,

messages: [{

role: 'user',

content: `Summarize this document in 3 sentences:\n\n${document}`

}]

});

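// Content blocks are a union type, so check for a text block before reading .text

const block = response.content[0];

return block.type === 'text' ? block.text : '';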

}

async post(shared: DocState, _prep: string, summary: string): Promise<undefined> {

shared.summary = summary;

return undefined;

}

}

// Use it

const node = new SummarizeDocument();

const shared: DocState = { document: 'Long document text...' };

await node.run(shared);

console.log(shared.summary);

Built-in Retries and Fallbacks

Nodes handle failures gracefully:

class ReliableNode extends Node<SharedState> {

constructor() {

super(

3, // maxRetries: try up to 3 times

0.5 // wait: 0.5 seconds between retries

);

}

async prep(shared: SharedState): Promise<string> {

return shared.input || '';

}

async exec(input: string): Promise<string> {

// If this throws, it will retry automatically

return await callFlakeyAPI(input);

}

async execFallback(input: string, error: Error): Promise<string> {

// If all retries fail, this runs instead

console.error('All retries failed:', error);

return 'Default response when everything fails';

}

}

Connecting Nodes: Flows

Single nodes are useful. Connected nodes are powerful.

import { Node, Flow } from 'pocketflow';

// Node A: Get user input

class GetInput extends Node<SharedState> {

async post(shared: SharedState): Promise<string> {

shared.input = 'What is the capital of France?';

return 'default'; // Go to the next node

}

}

// Node B: Process with LLM

class ProcessWithLLM extends Node<SharedState> {

async prep(shared: SharedState): Promise<string> {

return shared.input || '';

}

async exec(question: string): Promise<string> {

return await callLLM(question);

}

async post(shared: SharedState, _: string, answer: string): Promise<undefined> {

shared.output = answer;

return undefined; // End the flow

}

}

// Connect them

const getInput = new GetInput();

const processLLM = new ProcessWithLLM();

getInput.next(processLLM); // default action goes to processLLM

// Create and run the flow

const flow = new Flow(getInput);

const shared: SharedState = {};

await flow.run(shared);

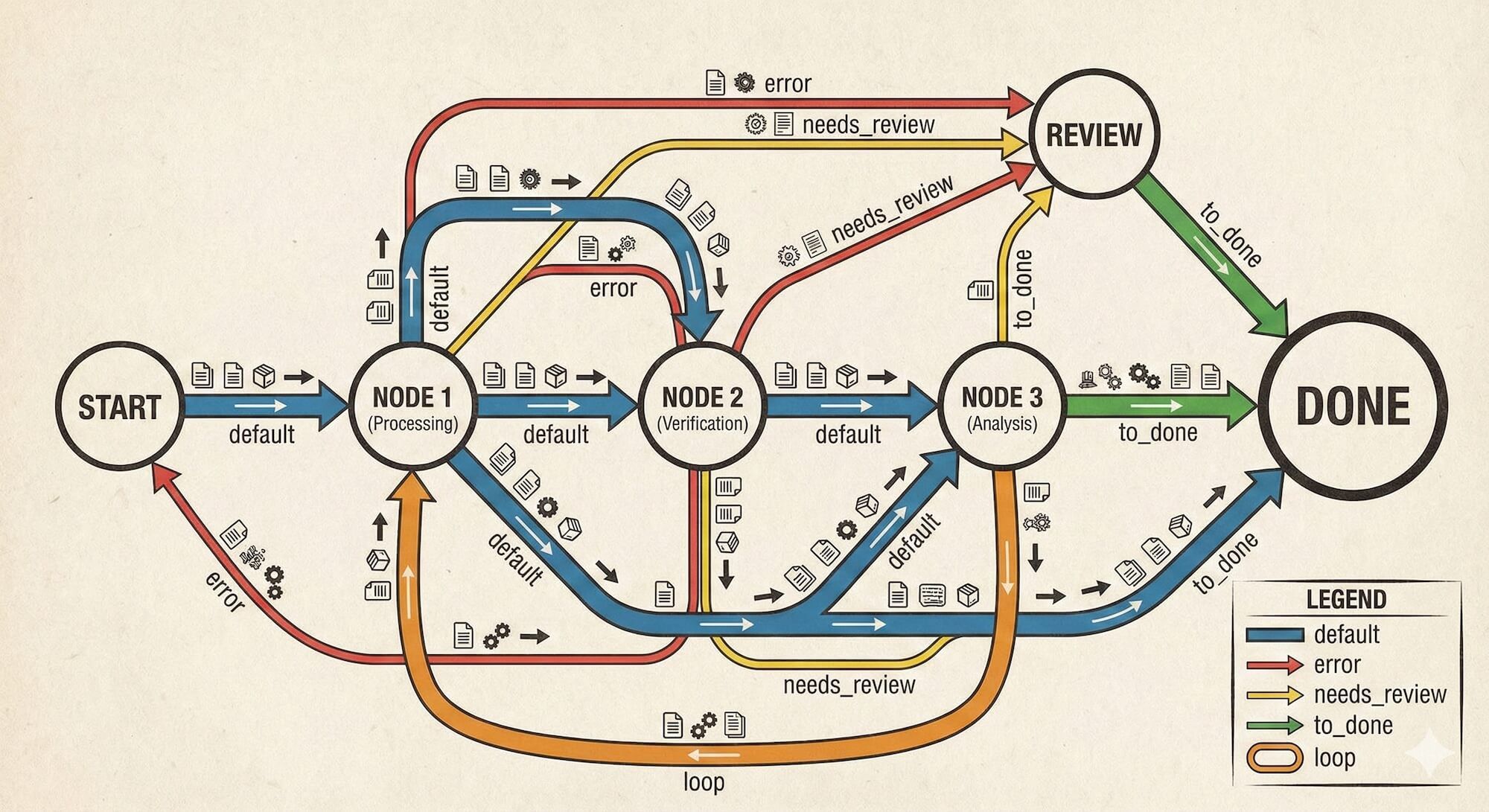

console.log(shared.output); // "The capital of France is Paris."Branching Based on Actions

The real power comes from conditional branching:

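class ClassifyIntent extends Node<SharedState> {

// Without prep(), exec() would have no input to classify

async prep(shared: SharedState): Promise<string> {

return shared.input || '';

}

async exec(input: string): Promise<string> {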

const response = await callLLM(

`Classify this as "question" or "command": ${input}`

);

return response.toLowerCase().includes('question') ? 'question' : 'command';

}

async post(shared: SharedState, _: string, intent: string): Promise<string> {

shared.intent = intent;

return intent; // Return "question" or "command" as the action

}

}

class HandleQuestion extends Node<SharedState> { /* ... */ }

class HandleCommand extends Node<SharedState> { /* ... */ }

// Set up branching

const classify = new ClassifyIntent();

const questionHandler = new HandleQuestion();

const commandHandler = new HandleCommand();

classify.on('question', questionHandler);

classify.on('command', commandHandler);

const flow = new Flow(classify);

The action returned by post() determines which node runs next. Return "default" (or undefined) for the normal path. Return any other string to branch. This is the entire routing system. No routers, no chains, no decision trees. Just strings.
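If string-based routing still feels abstract, here is a toy version of the idea. Treat it as a sketch rather than PocketFlow's real code: `RouteNode`, `runFlow`, and the three demo nodes are made-up names, and everything is kept synchronous to stay short (the library's own methods are async).

```typescript
// Illustrative only: a toy router, not PocketFlow's actual implementation.
type Handler<S> = (shared: S) => string | undefined;

class RouteNode<S> {
  readonly label: string;
  private readonly handler: Handler<S>;
  private readonly successors = new Map<string, RouteNode<S>>();

  constructor(label: string, handler: Handler<S>) {
    this.label = label;
    this.handler = handler;
  }

  // Register which node follows a given action string.
  on(action: string, nextNode: RouteNode<S>): this {
    this.successors.set(action, nextNode);
    return this;
  }

  // Run this node, then look up the successor for the action it returned.
  step(shared: S): RouteNode<S> | undefined {
    const action = this.handler(shared) ?? 'default';
    return this.successors.get(action);
  }
}

// Walk the graph until a node returns an action with no registered successor.
function runFlow<S>(start: RouteNode<S>, shared: S): string[] {
  const visited: string[] = [];
  let current: RouteNode<S> | undefined = start;
  while (current) {
    visited.push(current.label);
    current = current.step(shared);
  }
  return visited;
}

type IntentState = { input: string; reply?: string };

// A crude stand-in for the LLM classifier: questions end with "?".
const classifyNode = new RouteNode<IntentState>('classify', (s) =>
  s.input.trim().endsWith('?') ? 'question' : 'command'
);
const questionNode = new RouteNode<IntentState>('question', (s) => {
  s.reply = 'answering';
  return undefined;
});
const commandNode = new RouteNode<IntentState>('command', (s) => {
  s.reply = 'executing';
  return undefined;
});

classifyNode.on('question', questionNode).on('command', commandNode);
```

The whole router is a Map lookup: the string a node returns is the key, and a missing key ends the flow.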

Building an Agent: The Loop Pattern

Remember agents from Chapter 08? Here’s how simple they are in PocketFlow:

type AgentState = {

query: string;

context: Array<{ term: string; result: string }>;

searchTerm?: string;

answer?: string;

};

// Node 1: Decide what to do

class DecideAction extends Node<AgentState> {

async prep(shared: AgentState): Promise<[string, string]> {

return [

shared.query,

JSON.stringify(shared.context || [])

];

}

async exec([query, context]: [string, string]): Promise<{action: string; search_term?: string}> {

const response = await callLLM(`

Query: ${query}

Context: ${context}

Decide: Should I "search" for more info or "answer" directly?

If search, what term?

Respond as JSON: {"action": "search"|"answer", "search_term": "..."}

`);

return JSON.parse(response);

}

async post(

shared: AgentState,

_: [string, string],

result: {action: string; search_term?: string}

): Promise<string> {

if (result.action === 'search') {

shared.searchTerm = result.search_term;

}

return result.action; // "search" or "answer"

}

}

// Node 2: Search the web

class SearchWeb extends Node<AgentState> {

async prep(shared: AgentState): Promise<string> {

return shared.searchTerm || '';

}

async exec(term: string): Promise<string> {

return await searchAPI(term);

}

async post(shared: AgentState, term: string, result: string): Promise<string> {

shared.context.push({ term, result });

return 'decide'; // Loop back to decide

}

}

// Node 3: Give final answer

class GiveAnswer extends Node<AgentState> {

async prep(shared: AgentState): Promise<[string, string]> {

return [shared.query, JSON.stringify(shared.context)];

}

async exec([query, context]: [string, string]): Promise<string> {

return await callLLM(`Based on: ${context}\nAnswer: ${query}`);

}

async post(shared: AgentState, _: any, answer: string): Promise<undefined> {

shared.answer = answer;

return undefined; // End

}

}

// Wire it up

const decide = new DecideAction();

const search = new SearchWeb();

const answer = new GiveAnswer();

decide.on('search', search);

decide.on('answer', answer);

search.on('decide', decide); // The loop!

const agent = new Flow(decide);

await agent.run({

query: 'Who won the Nobel Prize in Physics 2024?',

context: []

});

That’s a complete agent. No AgentExecutor, no create_react_agent, no ZeroShotAgent. Just nodes and actions.
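One thing the loop leaves open is what happens when the model returns something that isn't valid JSON, or keeps asking to search forever. A minimal sketch of two guards follows. `parseDecision`, `nextAction`, and the idea of capping searches are hypothetical additions for illustration, not part of PocketFlow.

```typescript
// Hypothetical helpers, not part of PocketFlow.
type Decision = { action: 'search' | 'answer'; search_term?: string };

// Parse the model's reply; fall back to "answer" if it isn't the JSON we asked for.
function parseDecision(raw: string): Decision {
  try {
    const parsed: unknown = JSON.parse(raw);
    if (typeof parsed === 'object' && parsed !== null) {
      const candidate = parsed as { action?: unknown; search_term?: unknown };
      if (candidate.action === 'search' && typeof candidate.search_term === 'string') {
        return { action: 'search', search_term: candidate.search_term };
      }
    }
  } catch {
    // Malformed JSON: fall through to the default below.
  }
  return { action: 'answer' };
}

// Allow a search only while the agent is under its search budget.
function nextAction(
  decision: Decision,
  searchesSoFar: number,
  maxSearches: number
): 'search' | 'answer' {
  return decision.action === 'search' && searchesSoFar < maxSearches ? 'search' : 'answer';
}
```

In DecideAction, exec() could return `parseDecision(response)` and post() could return `nextAction(result, shared.context.length, 3)`, so the loop always ends after at most three searches.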

Batch Processing: When You Have Many Items

Processing multiple items? Use BatchNode:

import { BatchNode } from 'pocketflow';

type EmbedState = {

documents: string[];

embeddings?: number[][];

};

class EmbedDocuments extends BatchNode<EmbedState> {

// prep returns an ARRAY of items to process

async prep(shared: EmbedState): Promise<string[]> {

return shared.documents;

}

// exec is called ONCE PER ITEM

async exec(document: string): Promise<number[]> {

return await getEmbedding(document);

}

// post receives the ARRAY of all results

async post(

shared: EmbedState,

documents: string[],

embeddings: number[][]

): Promise<undefined> {

shared.embeddings = embeddings;

return undefined;

}

}

const node = new EmbedDocuments();

await node.run({

documents: ['doc1', 'doc2', 'doc3']

});

For parallel processing (faster but uses more resources), use ParallelBatchNode:

import { ParallelBatchNode } from 'pocketflow';

class ParallelEmbedder extends ParallelBatchNode<EmbedState> {

// Same interface, but exec() runs in parallel

async prep(shared: EmbedState): Promise<string[]> {

return shared.documents;

}

async exec(document: string): Promise<number[]> {

return await getEmbedding(document);

}

async post(shared: EmbedState, _: string[], embeddings: number[][]): Promise<undefined> {

shared.embeddings = embeddings;

return undefined;

}

}

RAG with PocketFlow

Let’s build a complete RAG system. Remember the portfolio project from Chapter 07? Here’s the PocketFlow version:

Stage 1: Indexing Pipeline

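import { promises as fs } from 'fs';

import OpenAI from 'openai';

import { BatchNode, Flow, Node } from 'pocketflow';

const openai = new OpenAI(); // reads OPENAI_API_KEY from the environment

// chunkText and VectorIndex are your own helpers; PocketFlow does not provide them

type IndexState = {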

files: string[];

chunks?: string[];

embeddings?: number[][];

index?: VectorIndex;

};

// Step 1: Chunk all documents

class ChunkDocuments extends BatchNode<IndexState> {

async prep(shared: IndexState): Promise<string[]> {

return shared.files;

}

async exec(filepath: string): Promise<string[]> {

const content = await fs.readFile(filepath, 'utf-8');

return chunkText(content, { size: 500, overlap: 50 });

}

async post(shared: IndexState, _: string[], chunkArrays: string[][]): Promise<undefined> {

shared.chunks = chunkArrays.flat();

return undefined;

}

}

// Step 2: Embed all chunks

class EmbedChunks extends BatchNode<IndexState> {

async prep(shared: IndexState): Promise<string[]> {

return shared.chunks || [];

}

async exec(chunk: string): Promise<number[]> {

return await openai.embeddings.create({

model: 'text-embedding-3-small',

input: chunk

}).then(r => r.data[0].embedding);

}

async post(shared: IndexState, _: string[], embeddings: number[][]): Promise<undefined> {

shared.embeddings = embeddings;

return undefined;

}

}

// Step 3: Store in vector database

class StoreIndex extends Node<IndexState> {

async prep(shared: IndexState): Promise<{ chunks: string[]; embeddings: number[][] }> {

return {

chunks: shared.chunks || [],

embeddings: shared.embeddings || []

};

}

async exec(data: { chunks: string[]; embeddings: number[][] }): Promise<VectorIndex> {

const index = new VectorIndex();

for (let i = 0; i < data.chunks.length; i++) {

index.add(data.chunks[i], data.embeddings[i]);

}

return index;

}

async post(shared: IndexState, _: any, index: VectorIndex): Promise<undefined> {

shared.index = index;

return undefined;

}

}

// Connect the indexing pipeline

const chunk = new ChunkDocuments();

const embed = new EmbedChunks();

const store = new StoreIndex();

chunk.next(embed).next(store);

const indexingPipeline = new Flow(chunk);

Stage 2: Query Pipeline

type QueryState = {

index: VectorIndex;

question: string;

context?: string[];

answer?: string;

};

// Step 1: Retrieve relevant chunks

class RetrieveContext extends Node<QueryState> {

async prep(shared: QueryState): Promise<{ index: VectorIndex; question: string }> {

return { index: shared.index, question: shared.question };

}

async exec(data: { index: VectorIndex; question: string }): Promise<string[]> {

const questionEmbedding = await getEmbedding(data.question);

return data.index.search(questionEmbedding, { limit: 5 });

}

async post(shared: QueryState, _: any, context: string[]): Promise<undefined> {

shared.context = context;

return undefined;

}

}

// Step 2: Generate answer

class GenerateAnswer extends Node<QueryState> {

async prep(shared: QueryState): Promise<{ question: string; context: string[] }> {

return { question: shared.question, context: shared.context || [] };

}

async exec(data: { question: string; context: string[] }): Promise<string> {

return await anthropic.messages.create({

model: 'claude-sonnet-4-20250514',

max_tokens: 1000,

messages: [{

role: 'user',

content: `Context:\n${data.context.join('\n\n')}\n\nQuestion: ${data.question}`

}]

}).then(r => { const block = r.content[0]; return block.type === 'text' ? block.text : ''; });

}

async post(shared: QueryState, _: any, answer: string): Promise<undefined> {

shared.answer = answer;

return undefined;

}

}

// Connect query pipeline

const retrieve = new RetrieveContext();

const generate = new GenerateAnswer();

retrieve.next(generate);

const queryPipeline = new Flow(retrieve);

A developer told me they spent 3 weeks learning LangChain’s retrieval abstractions. Then they rewrote it in PocketFlow in an afternoon. “The PocketFlow version was 80 lines. The LangChain version was 200 lines and I still didn’t understand what half of it did.”
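Both pipelines lean on a `VectorIndex` and a `chunkText` helper that this chapter never defines. Here is one way they might look, as an in-memory sketch. Everything in it is an assumption: the method shapes are inferred from how the pipelines call them, the search uses cosine similarity, and `size` and `overlap` are treated as character counts.

```typescript
// Hypothetical stand-ins for the helpers the pipelines call; not a real library.
class VectorIndex {
  private entries: Array<{ text: string; vector: number[] }> = [];

  add(text: string, vector: number[]): void {
    this.entries.push({ text, vector });
  }

  // Return the texts of the `limit` entries most similar to the query vector.
  search(queryVector: number[], options: { limit: number }): string[] {
    return this.entries
      .map((entry) => ({
        text: entry.text,
        score: cosineSimilarity(queryVector, entry.vector),
      }))
      .sort((a, b) => b.score - a.score)
      .slice(0, options.limit)
      .map((scored) => scored.text);
  }
}

function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  const denominator = Math.sqrt(normA) * Math.sqrt(normB);
  return denominator === 0 ? 0 : dot / denominator;
}

// Split text into windows of `size` characters, each sharing `overlap` characters with the previous one.
function chunkText(text: string, options: { size: number; overlap: number }): string[] {
  const step = options.size - options.overlap;
  if (step <= 0) throw new Error('overlap must be smaller than size');
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + options.size));
    if (start + options.size >= text.length) break; // the last window reached the end
  }
  return chunks;
}
```

A linear scan like this is fine for a few thousand chunks. Past that you would likely swap in a real vector store, and the nodes would not need to change as long as `add` and `search` keep the same shape.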

When to Use What

| Use Case | PocketFlow | LangChain/LlamaIndex | n8n/Flowise |
|---|---|---|---|
| Simple chatbot | ✅ Overkill but works | ✅ Fine | ✅ Visual is nice |
| RAG system | ✅ Clean and clear | ✅ More boilerplate | ⚠️ Limited customization |
| Custom agent | ✅ Full control | ⚠️ Fight the framework | ❌ Too rigid |
| Production system | ✅ No surprises | ⚠️ Version churn | ⚠️ Hard to test |
| Team project | ✅ Anyone can understand | ⚠️ Framework expertise needed | ⚠️ Visual doesn’t scale |
| Prototyping | ⚠️ More initial code | ✅ Faster start | ✅ Fastest start |

Use PocketFlow When:

- You need full control over the execution flow

- You’re building for production and hate surprises

- Your team doesn’t want to learn a massive framework

- You’ve been burned by breaking changes before

- You want to understand every line of your AI system

Maybe Use LangChain/LlamaIndex When:

- You’re prototyping and will rewrite later anyway

- You need specific integrations they’ve already built

- Your team already knows the framework well

- You’re building something that maps perfectly to their abstractions

Use Visual Tools (n8n, Flowise) When:

- Non-developers need to modify workflows

- You’re building internal tools that change frequently

- The visual representation genuinely helps your team

- You don’t need complex custom logic

The Migration Path

Already using LangChain? Here’s how to migrate:

Step 1: Identify Your Core Flow

What does your LangChain code actually DO? Strip away the abstractions:

# LangChain version (conceptually)

chain = ConversationalRetrievalChain.from_llm(

llm=ChatOpenAI(),

retriever=vectorstore.as_retriever(),

memory=ConversationBufferMemory()

)

response = chain({"question": question})

What’s actually happening:

- Take a question

- Retrieve relevant documents

- Add conversation history

- Call LLM with context

- Store response in memory

Step 2: Write It As Nodes

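// PocketFlow version

// State shape inferred from how the fields are used below

type ConversationState = {

currentQuestion: string;

history: Array<{ human: string; assistant: string }>;

retriever: { search(query: string): Promise<string[]> };

lastAnswer?: string;

};

type AnswerInput = Pick<ConversationState, 'history' | 'retriever'> & { question: string };

class RetrieveAndAnswer extends Node<ConversationState> {

async prep(shared: ConversationState): Promise<AnswerInput> {

return {

question: shared.currentQuestion,

history: shared.history,

retriever: shared.retriever

};

}

async exec(data: AnswerInput): Promise<string> {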

const docs = await data.retriever.search(data.question);

const context = docs.join('\n');

const historyStr = data.history.map(h =>

`Human: ${h.human}\nAssistant: ${h.assistant}`

).join('\n');

return await callLLM(`

History:\n${historyStr}\n

Context:\n${context}\n

Question: ${data.question}

`);

}

async post(shared: ConversationState, _: unknown, answer: string): Promise<undefined> {

shared.history.push({

human: shared.currentQuestion,

assistant: answer

});

shared.lastAnswer = answer;

return undefined;

}

}

Same functionality. No framework magic. You can see exactly what’s happening.

Step 3: Migrate Incrementally

You don’t have to rewrite everything at once:

- Identify the most problematic LangChain code

- Rewrite that piece in PocketFlow

- Have both systems coexist temporarily

- Migrate more pieces as needed
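To make "coexist temporarily" concrete, here is one possible shape for it: a small dispatcher that sends already-migrated features to the new code and everything else to the old code. This is only a sketch. `makeDispatcher`, the feature names, and both stand-in functions are hypothetical; in a real project they would wrap your existing LangChain call and your new PocketFlow flow.

```typescript
// Hypothetical migration shim: names and shapes are illustrative.
type AnswerFn = (question: string) => Promise<string>;

// Route a feature to the migrated implementation once it is listed; otherwise use the legacy one.
function makeDispatcher(
  legacy: AnswerFn,
  migrated: AnswerFn,
  migratedFeatures: ReadonlySet<string>
): (feature: string, question: string) => Promise<string> {
  return (feature, question) =>
    migratedFeatures.has(feature) ? migrated(question) : legacy(question);
}

// Stand-ins for the real implementations.
const legacyChain: AnswerFn = async (question) => `legacy:${question}`;
const pocketflowVersion: AnswerFn = async (question) => `pocketflow:${question}`;

// Only the "faq" feature has been migrated so far.
const routeQuestion = makeDispatcher(legacyChain, pocketflowVersion, new Set(['faq']));
```

Migrating another piece then means adding one name to the set, and rolling back means removing it.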

What You’ve Learned

After this chapter, you can:

- Understand the framework trade-off: Heavy frameworks add abstractions that have costs. Sometimes those costs exceed the benefits.

- Build with PocketFlow’s mental model: Nodes do work in three steps (prep, exec, post). Flows connect nodes via actions. Shared stores pass data.

- Create agents without framework magic: An agent is just a flow with a loop. The “decide” node returns actions that determine the next step.

- Process batches efficiently: BatchNode for sequential processing, ParallelBatchNode for concurrent processing.

- Build complete RAG systems: Indexing and query pipelines are just flows of nodes.

- Know when to use what: PocketFlow for control and production, LangChain for prototypes, visual tools for non-developers.

The meta-lesson: you don’t need 50,000 lines of framework to build AI applications. Sometimes 100 lines and a clear mental model is all you need.

Next chapter: We’ve covered the technical skills. Now let’s talk about the human element—surviving interviews and managing expectations.