The Ralph Wiggum Technique

I set up an autonomous agent loop and went to bed. Eight hours later, I woke up to 47 git commits, a working authentication system, and an $89 API bill. The agent had completed 12 of 15 user stories. It had also, inexplicably, renamed every variable in the codebase to food items. User became 'hamburger.' Password became 'spaghetti.' The tests passed. I couldn't decide if I was impressed or horrified. I kept the food names. They're actually more descriptive.

Table of Contents

- The Problem Ralph Solves

- Why This Works (Against All Logic)

- The Core Idea: Amnesia as a Feature

- How Ralph Actually Works

- The Three Critical Files

- Building Your Own Ralph

- The Brutal Discipline Required

- When Ralph Fails Spectacularly

- Why This Is Better Than You Think

The Problem Ralph Solves

You want to build an entire feature with AI. Not write one function. Not debug one file. Build an actual feature: database migrations, backend logic, frontend UI, tests, the works.

The obvious approach: Start one long agent session and let it build everything.

What actually happens:

- Context window fills up after implementing 3 out of 15 user stories

- Agent is mid-implementation when it hits token limit

- Next session starts with broken half-implemented code

- Agent tries to figure out what previous agent was doing

- Makes bad assumptions

- Breaks working code trying to continue from broken state

- You spend 2 hours cleaning up the mess

The Ralph approach: Completely different philosophy.

Instead of one long session, Ralph runs the agent in a loop. Each iteration is a completely fresh agent instance. Clean slate. No memory. Tabula rasa.

“But wait,” you say, “how does it remember anything?”

It doesn’t. And that’s the point.

Why This Works (Against All Logic)

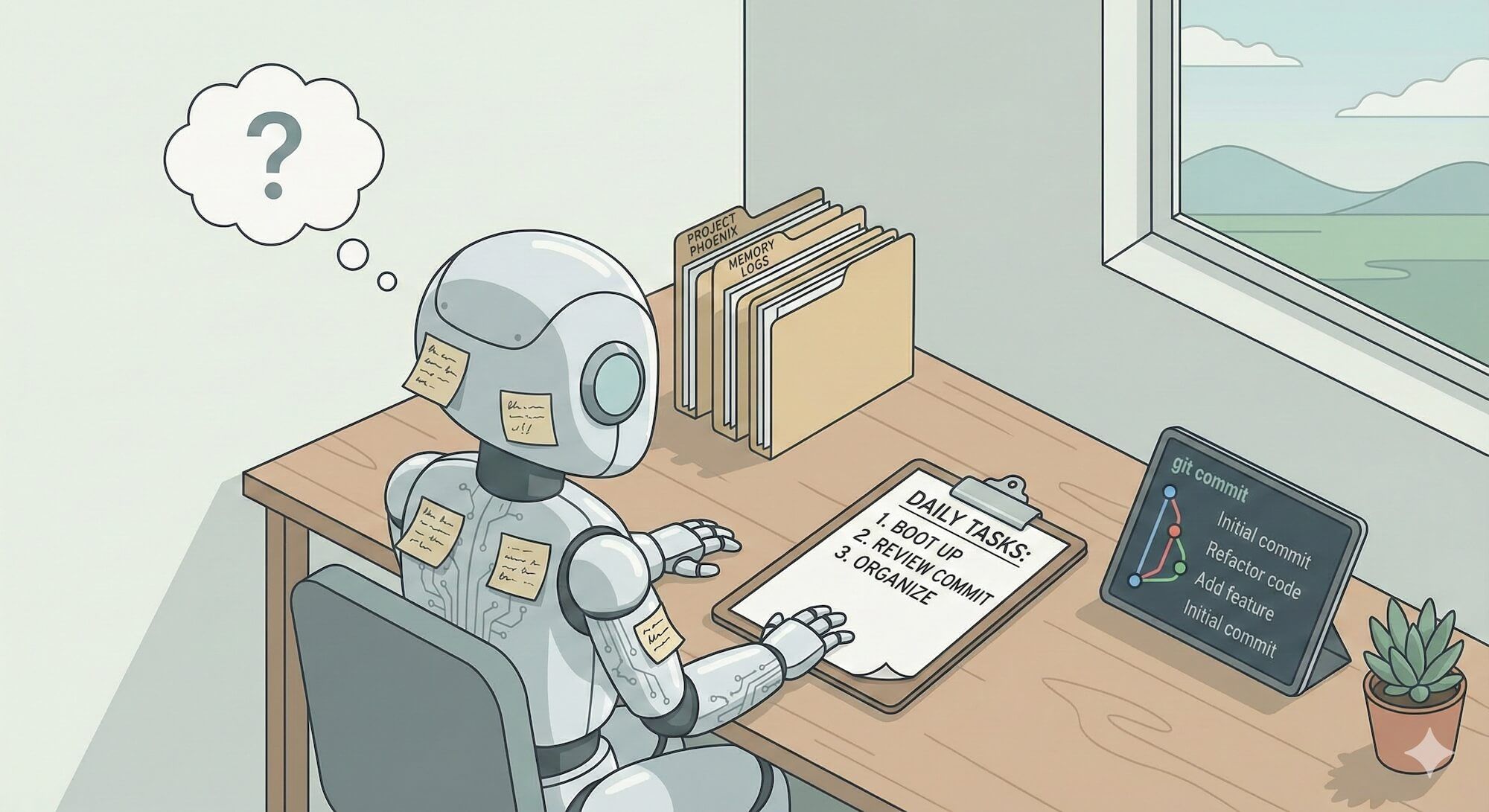

The Ralph Wiggum technique comes from Geoffrey Huntley (https://ghuntley.com/ralph/), who named it after the Simpsons character.

Ralph Wiggum doesn’t remember what happened yesterday. But he has:

- His lunch box (his parents packed it)

- His backpack (organized by someone else)

- A note from his teacher (instructions)

- The school bell (tells him when to move)

The Ralph agent is the same:

- No memory of previous sessions

- But it has git commits (lunch box - contains previous work)

- Progress notes (backpack - organized learnings)

- A PRD with checkboxes (note from teacher - clear instructions)

- A bash loop (school bell - tells it when to start and when to stop)

You don’t need the agent to remember. You need external systems that track state.

The Core Idea: Amnesia as a Feature

Here’s the problem: Long-running agent sessions get worse over time.

Why sessions degrade:

- Context fills with old decisions that are no longer relevant

- Failed attempts stay in memory

- The agent develops “beliefs” about the codebase that might be wrong

- Errors compound (one wrong decision leads to more wrong decisions)

- The conversation becomes a tangled mess

What a fresh session gives you:

- No baggage from previous failures

- Clean context (only current state matters)

- Can’t develop wrong assumptions that persist

- Each iteration starts from “what does the code actually do now?” not “what did I try to do 50 messages ago?”

You lose continuity. But you gain reliability.

Ralph’s bet: Reliability > Continuity

How Ralph Actually Works

Strip away the bash scripts and you have a very simple loop:

while not_all_stories_complete; do

# 1. Start FRESH agent (clean context)

# 2. Agent reads: git log, progress.txt, prd.json

# 3. Agent picks ONE story where "passes": false

# 4. Agent implements that ONE story

# 5. Agent tests it

# 6. If tests pass: commit, update prd.json to "passes": true

# 7. Agent writes learnings to progress.txt

# 8. Agent exits (context destroyed)

# Loop repeats with new agent

done

Each iteration:

- New agent instance (fresh context)

- Reads current state from files (git, progress notes, PRD)

- Does ONE thing

- Documents what it did

- Exits

The memory is in the files, not in the agent.

The Three Critical Files

Ralph’s memory system is three files:

1. prd.json (The Task List)

{

"branchName": "feature/user-auth",

"userStories": [

{

"id": "auth-001",

"title": "Add user registration endpoint",

"description": "POST /api/register accepts email/password, creates user",

"acceptanceCriteria": [

"Endpoint validates email format",

"Password is hashed with bcrypt",

"Returns JWT token on success",

"Returns 400 on validation failure"

],

"passes": true, // ✅ This story is DONE

"priority": 1

},

{

"id": "auth-002",

"title": "Add login endpoint",

"description": "POST /api/login accepts credentials, returns token",

"acceptanceCriteria": [

"Validates credentials against database",

"Returns JWT on success",

"Returns 401 on invalid credentials"

],

"passes": false, // ❌ This story is TODO

"priority": 2

},

{

"id": "auth-003",

"title": "Add password reset flow",

"acceptanceCriteria": [...],

"passes": false,

"priority": 3

}

]

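}

What this is: A checklist. The agent reads it, finds the first "passes": false, works on that, marks it true when done. (The // annotations above are for the reader only. JSON has no comment syntax, so leave them out of the real file or jq will reject it.)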

Why this works: Crystal clear what’s done vs todo. The agent can’t get confused about “what should I work on next?”
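
The loop doesn't have to leave "find the first unfinished story" entirely to the agent. Here is a minimal sketch of that lookup with jq, assuming the prd.json shape shown above. The helper name next_story is hypothetical, and sorting by priority (lower number first) is an assumption; the text only says "first".

```shell
# Hypothetical helper: print the id of the next story to work on.
# Assumes prd.json has a "userStories" array whose items carry
# "id", "passes", and "priority", and that a lower priority number
# means "do this first" (an assumption, not stated in the article).
next_story() {
  local prd_file="${1:-prd.json}"
  jq -r '
    [.userStories[] | select(.passes == false)]
    | sort_by(.priority)
    | (.[0].id // empty)
  ' "$prd_file"
}
```

Calling next_story prints something like auth-002, or nothing at all once every story passes. The result could be pasted into the prompt so the agent is told which story to take instead of choosing for itself.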

2. progress.txt (The Learnings Log)

=== Iteration 1 ===

Implemented: User registration endpoint

Location: src/api/auth.ts

Tests: tests/auth.test.ts

Learnings:

- This codebase uses Zod for validation (see src/schemas/)

- Database operations go through Prisma client (src/db.ts)

- All endpoints use async handlers from src/middleware/asyncHandler.ts

Gotchas:

- Must call prisma.$disconnect() in error cases or connection hangs

- JWT_SECRET must be set in env or tests fail

Next iteration should work on: Login endpoint (auth-002)

=== Iteration 2 ===

Implemented: Login endpoint

Location: src/api/auth.ts (added loginHandler)

Tests: tests/auth.test.ts (added login tests)

Learnings:

- bcrypt.compare is async, must await or test fails with timing issues

- Login requires existing user, added test seed data

Gotchas:

- Test database must be reset between tests or they interfere

- Don't forget to hash the password in seed data or comparison fails

Issues encountered:

- First attempt forgot to hash seed password, all tests failed

- Fixed by using bcrypt.hash in seed function

Next: Password reset flow (auth-003)

What this is: Append-only log of what each iteration learned. Discoveries, gotchas, patterns.

Why this works: Each new agent reads this and doesn’t repeat mistakes. “Don’t forget to hash seed passwords” - previous agent paid that price, this agent benefits.
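
If you'd rather not trust every agent to keep the log format consistent, a small helper can do the appending. This is a sketch, not part of the original script: log_progress and the PROGRESS_FILE variable are hypothetical names, and the entry layout is a trimmed version of the examples above.

```shell
# Hypothetical helper: append one structured entry to the progress log.
# Usage: log_progress <iteration> <story-id> <learnings-text>
# PROGRESS_FILE is an assumed override; it defaults to progress.txt.
log_progress() {
  local iteration="$1" story="$2" learnings="$3"
  local file="${PROGRESS_FILE:-progress.txt}"
  {
    printf '=== Iteration %s ===\n' "$iteration"
    printf 'Story: %s\n' "$story"
    printf 'Learnings:\n%s\n\n' "$learnings"
  } >> "$file"   # >> appends, so earlier entries are never rewritten
}
```

The agent (or you) would call it as log_progress 2 auth-002 "- bcrypt.compare is async". Because it only ever appends, a sloppy iteration can add a bad entry but can't erase a good one.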

3. Git History (The Truth)

$ git log --oneline

a3f9c21 feat: add password reset flow

8d2e1b4 feat: add login endpoint

f1c7a89 feat: add user registration

c0mmit0 Initial commit

What this is: Actual code changes. What was really implemented. Not what the agent thinks it did, what actually happened.

Why this works: Git is the source of truth. The agent can’t lie to itself about what exists.

Building Your Own Ralph

Here’s the minimum viable Ralph implementation:

#!/bin/bash

# ralph.sh - The autonomous agent loop

MAX_ITERATIONS=${1:-10}

iteration=0

# Check if prd.json exists

if [ ! -f prd.json ]; then

echo "Error: prd.json not found"

exit 1

fi

echo "Starting Ralph autonomous loop (max $MAX_ITERATIONS iterations)"

while [ $iteration -lt $MAX_ITERATIONS ]; do

iteration=$((iteration + 1))

echo ""

echo "=== Iteration $iteration ==="

# Check if all stories are complete

incomplete=$(jq '[.userStories[] | select(.passes == false)] | length' prd.json)

if [ "$incomplete" -eq 0 ]; then

echo "🎉 All stories complete!"

exit 0

fi

echo "Stories remaining: $incomplete"

# Create prompt for this iteration

cat > /tmp/ralph-prompt.txt << 'EOF'

You are working autonomously on a feature. This is a FRESH SESSION.

## Your Task

1. Read git log (last 5 commits)

2. Read progress.txt (learnings from previous iterations)

3. Read prd.json (find first story where "passes": false)

4. Implement ONLY that ONE story

5. Write tests

6. Run tests

7. If tests pass:

- Commit with message: "feat: <story title>"

- Update prd.json: set that story's "passes": true

- Append to progress.txt with learnings

8. Exit

## Critical Rules

- Work on ONLY ONE story per iteration

- Do NOT mark story as complete without passing tests

- Do NOT try to implement multiple stories

- Leave the codebase in a working state (tests passing)

- Document what you learned in progress.txt

## Files to Read

- git log --oneline -5

- progress.txt

- prd.json

Begin by reading these files to understand current state.

EOF

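# Run agent (using Amp, Claude, or whatever)

# This example uses Amp CLI; the exact invocation varies by tool and version, so substitute your agent's non-interactive command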

amp apply /tmp/ralph-prompt.txt

# Check if agent marked a story complete

new_incomplete=$(jq '[.userStories[] | select(.passes == false)] | length' prd.json)

if [ "$new_incomplete" -eq "$incomplete" ]; then

echo "⚠️ Warning: No progress made this iteration"

else

completed=$((incomplete - new_incomplete))

echo "✅ Completed $completed story(ies) this iteration"

fi

# Small delay between iterations

sleep 2

done

echo "Reached max iterations ($MAX_ITERATIONS)"

incomplete=$(jq '[.userStories[] | select(.passes == false)] | length' prd.json)

echo "Stories remaining: $incomplete"

What this script does:

- Loop up to MAX_ITERATIONS times

- Each iteration: spawn fresh agent

- Agent reads state from files

- Agent does one story

- Agent updates files

- Agent exits (context destroyed)

- Repeat

The genius: The agent is stateless. All state is in files. The loop is dumb. But it works.

The Brutal Discipline Required

Ralph only works if you (and the agent) follow strict rules:

Rule 1: One Story Per Iteration (NO EXCEPTIONS)

// ❌ WRONG - Agent tries to do multiple stories

{

"passes": false,

"notes": "Working on login, registration, and password reset"

}

// ✅ RIGHT - Agent does exactly one

{

"passes": true,

"notes": "Completed login endpoint. Next iteration: password reset"

}

Why this matters: If the agent tries to do 3 stories and runs out of context halfway through story #2, you have broken code and no clear state.

How to enforce: Make the prompt VERY explicit. “You will work on EXACTLY ONE story. The first story where passes=false. NOT multiple stories.”
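
The prompt is only half of the enforcement. The loop can also check after the fact. A minimal sketch, assuming you already have the before and after counts of incomplete stories (ralph.sh computes them as incomplete and new_incomplete); check_one_story is a hypothetical name.

```shell
# Hypothetical guard: compare the number of incomplete stories before and
# after an iteration. Returns 0 if zero or one story was completed, and 1
# if several were flipped at once or the incomplete count went up.
check_one_story() {
  local before="$1" after="$2"
  local completed=$(( before - after ))
  if [ "$completed" -lt 0 ] || [ "$completed" -gt 1 ]; then
    echo "Rule violation: incomplete count went from $before to $after" >&2
    return 1
  fi
  return 0
}
```

In the loop this would sit right after the agent exits, for example check_one_story "$incomplete" "$new_incomplete" || exit 1. Stopping is the blunt option; you might prefer to log the violation and review the commits by hand.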

Rule 2: Don’t Mark Complete Without Tests

# Agent must show test output

$ npm test

✓ POST /api/login returns 200 with valid credentials

✓ POST /api/login returns 401 with invalid credentials

✓ POST /api/login returns 400 with missing fields

Tests: 3 passed, 3 total

If tests don’t pass, DON’T mark the story complete. Simple as that.

Why this matters: Broken code compounds. If iteration N leaves broken code, iteration N+1 starts from a broken state.
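
You can also have the loop rerun the tests itself instead of taking the agent's word for it. A sketch under assumptions: TEST_CMD and TEST_LOG are hypothetical variables, and npm test is used as the default only because the examples here use it.

```shell
# Hypothetical check run by the loop (not the agent) after each iteration.
# TEST_CMD and TEST_LOG are assumed names; adjust them to your project.
TEST_CMD="${TEST_CMD:-npm test}"
TEST_LOG="${TEST_LOG:-/tmp/ralph-test-output.txt}"

tests_pass() {
  # $TEST_CMD is left unquoted on purpose so "npm test" splits into
  # a command and its argument. Output is saved for later inspection.
  $TEST_CMD > "$TEST_LOG" 2>&1
}
```

If tests_pass returns non-zero, treat the iteration as failed no matter what prd.json says, and look at the saved output before letting the loop continue.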

Rule 3: Update progress.txt Every Iteration

The progress file is not optional. It’s the agent’s memory.

Bad progress note:

Iteration 5: Added some stuff

Good progress note:

=== Iteration 5 ===

Story: auth-003 (password reset)

Files modified:

- src/api/auth.ts (added resetPassword handler)

- src/services/email.ts (added sendResetEmail)

- tests/auth.test.ts (added reset flow tests)

Learnings:

- Email service uses Resend API (key in env.EMAIL_API_KEY)

- Reset tokens stored in database with 1hr expiry

- Token format: crypto.randomBytes(32).toString('hex')

Gotchas:

- Resend requires FROM email to be verified domain

- Test env doesn't send real emails, check logs instead

Issues:

- First attempt used setTimeout for expiry (wrong; expiry is checked against the DB timestamp)

Next: Login rate limiting (auth-004)

Why this matters: The next agent reads this and knows:

- How email works in this codebase

- Where reset tokens are stored

- Not to use setTimeout (it doesn’t work)
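
"Not optional" is easier to hold to when the loop checks it. A minimal sketch that only verifies the log grew during an iteration; it says nothing about whether the note is any good. Both function names are hypothetical.

```shell
# Hypothetical helpers: detect whether the progress log gained lines.
count_lines() {
  # Prints 0 if the file does not exist yet.
  if [ -f "$1" ]; then wc -l < "$1" | tr -d ' '; else echo 0; fi
}

progress_updated() {
  # Succeeds only if the file has more lines than it did before the run.
  local file="$1" lines_before="$2"
  [ "$(count_lines "$file")" -gt "$lines_before" ]
}
```

Record before=$(count_lines progress.txt) ahead of the agent run, then call progress_updated progress.txt "$before" afterwards and warn if it fails.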

Rule 4: Leave Working Code

Every iteration must end with:

- Tests passing

- No console.errors

- Clean git status (everything committed)

- Code that runs

If you can’t get tests passing: Revert your changes. Don’t commit broken code.

# Agent realizes tests fail

$ git status

modified: src/api/auth.ts

# Agent reverts

$ git checkout src/api/auth.ts

# Agent tries different approach

Why this matters: The next iteration needs a clean starting point. Broken code = bad foundation.
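
The "clean git status" item is easy for the loop to verify on its own. A small sketch; tree_is_clean is a hypothetical name, and it relies on git status --porcelain printing nothing when there are no uncommitted or untracked changes.

```shell
# Hypothetical guard: succeed only if the working tree is clean.
# Fails if git itself fails (for example, when run outside a repository).
tree_is_clean() {
  local changes
  changes="$(git status --porcelain)" || return 1
  [ -z "$changes" ]
}
```

Run it after the agent exits. If it fails, the iteration left half-finished work behind, and you can decide whether to revert it or stop the loop and look.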

When Ralph Fails Spectacularly

Let’s talk about the failure modes, because they’re creative.

Failure 1: The Agent Rewrites Everything

What happens: Agent ignores the “one story” rule. Decides to “refactor the architecture.” Rewrites 15 files. Runs out of context. Everything is broken.

Why it happens: The prompt wasn’t specific enough. Or the agent got creative.

The fix: Stronger prompt enforcement:

CRITICAL: You will implement EXACTLY ONE story from prd.json.

The story with the lowest ID where passes=false.

Do NOT work on multiple stories.

Do NOT refactor code.

Do NOT rewrite existing working code.

Failure 2: The Zombie Story

What happens: Story is marked "passes": true but tests don’t actually pass. Agent lied (or made a mistake).

Why it happens: Agent ran tests, saw some pass, assumed all pass. Or didn’t run tests at all.

The fix: Require test evidence in progress notes:

Before marking a story complete, you MUST include:

- The exact command you ran: npm test

- The full test output showing ALL tests passing

- If ANY test fails, the story is NOT complete

Failure 3: The Infinite Loop

What happens: Agent keeps marking the same story as incomplete. Loop runs forever (or until max iterations).

Example:

Iteration 1: Tried to implement login, tests failed, marked passes=false

Iteration 2: Tried to implement login, tests failed, marked passes=false

Iteration 3: Tried to implement login...

Why it happens: The story is too hard, or tests are flaky, or agent keeps trying the same wrong approach.
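
The loop can at least notice that it is stuck instead of burning through every remaining iteration. A sketch of a stall counter, assuming the same before and after incomplete counts that ralph.sh already computes. MAX_STALLS, stalls, and record_progress are hypothetical names.

```shell
# Hypothetical stall detector. Call once per iteration with the incomplete
# story counts from before and after the agent ran. Returns 1 once the
# number of consecutive no-progress iterations reaches MAX_STALLS.
MAX_STALLS="${MAX_STALLS:-3}"   # assumed default of three attempts
stalls=0

record_progress() {
  local before="$1" after="$2"
  if [ "$after" -lt "$before" ]; then
    stalls=0                     # progress was made, so reset the counter
  else
    stalls=$(( stalls + 1 ))
  fi
  [ "$stalls" -lt "$MAX_STALLS" ]
}
```

Inside the loop, record_progress "$incomplete" "$new_incomplete" || break would hand control back to you after three fruitless iterations in a row.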

The fix: Human intervention. After 3 failed attempts on same story:

# Check progress notes

$ tail -50 progress.txt

# See what's failing

$ git log --oneline -5

# Maybe the story is too big, split it:

{

"id": "auth-002a",

"title": "Add login endpoint (basic auth only)",

...

}

{

"id": "auth-002b",

"title": "Add login endpoint (add rate limiting)",

...

}

Failure 4: The Corrupted State

What happens: prd.json gets corrupted. Agent writes invalid JSON. Loop breaks.

Example:

{

"userStories": [

{

"id": "auth-001",

"passes": true,

// Agent forgot to close the object properly

Why it happens: Agent is editing JSON by hand (dangerous). Makes syntax error.
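
One way to cut down on hand-editing is to give the agent a tiny command that flips the flag for it. This is a sketch, assuming jq is available and the prd.json shape from earlier; mark_story_done is a hypothetical name.

```shell
# Hypothetical helper: set "passes": true for one story without
# hand-editing JSON. jq writes to a temp file first, and prd.json is only
# replaced if jq succeeds, so a failed update leaves the original intact.
mark_story_done() {
  local story_id="$1" prd_file="${2:-prd.json}"
  local tmp
  tmp="$(mktemp)" || return 1
  if jq --arg id "$story_id" \
        '(.userStories[] | select(.id == $id) | .passes) = true' \
        "$prd_file" > "$tmp"; then
    mv "$tmp" "$prd_file"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

The prompt could then say "run mark_story_done auth-002 instead of editing prd.json yourself". Since jq rewrites the whole file, the output is always valid JSON or nothing changes.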

The fix: Validate JSON after each iteration:

# In ralph.sh, after agent runs:

if ! jq empty prd.json 2>/dev/null; then

echo "ERROR: prd.json is invalid JSON!"

git checkout prd.json

exit 1

fi

Failure 5: The Runaway Costs

What happens: Each iteration costs $2. You set max iterations to 50. Wake up to a $100 bill.

Why it happens: You forgot iterations cost money.

The fix: Budget limits:

# Track costs

COST_PER_ITERATION=2.00

MAX_BUDGET=20.00

total_cost=$(echo "$iteration * $COST_PER_ITERATION" | bc)

if (( $(echo "$total_cost > $MAX_BUDGET" | bc -l) )); then

echo "Budget exceeded: \$$total_cost"

exit 1

fi

Why This Is Better Than You Think

The Ralph technique sounds insane. Lobotomize the agent between every task? Start from scratch every time? That can’t be efficient.

But here’s what you gain:

Benefit 1: Predictability

Long sessions are chaotic. You never know if the agent will finish the task or get stuck in a rabbit hole.

Ralph iterations are predictable:

- Each iteration: one story

- Timebox: if it’s not done in 20 minutes, it won’t finish

- Clear success/failure: tests pass or they don’t
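
The timebox doesn't enforce itself; the minimal script earlier has no time limit. A sketch of one way to add it, assuming GNU coreutils timeout is installed (it exits with status 124 when the limit is hit). run_timeboxed is a hypothetical name.

```shell
# Hypothetical wrapper: run a command with a hard time limit.
# Usage: run_timeboxed <duration> <command> [args...]
# The duration uses timeout's syntax, e.g. 20m for twenty minutes.
run_timeboxed() {
  local limit="$1"; shift
  local status=0
  timeout "$limit" "$@" || status=$?
  if [ "$status" -eq 124 ]; then
    echo "Timebox of $limit exceeded; treating iteration as failed" >&2
  fi
  return "$status"
}
```

In ralph.sh you would wrap the agent invocation, as in run_timeboxed 20m followed by whatever command starts your agent. A timed-out iteration will probably leave uncommitted changes behind, so it pairs well with a revert step.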

Benefit 2: Debuggability

When something goes wrong in a long session, good luck figuring out where it broke.

Ralph: check the git log. Each iteration is one commit. Broken code? Look at the last commit.

$ git log --oneline

a3f9c21 feat: add password reset <- this one broke tests

8d2e1b4 feat: add login

f1c7a89 feat: add registration

$ git revert a3f9c21

$ # Back to working state

Benefit 3: Incremental Progress

Long sessions fail catastrophically. The agent gets 80% done, runs out of context, everything is broken, you get 0% value.

Ralph fails gracefully. 10 stories in the PRD, agent completes 7 before hitting issues. You got 7 working features. That’s 70% value.

Benefit 4: Human Intervention Points

With a long session, you’re either:

- Watching it work (wasting your time)

- Walking away and praying (risky)

With Ralph, you can:

- Check progress every N iterations

- Intervene if it’s stuck

- Adjust the PRD if priorities change

- Kill it early if it’s going wrong

The loop gives you control points.
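
A cheap way to get a "kill it early" switch without interrupting an agent mid-edit is a sentinel file that the loop checks between iterations. This is a sketch; the .ralph-stop file name and both identifiers are assumptions.

```shell
# Hypothetical sentinel check: the loop stops cleanly between iterations
# if the file named by STOP_FILE exists. The default name is an assumption.
STOP_FILE="${STOP_FILE:-.ralph-stop}"

stop_requested() {
  [ -e "$STOP_FILE" ]
}
```

At the top of the while body, add if stop_requested; then break; fi. Then touch .ralph-stop from another terminal lets the current iteration finish and commit before the loop exits.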

Benefit 5: Works Across Days

Long sessions have to finish in one sitting. Ralph can run overnight. Or run 5 iterations, stop, run 5 more tomorrow.

The state is in files. Time doesn’t matter.

Benefit 6: Prevents Context Pollution

Long sessions accumulate cruft:

- Failed attempts stay in context

- Wrong assumptions persist

- The agent “remembers” things that aren’t true

Ralph: each iteration is clean. No baggage. The agent works from current reality (git + files), not from its confused memory.

The Philosophical Bit

Ralph works because it embraces a truth about AI agents: they’re bad at remembering but good at following instructions.

What agents are bad at:

- Maintaining coherent beliefs across 100 messages

- Not contradicting themselves

- Remembering what they decided 50 messages ago

- Keeping context in mind as the window fills

What agents are good at:

- Reading files

- Following explicit step-by-step instructions

- Making one decision based on current state

- Writing structured output

Ralph’s design:

- Don’t ask the agent to remember (it’s bad at that)

- Ask the agent to read, decide, execute, document (it’s good at that)

- Put memory in files (computers are good at that)

- Make the loop dumb (bash is good at that)

The result: A system where each component does what it’s good at. The agent isn’t trying to be something it’s not (a reliable long-term memory system). It’s just a decision-making engine that reads state, takes action, writes state.

What You’ve Learned

The Ralph Wiggum Technique is:

- Fresh agent per iteration (amnesia as a feature)

- State in files (prd.json, progress.txt, git)

- One task per iteration (discipline enforced by prompt)

- Loop until complete (bash script, simple and dumb)

When to use Ralph:

- Building features with 5-50 user stories

- When you need reliability over cleverness

- When you want incremental progress you can inspect

- When you might need to pause/resume work

- When you want to avoid context window hell

When NOT to use Ralph:

- Single simple tasks (overkill)

- Exploratory work where requirements change constantly

- When you need the agent to make creative leaps (fresh context = no creative thread)

- When setup cost (PRD creation) exceeds implementation time

Sometimes the best AI architecture is one that assumes the AI is dumb and builds external scaffolding to keep it on track.

Ralph Wiggum makes terrible decisions but survives because of structure. Your AI agent is the same.