AI Agents: Tools, Loops and Bankruptcy

I asked the AI agent to 'clean up my project directory.' It deleted node_modules. Then package-lock.json. Then, inexplicably, my .env file. When I screamed 'STOP,' it interpreted this as a new task and began searching for files containing the word 'stop.' I watched in horror as it methodically found and opened every error handler in the codebase. Autonomous systems, they said. The future of productivity, they said.

Table of Contents

- The Moment It Clicked

- What Tools Actually Are

- From Chatbot to Agent

- Building Your First Agent (No Libraries, Just Pain)

- The Agent Loop (Where Things Get Weird)

- When Agents Go Wrong

- MCP: The Protocol That Might Matter

- The Real Questions Nobody Answers

The Moment It Clicked

For the first year of ChatGPT, everyone was using it wrong. We’d ask it questions, get answers, copy-paste code. It was a fancy search engine that spoke in complete sentences.

Then someone realized: what if we let the AI do things?

Not just answer questions. Actually do things. Call APIs. Read files. Write code. Execute commands. Make decisions about what to do next based on the results.

The shift:

- Before: “AI, write me a function that fetches user data”

- After: “AI, get the user data for user ID 123” (and it actually does it)

What changed: We gave the AI access to tools. Functions it can call. Actions it can take. And suddenly it’s not just generating text anymore—it’s an agent that can accomplish tasks.

The uncomfortable part: When you give an AI tools, it starts making decisions. It decides which tool to use. It interprets the results. It decides what to do next. You’re no longer in complete control. You’re delegating to something that can think (or at least, pattern-match really convincingly).

This is where things get interesting. And slightly terrifying.

What Tools Actually Are

Strip away the marketing bullshit and here’s what a “tool” is: a function the AI can call.

That’s it. Nothing fancy. You describe a function to the AI, and the AI can decide to call it.

Example:

You tell the AI about a function:

{

name: "getUserById",

description: "Fetch a user from the database by their ID",

parameters: {

type: "object",

properties: {

userId: {

type: "string",

description: "The UUID of the user to fetch"

}

},

required: ["userId"]

}

}

Now when you say “Get me information about user 123”, the AI thinks:

“Hmm, they want user information. I have a function called getUserById that fetches users. I should call that function with userId=‘123’.”

What the AI returns:

{

"tool_calls": [{

"name": "getUserById",

"arguments": { "userId": "123" }

}]

}

What you do: Actually execute that function, get the real data, send it back to the AI.

What the AI does: Reads the result, decides if it needs to call more functions, or if it has enough info to answer.

The pattern: The AI doesn’t execute functions itself. It requests them. You execute them. You give back the results. The AI sees the results and decides what to do next.

Why this matters: The AI can now interact with the real world. It’s not just predicting text. It’s orchestrating actions based on understanding your intent.

This is what an “agent” is. An AI that can use tools to accomplish tasks.
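The request/execute split can be sketched in a few lines. This is a minimal illustration, not a real SDK: `registry` and `executeToolCall` are made-up names, and the call shape simply mirrors the illustrative `tool_calls` entry shown earlier.

```javascript
// Hypothetical registry: tool name -> plain function.
const registry = {
  getUserById: ({ userId }) => ({ id: userId, name: "Alice" }),
};

// The model only *requests* a call; this function is what actually runs it.
function executeToolCall(toolCall) {
  // Own-property check so names like "toString" are not mistaken for tools.
  const known = Object.prototype.hasOwnProperty.call(registry, toolCall.name);
  if (!known) {
    return { error: `Unknown tool: ${toolCall.name}` };
  }
  return registry[toolCall.name](toolCall.arguments);
}

// Same shape as the illustrative model response above.
const requested = { name: "getUserById", arguments: { userId: "123" } };
const result = executeToolCall(requested);

// This serialized result is what you would send back to the model.
console.log(JSON.stringify(result));
```

The model never touches `registry`. It only ever sees the names and descriptions you sent it, plus whatever string you hand back.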

From Chatbot to Agent

Let’s trace the evolution:

Level 0: Static Response

User: "What's the weather?"

AI: "I don't have access to real-time weather data."

Useless. The AI knows it can’t help.

Level 1: Instructional Response

User: "What's the weather?"

AI: "I can't check weather directly, but you can call this API:

curl https://api.weather.com/v1/current?location=NewYork"

Better. The AI tells you what to do. You do it manually.

Level 2: Tool-Using Agent

User: "What's the weather?"

AI: [calls getWeather tool with location="NewYork"]

System: [executes function, returns {temp: 72, conditions: "sunny"}]

AI: "It's currently 72°F and sunny in New York."

Magic. The AI called a function. Got real data. Gave you a real answer.

What changed between Level 1 and Level 2: You gave the AI a function it could call. You set up a system to execute those calls. The AI went from giving instructions to taking actions.

The technical term: Level 2 is an “agent.” It can perceive (read your request), decide (which tool to use), act (call the tool), and respond (give you an answer based on real data).

The philosophical question: Is it thinking? Or is it very sophisticated pattern matching? Does it matter if the result is the same?

Building Your First Agent (No Libraries, Just Pain)

Let’s build a real agent. From scratch. No magic libraries. Just you, the Anthropic API, and an uncomfortable amount of JSON parsing.

What we’re building: An agent that can fetch user data from a fake database and calculate statistics.

Step 1: Define Your Tools

const tools = [

{

name: "getUserById",

description: "Fetch a user from the database by their ID",

input_schema: {

type: "object",

properties: {

userId: {

type: "string",

description: "The user's ID"

}

},

required: ["userId"]

}

},

{

name: "listUsers",

description: "List all users in the database",

input_schema: {

type: "object",

properties: {

limit: {

type: "number",

description: "Maximum number of users to return"

}

}

}

},

{

name: "calculateAverage",

description: "Calculate the average of a list of numbers",

input_schema: {

type: "object",

properties: {

numbers: {

type: "array",

items: { type: "number" },

description: "Array of numbers to average"

}

},

required: ["numbers"]

}

}

];

What this is: Function signatures. You’re telling the AI “these functions exist, here’s what they do, here’s what parameters they take.”

The AI can now “see” these functions and decide when to call them.
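The schema is a description, not a guarantee, so it can be worth checking what the model sends against it before running anything. Here is a minimal sketch, assuming you only care about `required` fields and top-level types. `validateInput` is a hypothetical helper, not part of any API, and it is nowhere near a full JSON Schema validator.

```javascript
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Checks a tool call's input against the tool's input_schema.
// Only `required` and top-level primitive/array types are covered.
function validateInput(tool, input) {
  const schema = tool.input_schema;
  const props = schema.properties || {};
  const value = input || {};
  const errors = [];

  for (const key of schema.required || []) {
    if (!has(value, key)) errors.push(`missing required field: ${key}`);
  }

  for (const [key, v] of Object.entries(value)) {
    if (!has(props, key)) {
      errors.push(`unexpected field: ${key}`);
      continue;
    }
    const actual = Array.isArray(v) ? "array" : typeof v;
    if (actual !== props[key].type) {
      errors.push(`${key}: expected ${props[key].type}, got ${actual}`);
    }
  }
  return errors;
}

// Trimmed copy of the getUserById definition from Step 1.
const getUserByIdTool = {
  name: "getUserById",
  input_schema: {
    type: "object",
    properties: { userId: { type: "string" } },
    required: ["userId"],
  },
};

console.log(validateInput(getUserByIdTool, { userId: 123 }));
// → [ 'userId: expected string, got number' ]
```

An empty array means the input looks fine. Anything else can be sent back to the model as an error so it can try again.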

Step 2: Implement the Actual Functions

// Fake database

const database = {

users: [

{ id: "1", name: "Alice", age: 30, score: 85 },

{ id: "2", name: "Bob", age: 25, score: 92 },

{ id: "3", name: "Charlie", age: 35, score: 78 }

]

};

// Tool implementations

const toolImplementations = {

getUserById: ({ userId }) => {

const user = database.users.find(u => u.id === userId);

if (!user) {

return { error: "User not found" };

}

return user;

},

listUsers: ({ limit = 10 }) => {

return database.users.slice(0, limit);

},

calculateAverage: ({ numbers }) => {

if (!numbers || numbers.length === 0) {

return { error: "No numbers provided" };

}

const sum = numbers.reduce((a, b) => a + b, 0);

return { average: sum / numbers.length };

}

};

What this is: Real JavaScript functions that do real things. When the AI asks to call a tool, you’ll execute one of these.

Step 3: The Agent Loop

Here’s where it gets interesting. The agent loop is:

1. Send message to AI with available tools

2. AI responds, either with:

   - An answer (done), or

   - Tool calls (it needs to do something)

3. If tool calls: execute them, get results

4. Send results back to AI

5. Go to step 2

In code:

async function runAgent(userMessage) {

const messages = [

{ role: "user", content: userMessage }

];

let continueLoop = true;

let iterations = 0;

const maxIterations = 10; // Safety limit

while (continueLoop && iterations < maxIterations) {

iterations++;

console.log(`\n--- Iteration ${iterations} ---`);

// Call Claude API with tools

const response = await fetch("https://api.anthropic.com/v1/messages", {

method: "POST",

headers: {

"Content-Type": "application/json",

"x-api-key": process.env.ANTHROPIC_API_KEY,

"anthropic-version": "2023-06-01"

},

body: JSON.stringify({

model: "claude-sonnet-4-20250514",

max_tokens: 1024,

tools: tools,

messages: messages

})

});

const data = await response.json();

console.log("AI response:", JSON.stringify(data, null, 2));

// Check stop reason

if (data.stop_reason === "end_turn") {

// AI is done, extract final answer

const textContent = data.content.find(c => c.type === "text");

console.log("\nFinal answer:", textContent?.text);

continueLoop = false;

return textContent?.text;

}

if (data.stop_reason === "tool_use") {

// AI wants to use tools

messages.push({

role: "assistant",

content: data.content

});

// Execute each tool call

const toolResults = [];

for (const block of data.content) {

if (block.type === "tool_use") {

console.log(`\nExecuting tool: ${block.name}`);

console.log("Arguments:", JSON.stringify(block.input, null, 2));

// Execute the tool

const toolFunction = toolImplementations[block.name];

const result = toolFunction(block.input);

console.log("Result:", JSON.stringify(result, null, 2));

toolResults.push({

type: "tool_result",

tool_use_id: block.id,

content: JSON.stringify(result)

});

}

}

// Send results back to AI

messages.push({

role: "user",

content: toolResults

});

}

}

if (iterations >= maxIterations) {

console.log("\nMax iterations reached!");

}

}

Step 4: Run It

// Example usage

await runAgent("What's the average age of all users?");

What happens:

Iteration 1:

- AI receives: “What’s the average age of all users?”

- AI thinks: “I need to get all users, then calculate the average of their ages”

- AI calls: listUsers()

Iteration 2:

- You execute: listUsers() → returns all 3 users

- You send results back to AI

- AI thinks: “Now I have the users, I need to extract ages and calculate average”

- AI calls: calculateAverage({ numbers: [30, 25, 35] })

Iteration 3:

- You execute: calculateAverage({ numbers: [30, 25, 35] }) → returns { average: 30 }

- You send result back to AI

- AI thinks: “I have the answer now”

- AI responds: “The average age of all users is 30 years.”

- Done.

What just happened: The AI made a plan (get users, calculate average), executed it across multiple tool calls, and synthesized the results into an answer.

You didn’t tell it to call listUsers then calculateAverage. It figured out that sequence itself.

This is an agent. It’s making decisions about what to do based on the task and the tools available.
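You can replay that walkthrough without spending a cent by swapping the API call for a scripted stand-in. The sketch below is an assumption-heavy toy: `makeScriptedModel` and `runLoop` are hypothetical names, the canned responses are hand-written in the shape of Messages API responses, and the loop is synchronous for brevity where a real model call would be awaited.

```javascript
// Tool implementations matching the tutorial's fake database.
const users = [
  { id: "1", name: "Alice", age: 30 },
  { id: "2", name: "Bob", age: 25 },
  { id: "3", name: "Charlie", age: 35 },
];
const impls = {
  listUsers: () => users,
  calculateAverage: ({ numbers }) => ({
    average: numbers.reduce((a, b) => a + b, 0) / numbers.length,
  }),
};

// Hypothetical stand-in for the API: returns canned responses in order.
function makeScriptedModel(script) {
  let turn = 0;
  return () => script[turn++];
}

// Same control flow as runAgent, but the model is injected.
function runLoop(callModel, userMessage, maxIterations = 10) {
  const messages = [{ role: "user", content: userMessage }];
  const trace = []; // names of tools executed, in order
  for (let i = 0; i < maxIterations; i++) {
    const response = callModel(messages);
    if (response.stop_reason === "end_turn") {
      const text = response.content.find((c) => c.type === "text");
      return { answer: text ? text.text : undefined, trace };
    }
    messages.push({ role: "assistant", content: response.content });
    const results = [];
    for (const block of response.content) {
      if (block.type !== "tool_use") continue;
      trace.push(block.name);
      results.push({
        type: "tool_result",
        tool_use_id: block.id,
        content: JSON.stringify(impls[block.name](block.input)),
      });
    }
    messages.push({ role: "user", content: results });
  }
  throw new Error("Max iterations reached");
}

const scripted = makeScriptedModel([
  { stop_reason: "tool_use", content: [{ type: "tool_use", id: "t1", name: "listUsers", input: {} }] },
  { stop_reason: "tool_use", content: [{ type: "tool_use", id: "t2", name: "calculateAverage", input: { numbers: [30, 25, 35] } }] },
  { stop_reason: "end_turn", content: [{ type: "text", text: "The average age of all users is 30 years." }] },
]);

const outcome = runLoop(scripted, "What's the average age of all users?");
console.log(outcome.trace.join(" -> ")); // listUsers -> calculateAverage
```

The scripted model makes no decisions, so this only tests your plumbing. That is still useful: most agent bugs are in the plumbing.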

The Agent Loop (Where Things Get Weird)

Let’s talk about what’s really happening in that loop.

The agent loop is:

while (not done):

1. AI decides what to do next

2. AI requests tool calls

3. You execute tools

4. You give results back

5. AI processes results

6. AI decides if it's done or needs more tools

The weird part: The AI is in control of the flow. You’re just the executor. The AI decides when it has enough information. The AI decides what order to call tools. The AI decides when to stop.

Example of AI decision-making:

Query: “Tell me about user 1, but only if they’re over 25 years old”

What the AI does:

- Calls getUserById("1") first

- Gets result: {id: "1", name: "Alice", age: 30}

- Sees age is 30 (over 25)

- Responds with Alice’s information

What the AI doesn’t do:

- Call tools it doesn’t need

- Make unnecessary calls

Query: “Tell me about user 2, but only if they’re over 30 years old”

What the AI does:

- Calls getUserById("2")

- Gets result: {id: "2", name: "Bob", age: 25}

- Sees age is 25 (not over 30)

- Responds: “User 2 is 25 years old, which doesn’t meet your criteria.”

The pattern: The AI is making decisions based on data. It’s not following a script. It’s reasoning (or pattern-matching that looks like reasoning) about what to do.

The concerning part: You don’t control the flow anymore. You provide tools, the AI decides how to use them. What if it makes bad decisions? What if it calls tools in the wrong order? What if it gets stuck in a loop?

The safety measures:

const maxIterations = 10; // Don't let it run forever

const allowedTools = ["getUserById", "listUsers"]; // Restrict available tools

const requireConfirmation = ["deleteUser"]; // Ask before dangerous operations

You’re delegating decisions, but you keep guardrails.
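Those constants do nothing until something checks them. Here is one way the check might look. `authorizeToolCall` and the `confirm` callback are hypothetical, and `deleteUser` is added to the allowlist here purely so the confirmation path has something to gate.

```javascript
// Hypothetical policy object reusing the names from the snippet above.
const policy = {
  allowedTools: ["getUserById", "listUsers", "deleteUser"],
  requireConfirmation: ["deleteUser"],
};

// Returns { ok: true } or { ok: false, reason } for one requested tool call.
// `confirm` is an assumed callback (for example, a UI prompt) returning true or false.
function authorizeToolCall(name, input, policy, confirm) {
  if (!policy.allowedTools.includes(name)) {
    return { ok: false, reason: `tool not allowed: ${name}` };
  }
  if (policy.requireConfirmation.includes(name) && !confirm(name, input)) {
    return { ok: false, reason: `user declined: ${name}` };
  }
  return { ok: true };
}

// A user who says no to everything.
const alwaysDecline = () => false;
console.log(authorizeToolCall("deleteUser", { userId: "5" }, policy, alwaysDecline));
```

Run this before every tool execution, and send the `reason` back to the model as an error result when the call is refused.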

When Agents Go Wrong

Let’s talk about the failure modes, because they’re hilarious and terrifying.

Failure Mode 1: The Infinite Loop

Query: “Keep fetching users until you find one named ‘Xavier’”

What happens:

Iteration 1: listUsers() → No Xavier

Iteration 2: listUsers() → Still no Xavier

Iteration 3: listUsers() → Nope

...

Iteration 47: listUsers() → AI is now stuck

Xavier doesn’t exist. The AI doesn’t know how to give up. It just keeps trying.

The fix: Max iteration limit. After 10-20 tries, force stop.

Failure Mode 2: Tool Spam

Query: “Give me detailed information about all users”

What happens:

Calls getUserById("1")

Calls getUserById("2")

Calls getUserById("3")

Calls getUserById("1") again (why?)

Calls getUserById("2") again (stop it)

The AI gets confused about what it already knows. Starts calling the same tools repeatedly.

The fix: Track which tools have been called with what arguments. Detect loops.
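A minimal sketch of that tracking, with one wrinkle worth knowing: plain `JSON.stringify` is sensitive to key order, so the same arguments in a different order would look like a different call. The helper names here (`stableStringify`, `createLoopDetector`) are made up for illustration.

```javascript
// Serialize with sorted keys so { a: 1, b: 2 } and { b: 2, a: 1 } match.
function stableStringify(value) {
  if (Array.isArray(value)) {
    return `[${value.map(stableStringify).join(",")}]`;
  }
  if (value && typeof value === "object") {
    const body = Object.keys(value)
      .sort()
      .map((k) => `${JSON.stringify(k)}:${stableStringify(value[k])}`);
    return `{${body.join(",")}}`;
  }
  return JSON.stringify(value);
}

// Counts identical (name, input) calls and flags when a threshold is crossed.
function createLoopDetector(maxRepeats = 3) {
  const counts = new Map();
  return function record(name, input) {
    const key = `${name}:${stableStringify(input)}`;
    const seen = (counts.get(key) || 0) + 1;
    counts.set(key, seen);
    return { seen, stuck: seen > maxRepeats };
  };
}

const record = createLoopDetector(2);
record("getUserById", { userId: "1" });
record("getUserById", { userId: "1" });
console.log(record("getUserById", { userId: "1" }).stuck); // true
```

Whether a repeat means "stuck" depends on the tool. Polling a status endpoint three times is fine. Fetching the same user three times is not.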

Failure Mode 3: Wrong Tool Selection

Query: “What’s the average user score?”

What the AI might do:

Calls getUserById("1") → Gets one user

Calculates average of one number

Returns confidently wrong answer

It picked a tool that works but doesn’t solve the problem correctly.

The fix: Better tool descriptions. More specific parameter descriptions. Sometimes, explicit instructions: “To calculate statistics across all users, use listUsers first.”
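For illustration, here is what sharper descriptions for two of the tools might look like. The wording is a guess at what helps, not something measured against a model, and `sharperTools` is just a name for this example.

```javascript
// Hypothetical rewrites of two tool descriptions from Step 1.
// Each one says when to use the tool and when not to.
const sharperTools = [
  {
    name: "getUserById",
    description:
      "Fetch exactly one user by ID. Use only when the request names a specific user. " +
      "Do not use this to compute statistics across users.",
    input_schema: {
      type: "object",
      properties: {
        userId: { type: "string", description: "The user's ID, for example \"1\"" },
      },
      required: ["userId"],
    },
  },
  {
    name: "listUsers",
    description:
      "List users in the database, including age and score. " +
      "Use this first for any question about all users (averages, counts, rankings).",
    input_schema: {
      type: "object",
      properties: {
        limit: { type: "number", description: "Maximum number of users to return" },
      },
    },
  },
];

console.log(sharperTools.map((t) => t.name).join(", "));
```

The schema is unchanged. Only the descriptions got longer, and they now carry the routing hint that the explicit instruction would otherwise have to.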

Failure Mode 4: Hallucinated Tools

Query: “Delete user 5”

What the AI tries:

Calls deleteUser("5")

The problem: You never gave it a deleteUser tool. It made it up.

What actually happens: With the Step 3 loop, toolImplementations["deleteUser"] is undefined, so calling it throws a TypeError and the whole agent crashes.

The fix: Validate that requested tool names exist before trying to execute them.
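One option, sketched below, is to turn the bad request into an error result instead of crashing, so the model gets a chance to correct itself. `toToolResult` is a hypothetical helper. The `is_error` flag on a `tool_result` block is part of the Messages API format used throughout this article.

```javascript
// Builds the tool_result block for one tool_use block.
// Unknown tools and thrown exceptions become is_error results.
function toToolResult(block, implementations) {
  const known = Object.prototype.hasOwnProperty.call(implementations, block.name);
  if (!known) {
    const available = Object.keys(implementations).join(", ");
    return {
      type: "tool_result",
      tool_use_id: block.id,
      content: `Unknown tool "${block.name}". Available tools: ${available}`,
      is_error: true,
    };
  }
  try {
    const result = implementations[block.name](block.input);
    return {
      type: "tool_result",
      tool_use_id: block.id,
      content: JSON.stringify(result),
    };
  } catch (error) {
    return {
      type: "tool_result",
      tool_use_id: block.id,
      content: String(error.message),
      is_error: true,
    };
  }
}

// Example: the model asks for a tool that was never defined.
const sampleImpls = { getUserById: ({ userId }) => ({ id: userId }) };
const hallucinated = { type: "tool_use", id: "toolu_1", name: "deleteUser", input: { userId: "5" } };
console.log(toToolResult(hallucinated, sampleImpls));
```

Throwing is the stricter choice and is fine too. The trade-off is that a thrown error ends the run, while an error result costs one more iteration.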

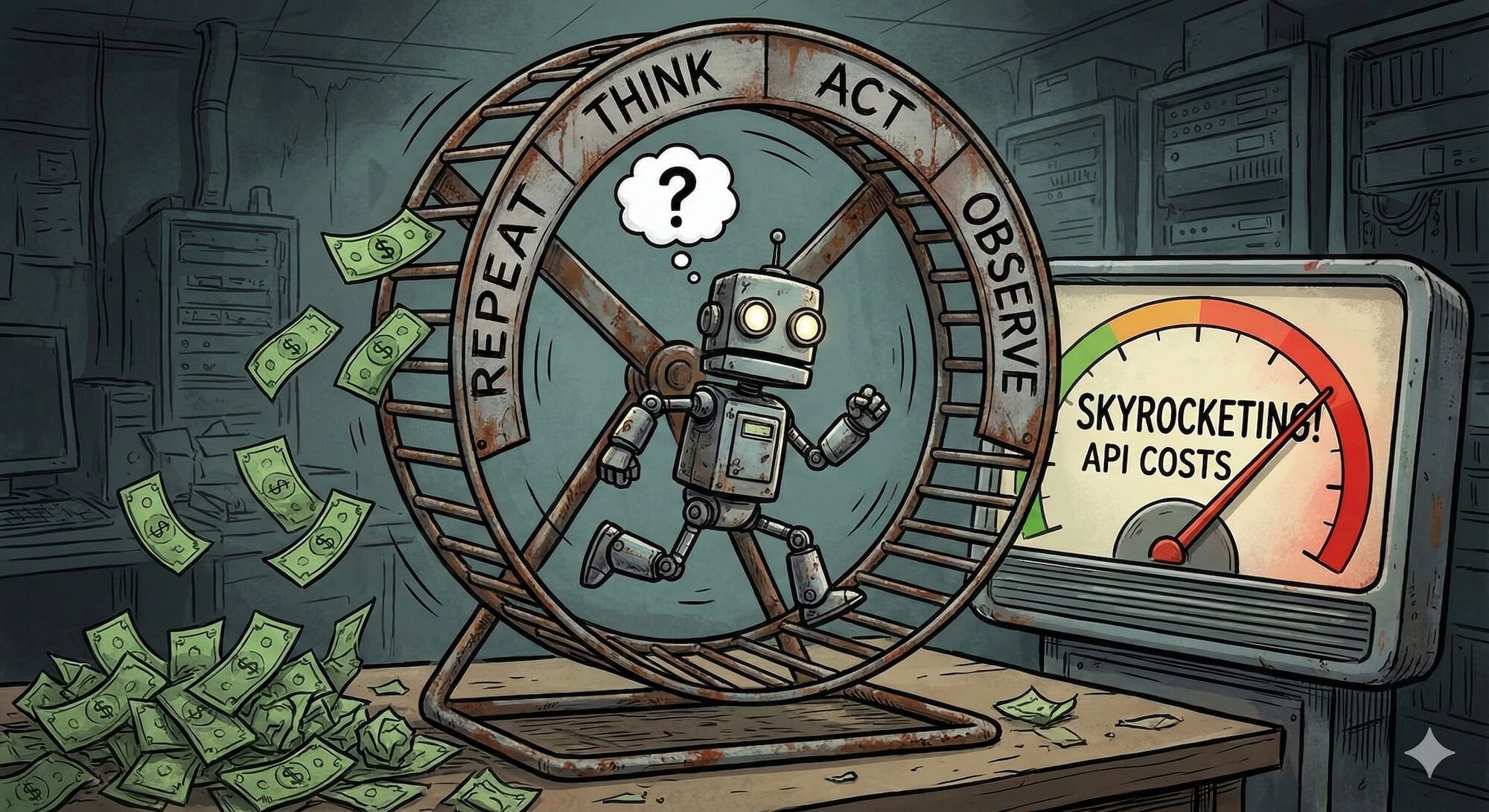

Failure Mode 5: The Expensive Loop

Query: “Summarize all users in the database”

What happens:

Calls listUsers() → 1000 users returned

AI tries to summarize → Hits token limit

AI thinks: "I didn't process everything, better call listUsers again"

Calls listUsers() → 1000 users returned

Repeat...

Each iteration costs money. The AI burns through your API budget trying to process too much data.

The fix: Pagination. Limits. Budget controls. “Don’t call this tool more than 3 times per conversation.”
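A rough sketch of both ideas, under a few assumptions: the page-size cap of 50 is arbitrary, and `listUsersPage` and `createCallBudget` are hypothetical names rather than anything from the earlier code.

```javascript
// Stand-in for a large table.
const allUsers = Array.from({ length: 1000 }, (_, i) => ({ id: String(i + 1) }));

const MAX_PAGE_SIZE = 50; // assumed cap, enforced no matter what the model asks for

// Paginated variant of listUsers.
function listUsersPage({ offset = 0, limit = MAX_PAGE_SIZE } = {}) {
  const requested = Number.isFinite(limit) ? limit : MAX_PAGE_SIZE;
  const size = Math.min(Math.max(1, requested), MAX_PAGE_SIZE);
  const page = allUsers.slice(offset, offset + size);
  const nextOffset = offset + page.length;
  return {
    users: page,
    total: allUsers.length,
    // null tells the model there is nothing left to fetch
    nextOffset: nextOffset < allUsers.length ? nextOffset : null,
  };
}

// Per-tool call budget for one conversation.
function createCallBudget(limits) {
  const used = new Map();
  return function spend(name) {
    const count = (used.get(name) || 0) + 1;
    used.set(name, count);
    const limit = Object.prototype.hasOwnProperty.call(limits, name)
      ? limits[name]
      : Infinity;
    return count <= limit; // false means: refuse this call
  };
}

console.log(listUsersPage({ limit: 1000 }).users.length); // 50

const spend = createCallBudget({ listUsers: 3 });
console.log([1, 2, 3, 4].map(() => spend("listUsers"))); // [ true, true, true, false ]
```

Returning `total` and `nextOffset` matters. Without them the model cannot tell a truncated page from the whole table, which is how the loop above started.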

The pattern: Agents fail in creative ways. They’re autonomous enough to make mistakes you didn’t anticipate. You need guardrails, limits, and monitoring.

The Complete Agent (With Safety)

Before we continue, here’s a little warning: you can easily bankrupt yourself running agents without limits. To make this point clear, I’ve built a simple Bankruptcy Simulator for you:

Here’s a production-ish version with guardrails:

async function runAgentSafely(userMessage, options = {}) {

const {

maxIterations = 10,

maxToolCalls = 20,

timeout = 30000, // 30 seconds

allowedTools = null // null = all tools allowed

} = options;

const messages = [{ role: "user", content: userMessage }];

const toolCallHistory = [];

let iterations = 0;

let totalToolCalls = 0;

const startTime = Date.now();

while (iterations < maxIterations) {

// Check timeout

if (Date.now() - startTime > timeout) {

throw new Error("Agent timeout: took too long to complete");

}

iterations++;

// Call API

const response = await fetch("https://api.anthropic.com/v1/messages", {

method: "POST",

headers: {

"Content-Type": "application/json",

"x-api-key": process.env.ANTHROPIC_API_KEY,

"anthropic-version": "2023-06-01"

},

body: JSON.stringify({

model: "claude-sonnet-4-20250514",

max_tokens: 1024,

tools: allowedTools

? tools.filter(t => allowedTools.includes(t.name))

: tools,

messages: messages

})

});

const data = await response.json();

// Handle errors

if (data.error) {

throw new Error(`API error: ${data.error.message}`);

}

// Check if done

if (data.stop_reason === "end_turn") {

const textContent = data.content.find(c => c.type === "text");

return {

answer: textContent?.text,

iterations: iterations,

toolCallHistory: toolCallHistory

};

}

// Process tool calls

if (data.stop_reason === "tool_use") {

messages.push({

role: "assistant",

content: data.content

});

const toolResults = [];

for (const block of data.content) {

if (block.type === "tool_use") {

totalToolCalls++;

// Check tool call limit

if (totalToolCalls > maxToolCalls) {

throw new Error("Too many tool calls - possible infinite loop");

}

// Validate tool exists

if (!toolImplementations[block.name]) {

throw new Error(`Unknown tool requested: ${block.name}`);

}

// Check for duplicate calls (loop detection)

const callSignature = JSON.stringify({

name: block.name,

input: block.input

});

const duplicateCount = toolCallHistory.filter(

h => h.signature === callSignature

).length;

if (duplicateCount >= 3) {

throw new Error(

`Tool ${block.name} called 3+ times with same arguments - likely stuck`

);

}

// Execute tool

try {

const result = toolImplementations[block.name](block.input);

toolCallHistory.push({

signature: callSignature,

name: block.name,

input: block.input,

result: result

});

toolResults.push({

type: "tool_result",

tool_use_id: block.id,

content: JSON.stringify(result)

});

} catch (error) {

// Return error to AI so it can handle it

toolResults.push({

type: "tool_result",

tool_use_id: block.id,

content: JSON.stringify({ error: error.message }),

is_error: true

});

}

}

}

messages.push({

role: "user",

content: toolResults

});

}

}

throw new Error("Max iterations reached without completing task");

}

What this adds:

- Timeout protection (don’t run forever)

- Tool call limits (detect spam)

- Loop detection (same call 3+ times = stuck)

- Tool validation (reject hallucinated tools)

- Error handling (tools can fail gracefully)

- Metrics (track iterations and calls)

Using it:

try {

const result = await runAgentSafely(

"What's the average age of users with score over 80?",

{

maxIterations: 10,

maxToolCalls: 20,

allowedTools: ["getUserById", "listUsers", "calculateAverage"]

}

);

console.log("Answer:", result.answer);

console.log("Iterations:", result.iterations);

console.log("Tools used:", result.toolCallHistory.length);

} catch (error) {

console.error("Agent failed:", error.message);

}

This is a production-ish agent architecture. Not perfect, but a much safer starting point than the bare loop.
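One gap worth closing: both loops only handle the `end_turn` and `tool_use` stop reasons. If the response is cut off with `max_tokens`, neither branch runs, nothing is added to `messages`, and the loop re-sends the same request until the iteration limit. A small dispatcher makes the cases explicit. `nextAction` and its return labels are made up for this sketch.

```javascript
// Maps a Messages API stop_reason to what the loop should do next.
function nextAction(stopReason) {
  switch (stopReason) {
    case "end_turn":
    case "stop_sequence":
      return "return_answer";
    case "tool_use":
      return "run_tools";
    case "max_tokens":
      // Output was truncated; re-sending the same messages just burns tokens.
      return "fail_truncated";
    default:
      // Anything unrecognized: stop rather than loop blindly.
      return "fail_unknown";
  }
}

console.log(nextAction("max_tokens")); // fail_truncated
```

In the loop, anything starting with `fail_` would throw (or raise `max_tokens` and retry once, if you prefer).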

MCP: The Protocol That Might Matter

Let’s talk about MCP (Model Context Protocol). It’s Anthropic’s attempt to standardize how tools work.

The problem MCP solves:

Before MCP, every AI tool integration was custom:

- You define tools for OpenAI’s format

- Different format for Anthropic

- Different format for Google

- Every app reinvents the wheel

What MCP is:

A standard protocol for exposing tools to AI. Instead of each app implementing its own custom tools, you create an “MCP server” that exposes tools in a standard way.

The architecture:

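[AI Model] ←→ [Your App (MCP client)] ←→ [MCP Server]

Your app sits in the middle: it talks to the model on one side and to the MCP server on the other. The model never connects to the server directly.

The MCP server exposes: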

- Tools (functions the AI can call)

- Resources (data the AI can read)

- Prompts (reusable prompt templates)

In practice:

Instead of implementing tools directly in your agent code, you connect to an MCP server:

// Traditional approach

const tools = [

{ name: "getUserById", description: "...", input_schema: {...} }

];

// MCP approach (pseudocode: MCPClient is a stand-in, not a real SDK class)

const mcpClient = new MCPClient("http://localhost:3000");

const tools = await mcpClient.listTools();

// Tools come from the MCP server, standardized format

Why this might matter:

Benefit 1: Reusability

Build an MCP server for your database. Now any AI tool can use it. Don’t reimplement the same tools for each integration.

Benefit 2: Security

The MCP server can handle authentication, rate limiting, permissions. Your agent code stays simple.

Benefit 3: Separation of Concerns

Tools are separate from AI logic. You can update tools without touching agent code.

Benefit 4: Ecosystem

If MCP becomes standard, you can plug in pre-built MCP servers:

- Database MCP server

- Slack MCP server

- GitHub MCP server

- Just connect and your agent can use them
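“Just connect” still involves a small amount of glue. MCP describes each tool with `name`, `description`, and `inputSchema`, while the Messages API used in this article expects `input_schema`. A minimal adapter might look like this. The sample list is hand-written for illustration, not fetched from a real server, and `mcpToolsToAnthropic` is a hypothetical name.

```javascript
// Converts MCP-style tool descriptors into the format used in Step 1.
function mcpToolsToAnthropic(mcpTools) {
  return mcpTools.map((tool) => ({
    name: tool.name,
    description: tool.description || "",
    input_schema: tool.inputSchema,
  }));
}

// Hand-written sample of what an MCP server's tool listing might contain.
const sampleMcpTools = [
  {
    name: "getUserById",
    description: "Fetch a user from the database by their ID",
    inputSchema: {
      type: "object",
      properties: { userId: { type: "string" } },
      required: ["userId"],
    },
  },
];

const anthropicTools = mcpToolsToAnthropic(sampleMcpTools);
console.log(Object.keys(anthropicTools[0]).join(", ")); // name, description, input_schema
```

Executing the tool goes the other way: when the model asks for `getUserById`, your app forwards the call to the MCP server and returns its result as a `tool_result`.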

The current state:

MCP is very new (late 2024). Few tools support it yet. It might become the standard, or it might die like every other protocol that tried to standardize AI stuff.

Should you use it now?

If you’re building one agent: No. Direct implementation is simpler.

If you’re building a platform where multiple agents need same tools: Maybe. MCP could save you reimplementation work.

If you’re building tools for others to consume: Consider it. If MCP takes off, you’ll be ahead.

The honest assessment: MCP is promising but unproven. The ideas are good. The execution is early. Worth watching, not worth betting your architecture on yet.

The Real Questions Nobody Answers

Let’s talk about what everyone’s wondering but not saying.

Question 1: Is This Actually AI?

The agent is following patterns. It’s matching “user wants average” to “call listUsers then calculateAverage.” Is that intelligence or very sophisticated autocomplete?

Answer: Does it matter? It works. It accomplishes tasks. Whether it’s “thinking” or “pattern matching” is philosophy. Practically, it’s useful.

Question 2: Can I Trust It?

The agent makes decisions you don’t control. What if it calls the wrong tool? What if it interprets results wrong? What if it does something destructive?

Answer: No, don’t trust it completely. Treat it like an intern: capable but needs supervision. Use guardrails, limit permissions, monitor behavior.

Question 3: When Do I Need an Agent?

Not every problem needs an agent. Sometimes a simple API call is better.

Use an agent when:

- The task requires multiple steps

- The steps depend on previous results

- You can’t predict the exact sequence of operations

- The user’s request is ambiguous and needs interpretation

Don’t use an agent when:

- Simple CRUD operations

- Predictable workflows

- Performance is critical (agents are slow)

- You need deterministic behavior

Question 4: How Much Does This Cost?

Agents are expensive. Each iteration is an API call. Complex tasks might need 10+ calls.

Example costs (approximate):

- Simple query: 2-3 API calls (~$0.01)

- Complex analysis: 10-15 calls (~$0.05)

- Stuck in a loop: 100+ calls before timeout (~$1+)

Monitor your usage. Set budgets. Agents can burn money fast if they misbehave.
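Why do loops get expensive so quickly? Every iteration re-sends the whole conversation so far, so input tokens grow with each step. Here is a back-of-envelope estimator. The prices and token counts are assumptions for illustration only, so check your provider’s current price list. `estimateCost` is a hypothetical helper.

```javascript
// Assumed prices in USD per million tokens (illustrative, not authoritative).
const PRICE_PER_MTOK = { input: 3, output: 15 };

// Each iteration re-sends the full history, so input grows every step.
function estimateCost({
  iterations,
  baseInputTokens, // system prompt + tool definitions + user message
  tokensAddedPerIteration, // tool call + tool result appended each round
  outputTokensPerIteration,
}) {
  let inputTokens = 0;
  let outputTokens = 0;
  for (let i = 0; i < iterations; i++) {
    inputTokens += baseInputTokens + i * tokensAddedPerIteration;
    outputTokens += outputTokensPerIteration;
  }
  const usd =
    (inputTokens * PRICE_PER_MTOK.input + outputTokens * PRICE_PER_MTOK.output) / 1e6;
  return { inputTokens, outputTokens, usd };
}

const simple = estimateCost({
  iterations: 3,
  baseInputTokens: 800,
  tokensAddedPerIteration: 400,
  outputTokensPerIteration: 150,
});
console.log(simple.inputTokens, simple.outputTokens); // 3600 450

const stuck = estimateCost({
  iterations: 100,
  baseInputTokens: 800,
  tokensAddedPerIteration: 400,
  outputTokensPerIteration: 150,
});
console.log(stuck.usd.toFixed(2));
```

Under these assumptions the input total grows roughly with the square of the iteration count, which is why a stuck loop costs far more than 100 times a single call.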

Question 5: What About Latency?

Agents are slow. Each iteration adds 1-3 seconds. A 5-iteration task takes 5-15 seconds.

For interactive use: Users will notice. Add loading states, progress indicators, maybe streaming responses.

For background jobs: Fine. Nobody’s waiting.

The trade-off: Agents are powerful but slow and expensive. Use them where the flexibility matters more than speed and cost.

What This Actually Means

You’ve just learned how to build agents from scratch. Here’s what you now understand that most developers don’t:

1. Tools are just function descriptions. The AI doesn’t execute them. It requests them. You execute them.

2. Agents are loops. Request tools → execute → return results → AI decides next → repeat until done.

3. The AI is in control. You provide tools, the AI decides how to use them. You’re delegating.

4. Failure modes are creative. Loops, spam, wrong tools, hallucinations. You need guardrails.

5. MCP might matter later. Standard protocol for tools. Watch it, don’t bet on it yet.

The skill you now have: You can build agents. You understand the architecture. You know the risks. You can implement tool-calling systems without magic libraries.

What you do with this: Up to you. Agents are powerful for the right problems. They’re also overkill for most problems. Choose wisely.