Building Your First AI Feature (The Portfolio Project)

Just spent $847 on OpenAI credits building a chatbot that answers questions about my resume. It works perfectly. I've used 4 agents in parallel. It has answered exactly zero questions because no recruiter has ever used it. They just read the resume like normal humans. I mass-applied to 200 jobs with this chatbot link. It was clicked 3 times. By me. Testing it. The ROI on this project is negative $847 and 40 hours of my life. But hey, it looks great on my GitHub.

Table of Contents

- Why You Need This

- What NOT to Build

- What Actually Impresses Interviewers

- The Project: Documentation Search

- RAG Explained Like You’re Not a PhD

- Setting Up the Foundation

- Step 1: Chunking Your Documents

- Step 2: Creating Embeddings

- Step 3: Vector Storage

- Step 4: The Search Function

- Step 5: The AI Response

- Step 6: The API Endpoint

- Deployment Without Bankruptcy

- How to Talk About This in Interviews

- The Honest Limitations

- Extensions for Extra Credit

- What You Actually Have Now

Why You Need This (The Interview Question)

“Have you built anything with AI?”

This question is coming. It shows up in almost every tech interview now. And your answer matters.

Bad answers:

- “I’ve used ChatGPT” (so has my grandmother)

- “I played around with the API” (so did everyone else)

- “I built a chatbot” (which chatbot? what does it do? crickets)

Good answer: “I built a documentation search system using RAG. It indexes our internal docs and lets developers ask questions in natural language. I used OpenAI embeddings for semantic search and GPT-4 for generating answers with citations. The tricky part was chunking documents correctly — too small and you lose context, too large and the embeddings get diluted. I ended up with overlapping chunks of about 500 tokens each.”

See the difference? The second answer shows you actually understand how this stuff works. You made decisions. You hit problems. You solved them.

That’s what this chapter is about: building something real that you can talk about intelligently.

What NOT to Build (The Graveyard of Portfolio Projects)

Before we build something good, let’s talk about what not to build:

The Generic Chatbot

“I built a chatbot that answers questions!”

About what? Using what data? What makes it different from ChatGPT itself? Nothing? Cool, so you wrapped an API call in a UI. Impressive.

Generic chatbots are the “to-do list app” of AI portfolio projects. Everyone has one. Nobody cares.

The “AI Wrapper”

“I built an app that uses AI to summarize articles!”

So… you send text to GPT and display the response? That’s not a project, that’s an API call with extra steps.

The Overly Ambitious Disaster

“I’m building an AI that will revolutionize healthcare/finance/education!”

No you’re not. You’re going to spend three months on it, hit a wall, and have nothing to show. Interviewers have seen this pattern. They’ll ask follow-up questions and discover you never finished it.

The “I Followed a Tutorial” Project

“I built this RAG system following a LangChain tutorial!”

Great, so did 50,000 other people. The code is identical. The approach is identical. You can’t explain why you made any decisions because you didn’t make any.

Following a tutorial isn’t learning — it’s typing. If you can’t rebuild it from scratch without looking at the tutorial, you don’t understand it. If you can’t explain why each piece exists, an interviewer will know.

What Actually Impresses Interviewers

Interviewers want to see three things:

1. You Made Decisions

Not “I used LangChain because the tutorial used it” but “I used LangChain because I needed the document loaders, but I wrote my own chunking logic because theirs didn’t handle code blocks well.”

Decisions show understanding. Anyone can copy code. Explaining WHY you did something shows you actually know what’s happening.

2. You Hit Problems and Solved Them

“The embeddings were returning irrelevant results for short queries, so I added query expansion — the system rephrases the question before searching.”

Problems are good. Everyone hits them. The developers who impress are the ones who can articulate what went wrong and how they fixed it.

3. You Understand the Limitations

“It works well for factual questions but struggles with anything requiring reasoning across multiple documents. Also, if the answer isn’t in the docs, it sometimes makes stuff up — I added a confidence threshold but it’s not perfect.”

This is gold. It shows you actually used the thing and understand where AI falls apart. Interviewers are tired of candidates who think AI is magic.

The Project: Documentation Search That Doesn’t Suck

Here’s what we’re building: a search system for documentation that actually understands questions.

Traditional search: You type “authentication,” it finds documents containing the word “authentication.”

Our search: You type “how do I add login to my app?” and it finds the relevant docs about authentication, OAuth setup, and session management — even if they never use the exact words in your query.

Why this project is good:

- Actually useful (every company has docs nobody can find anything in)

- Demonstrates real AI concepts (embeddings, RAG, prompt engineering)

- Has interesting technical decisions (chunking, retrieval, response generation)

- Cheap to run (doesn’t need massive compute)

- Easy to demo (paste a question, get an answer)

- Extendable (you can add features for extra credit)

Tech stack:

- Node.js/TypeScript (or Python, your choice)

- OpenAI API (embeddings + chat completion)

- Vector database (we’ll use a simple in-memory one, upgrade later)

- Express for the API (or FastAPI for Python)

Total cost to build and demo: ~$5-10 in API credits.

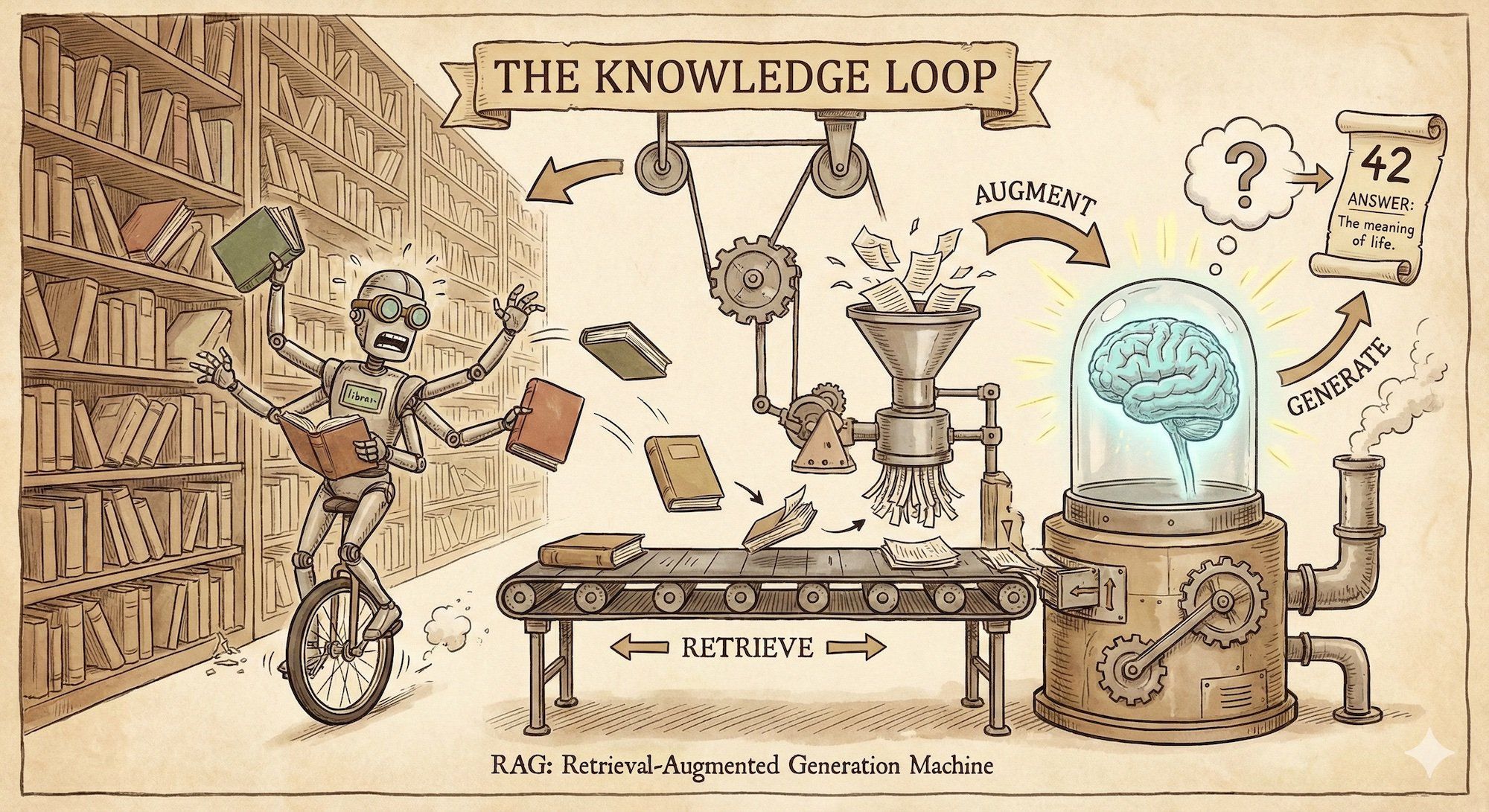

RAG Explained Like You’re Not a PhD

RAG stands for Retrieval Augmented Generation. Fancy name, simple concept:

- User asks a question

- Search for relevant documents (the Retrieval part)

- Give those documents to the AI along with the question (the Augmentation part)

- AI generates an answer based on the documents (the Generation part)

That’s it. You’re teaching the AI about your specific data by shoving relevant context into the prompt.
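The four steps map onto a few lines of code. This is a minimal sketch. `retrieve` and `generate` are hypothetical stand-ins passed in as functions; in this project they become the vector search and the OpenAI chat call. The prompt wording is illustrative. Because both are injected, you can test the plumbing with stubs before spending anything on API calls.

```typescript
// Hypothetical signatures: swap in the real vector search and LLM call later.
type Retrieve = (question: string) => Promise<string[]>;
type Generate = (prompt: string) => Promise<string>;

// Augmentation: paste the retrieved text into the prompt, numbered for citations.
export function buildPrompt(question: string, docs: string[]): string {
  const context = docs.map((doc, i) => `[${i + 1}] ${doc}`).join('\n\n');
  return `Answer using only these documents:\n\n${context}\n\nQuestion: ${question}`;
}

export async function answerQuestion(
  question: string,
  retrieve: Retrieve,
  generate: Generate
): Promise<string> {
  const docs = await retrieve(question);      // Retrieval
  const prompt = buildPrompt(question, docs); // Augmentation
  return generate(prompt);                    // Generation
}
```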

Why do we need this?

The AI doesn’t know about your company’s internal docs. It wasn’t trained on them. If you just ask “how do I deploy to our staging environment?” it’ll make something up.

But if you FIND the relevant deployment docs first, then ask “based on these documents, how do I deploy to staging?” — now it can give you a real answer.

The magic is in the retrieval. If you retrieve the wrong documents, the AI gives wrong answers. If you retrieve the right documents, the AI looks like a genius.

You might hear about “fine-tuning” as another way to teach AI about your data. Fine-tuning means continuing to train the model on your own examples. It’s expensive and slow, and the fine-tuned model can still hallucinate. RAG is cheaper and faster, and you can update the data without retraining anything. For most use cases, RAG wins.

Setting Up the Foundation

Let’s build this thing. I’ll use TypeScript/Node.js, but the concepts translate to any language.

First, the project structure:

```
doc-search/
├── src/
│   ├── index.ts        # API server
│   ├── chunker.ts      # Document chunking
│   ├── embeddings.ts   # OpenAI embeddings
│   ├── vectorStore.ts  # Vector storage and search
│   ├── rag.ts          # RAG pipeline
│   └── types.ts        # TypeScript types
├── docs/               # Your documentation files
├── data/               # Saved vector store (created on first run)
├── package.json
└── .env                # API keys (don't commit this)
```

Install dependencies:

```bash
npm init -y
npm install express openai dotenv
npm install -D typescript @types/express @types/node tsx
```

Your .env file:

```
OPENAI_API_KEY=sk-your-key-here
```

Basic types (src/types.ts):

```typescript
export interface DocumentChunk {
  id: string;
  content: string;
  metadata: {
    source: string;
    title?: string;
    section?: string;
  };
  embedding?: number[];
}

export interface SearchResult {
  chunk: DocumentChunk;
  score: number;
}

export interface RAGResponse {
  answer: string;
  sources: string[];
  confidence: number;
}
```

Step 1: Chunking Your Documents

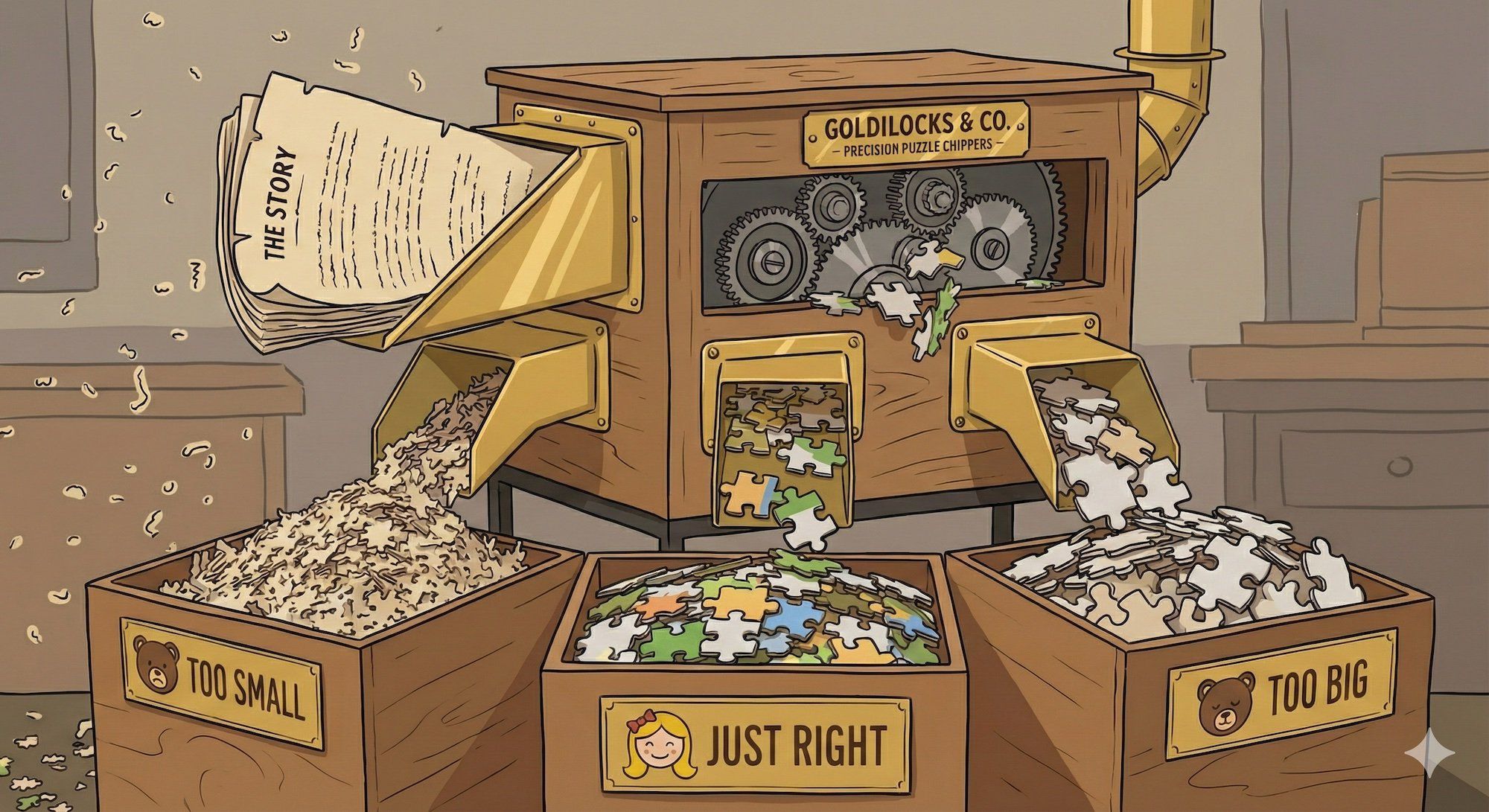

Here’s where most tutorials fail you: they gloss over chunking like it doesn’t matter. It matters a lot.

The problem: You can’t embed an entire 50-page document. The embedding model has limits (8191 tokens for OpenAI’s ada-002). Even if you could, a single embedding for 50 pages would be useless — it’d be a blurry average of everything.

The solution: Split documents into chunks. Each chunk gets its own embedding. When searching, you find the most relevant chunks.

The hard part: How big should chunks be?

- Too small (100 tokens): Chunks lack context. “See the configuration above” — what configuration? It’s in a different chunk now.

- Too large (2000 tokens): Embeddings become diluted. A chunk about authentication AND deployment AND testing matches queries about all three, poorly.

- Just right (300-500 tokens): Enough context to be meaningful, specific enough to be findable.

Here’s a chunker that actually works (src/chunker.ts):

```typescript
import { DocumentChunk } from './types';
import { randomUUID } from 'crypto';

interface ChunkOptions {
  maxTokens: number;
  overlap: number; // Approximate tokens to repeat between consecutive chunks
}

const DEFAULT_OPTIONS: ChunkOptions = {
  maxTokens: 500,
  overlap: 50
};

// Rough token estimation (~4 characters per token; accurate enough for chunking)
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Take the last overlapTokens / 2 words of a chunk. English averages a little
// over one token per word, so this carries somewhat fewer than overlapTokens.
// Returns '' when overlap is off: words.slice(-0) would return EVERY word.
function tailForOverlap(text: string, overlapTokens: number): string {
  const wordCount = Math.floor(overlapTokens / 2);
  if (wordCount <= 0) return '';
  return text.trim().split(/\s+/).slice(-wordCount).join(' ');
}

export function chunkDocument(
  content: string,
  source: string,
  options: ChunkOptions = DEFAULT_OPTIONS
): DocumentChunk[] {
  const chunks: DocumentChunk[] = [];

  // Split by paragraphs first (preserve natural boundaries)
  const paragraphs = content.split(/\n\n+/);
  let currentChunk = '';
  let currentTokens = 0;

  for (const paragraph of paragraphs) {
    const paragraphTokens = estimateTokens(paragraph);

    if (paragraphTokens > options.maxTokens) {
      // Oversized paragraph: save the current chunk first, then split by sentences
      if (currentChunk) {
        chunks.push(createChunk(currentChunk, source));
        currentChunk = '';
        currentTokens = 0;
      }

      // Note: a single sentence longer than maxTokens still becomes one oversized chunk
      const sentences = paragraph.split(/(?<=[.!?])\s+/);
      for (const sentence of sentences) {
        const sentenceTokens = estimateTokens(sentence);
        if (currentTokens + sentenceTokens > options.maxTokens && currentChunk) {
          chunks.push(createChunk(currentChunk, source));
          // Start the next chunk with the tail of the previous one
          const overlap = tailForOverlap(currentChunk, options.overlap);
          currentChunk = overlap ? `${overlap} ${sentence}` : sentence;
          currentTokens = estimateTokens(currentChunk);
        } else {
          currentChunk += (currentChunk ? ' ' : '') + sentence;
          currentTokens += sentenceTokens;
        }
      }
    } else if (currentTokens + paragraphTokens > options.maxTokens) {
      // Paragraph doesn't fit: save current chunk and start a new one with overlap
      chunks.push(createChunk(currentChunk, source));
      const overlap = tailForOverlap(currentChunk, options.overlap);
      currentChunk = overlap ? `${overlap}\n\n${paragraph}` : paragraph;
      currentTokens = estimateTokens(currentChunk);
    } else {
      // Add paragraph to current chunk
      currentChunk += (currentChunk ? '\n\n' : '') + paragraph;
      currentTokens += paragraphTokens;
    }
  }

  // Don't forget the last chunk
  if (currentChunk) {
    chunks.push(createChunk(currentChunk, source));
  }

  return chunks;
}

function createChunk(content: string, source: string): DocumentChunk {
  return {
    id: randomUUID(),
    content: content.trim(),
    metadata: { source }
  };
}
```

Why overlap? If the answer to a question spans two chunks, overlap helps. The end of chunk 1 and the start of chunk 2 share some content, so either might match the query.

If your docs contain code blocks, don’t split in the middle of them. Add logic to detect code fences (```) and keep code blocks intact. This is the kind of decision interviewers love hearing about.
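Here is a minimal sketch of that detection. It assumes Markdown docs that fence code with three backticks at the start of a line, possibly indented. The hypothetical `splitIntoBlocks` helper would replace the `content.split(/\n\n+/)` call in `chunkDocument`, so each code block reaches the chunker as one unit.

```typescript
// Built with repeat() so this snippet contains no literal fence of its own.
const FENCE = '`'.repeat(3);

// Split Markdown into blocks on blank lines, but never inside a fenced code block.
export function splitIntoBlocks(content: string): string[] {
  const blocks: string[] = [];
  let current: string[] = [];
  let inFence = false;

  const flush = () => {
    const block = current.join('\n').trim();
    if (block) blocks.push(block);
    current = [];
  };

  for (const line of content.split('\n')) {
    if (line.trimStart().startsWith(FENCE)) {
      if (!inFence) flush(); // An opening fence ends the preceding paragraph
      inFence = !inFence;
      current.push(line);
      if (!inFence) flush(); // A closing fence ends the code block
      continue;
    }
    if (!inFence && line.trim() === '') {
      flush(); // Blank line outside code = paragraph boundary
    } else {
      current.push(line); // Blank lines inside code are kept
    }
  }
  flush();
  return blocks;
}
```

One decision is still yours: a code block longer than `maxTokens` would still hit the chunker’s sentence-splitting branch, so you’d either keep oversized code blocks as single chunks or split them on blank lines inside the fence.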

Step 2: Creating Embeddings

Embeddings turn text into numbers. Specifically, a list of numbers (a vector) that represents the “meaning” of the text.

Similar meanings = similar vectors. “How do I log in?” and “authentication process” have similar embeddings even though they share no words.
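To make “similar vectors” concrete: similarity is usually measured with cosine similarity, the cosine of the angle between two vectors. The vector store in Step 3 uses the same formula. The three-dimensional vectors below are invented for illustration. Real ada-002 embeddings have 1,536 dimensions and come from the API.

```typescript
// Cosine similarity: 1 = same direction, 0 = unrelated (orthogonal).
export function cosine(a: number[], b: number[]): number {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Hypothetical 3-D "embeddings", made up for this example.
const login = [0.9, 0.1, 0.0];   // "How do I log in?"
const auth = [0.8, 0.2, 0.1];    // "authentication process"
const billing = [0.0, 0.1, 0.9]; // "update your invoice address"

console.log(cosine(login, auth).toFixed(2));    // 0.98
console.log(cosine(login, billing).toFixed(2)); // 0.01
```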

Here’s the embedding service (src/embeddings.ts):

```typescript
import OpenAI from 'openai';
import { DocumentChunk } from './types';

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY
});

export async function createEmbedding(text: string): Promise<number[]> {
  const response = await openai.embeddings.create({
    model: 'text-embedding-ada-002',
    input: text
  });
  return response.data[0].embedding;
}

export async function embedChunks(
  chunks: DocumentChunk[]
): Promise<DocumentChunk[]> {
  // Process in batches to avoid rate limits
  const batchSize = 20;
  const results: DocumentChunk[] = [];

  for (let i = 0; i < chunks.length; i += batchSize) {
    const batch = chunks.slice(i, i + batchSize);
    const response = await openai.embeddings.create({
      model: 'text-embedding-ada-002',
      input: batch.map(c => c.content)
    });

    for (let j = 0; j < batch.length; j++) {
      results.push({
        ...batch[j],
        embedding: response.data[j].embedding
      });
    }

    // Be nice to the API
    if (i + batchSize < chunks.length) {
      await new Promise(resolve => setTimeout(resolve, 100));
    }
  }

  return results;
}
```

Cost check: text-embedding-ada-002 costs $0.0001 per 1K tokens. Embedding 100 pages of docs (~50K tokens) costs about $0.005. Basically nothing.
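The same arithmetic works as a function, so you can quote a number for your own docs. This sketch reuses the chunker’s rough 4-characters-per-token estimate, so the result is an estimate, not a bill. The default price is the ada-002 figure above, and `pricePer1K` lets you plug in another model’s price.

```typescript
// Same rough heuristic as the chunker: ~4 characters per token.
export function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Estimated embedding cost in dollars for a set of documents.
// Default price: text-embedding-ada-002 at $0.0001 per 1K tokens.
export function estimateEmbeddingCost(docs: string[], pricePer1K = 0.0001): number {
  const totalTokens = docs.reduce((sum, doc) => sum + estimateTokens(doc), 0);
  return (totalTokens / 1000) * pricePer1K;
}

// 100 fake "pages" of 2,000 characters each = ~50K tokens
const fakeDocs = Array.from({ length: 100 }, () => 'x'.repeat(2000));
console.log(estimateEmbeddingCost(fakeDocs).toFixed(4)); // 0.0050
```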

Step 3: Vector Storage

Now we need to store these embeddings and search them. For a portfolio project, let’s start simple: in-memory storage with cosine similarity search.

```typescript
// src/vectorStore.ts
import { DocumentChunk, SearchResult } from './types';

export class VectorStore {
  private chunks: DocumentChunk[] = [];

  add(chunks: DocumentChunk[]): void {
    this.chunks.push(...chunks);
  }

  // Brute-force search: score every chunk, return the topK best
  search(queryEmbedding: number[], topK: number = 5): SearchResult[] {
    return this.chunks
      .filter(chunk => chunk.embedding) // Skip chunks that were never embedded
      .map(chunk => ({
        chunk,
        score: cosineSimilarity(queryEmbedding, chunk.embedding!)
      }))
      .sort((a, b) => b.score - a.score)
      .slice(0, topK);
  }

  // For persistence (save to file)
  serialize(): string {
    return JSON.stringify(this.chunks);
  }

  // Load from file
  static deserialize(data: string): VectorStore {
    const store = new VectorStore();
    store.chunks = JSON.parse(data);
    return store;
  }
}

function cosineSimilarity(a: number[], b: number[]): number {
  let dotProduct = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dotProduct += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // A zero vector would otherwise produce NaN and break the sort
  if (normA === 0 || normB === 0) return 0;
  return dotProduct / (Math.sqrt(normA) * Math.sqrt(normB));
}
```

For production, you’d use a real vector database: Pinecone, Weaviate, Qdrant, or pgvector. They handle millions of vectors, persist to disk, and search faster. For a portfolio project with a few hundred chunks, in-memory is fine and simpler to explain.

Step 4: The Search Function

Now we wire it together — take a query, embed it, search for similar chunks:

```typescript
// src/rag.ts
import { createEmbedding } from './embeddings';
import { VectorStore } from './vectorStore';
import { SearchResult } from './types';

export async function searchDocuments(
  query: string,
  store: VectorStore,
  topK: number = 5,
  threshold: number = 0.7 // Minimum cosine similarity; tune it for your embedding model
): Promise<SearchResult[]> {
  // Embed the query
  const queryEmbedding = await createEmbedding(query);

  // Search for similar chunks
  const results = store.search(queryEmbedding, topK);

  // Filter low-confidence results
  return results.filter(r => r.score > threshold);
}
```

The threshold is important. Without it, you always return results even if nothing matches. A query about “quantum physics” shouldn’t return your deployment docs just because they’re the “closest” match (they’re all far). The right value depends on the embedding model: with text-embedding-ada-002, even unrelated text often scores around 0.7, so treat 0.7 as a starting point and check the scores a few off-topic queries actually get before trusting it.
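Because a fixed threshold depends so much on the model, one option is to pair it with a relative cutoff that keeps only results scoring close to the best match. This is a sketch. It redeclares a simplified `SearchResult` so the block runs on its own, and `minScore` and `maxDrop` are illustrative values to tune against your own queries, not recommendations.

```typescript
// Simplified version of SearchResult from src/types.ts, redeclared to run standalone.
interface SearchResult {
  chunk: { id: string; content: string };
  score: number;
}

// Keep results that clear an absolute floor AND sit close to the top score.
// Assumes `results` is sorted best-first, as VectorStore.search returns them.
export function filterResults(
  results: SearchResult[],
  minScore = 0.7,
  maxDrop = 0.05
): SearchResult[] {
  if (results.length === 0) return [];
  const best = results[0].score;
  return results.filter(r => r.score >= minScore && best - r.score <= maxDrop);
}
```

The relative part keeps one strong match from dragging in four mediocre ones. The absolute floor still decides when there is no answer at all.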

Step 5: The AI Response

Here’s where the “generation” in RAG happens. We take the retrieved chunks and ask the AI to answer based on them:

```typescript
// src/rag.ts (continued)
// Move these imports to the top of src/rag.ts. SearchResult is already imported
// there; importing it a second time is a "Duplicate identifier" error.
import OpenAI from 'openai';
import { RAGResponse } from './types';

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY
});

export async function generateAnswer(
  query: string,
  searchResults: SearchResult[]
): Promise<RAGResponse> {
  // No relevant docs found
  if (searchResults.length === 0) {
    return {
      answer: "I couldn't find any relevant documentation for your question.",
      sources: [],
      confidence: 0
    };
  }

  // Build numbered context from search results so the model can cite [1], [2], ...
  const context = searchResults
    .map((r, i) => `[${i + 1}] ${r.chunk.content}`)
    .join('\n\n---\n\n');

  const sources = [...new Set(searchResults.map(r => r.chunk.metadata.source))];

  const systemPrompt = `You are a helpful documentation assistant. Answer questions based ONLY on the provided documentation excerpts.

Rules:
- Only use information from the provided excerpts
- If the excerpts don't contain the answer, say so clearly
- Cite your sources using [1], [2], etc.
- Be concise and direct
- If you're not confident in the answer, say so`;

  const userPrompt = `Documentation excerpts:

${context}

---

Question: ${query}

Answer based only on the documentation above:`;

  const response = await openai.chat.completions.create({
    model: 'gpt-4-turbo-preview',
    messages: [
      { role: 'system', content: systemPrompt },
      { role: 'user', content: userPrompt }
    ],
    temperature: 0.3, // Lower = more focused on the docs
    max_tokens: 500
  });

  const answer = response.choices[0].message.content || 'No response generated';

  // Rough confidence: the average similarity score of the retrieved chunks
  const avgScore = searchResults.reduce((sum, r) => sum + r.score, 0) / searchResults.length;

  return {
    answer,
    sources,
    confidence: avgScore
  };
}
```

Key decisions to talk about in interviews:

- Temperature 0.3 — We want the AI to stick to the docs, not get creative

- Citation requirement — Makes it traceable, users can verify

- “Only use provided excerpts” — Reduces hallucination

- Confidence score — Lets the UI show uncertainty
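Citations only help if you check them. Here is a sketch of a post-processing step that is not part of the pipeline above. It pulls the `[n]` markers out of the answer and maps them back to the excerpts that were sent. That lets the API return only the sources the model actually cited and flag numbers that point at nothing, which is a cheap hallucination signal. `extractCitations` is a hypothetical helper name.

```typescript
export interface CitationCheck {
  cited: number[];   // 1-based excerpt numbers referenced in the answer
  invalid: number[]; // Numbers with no matching excerpt
  sources: string[]; // Unique sources behind the valid citations
}

// `excerptSources[i]` is the source file of excerpt [i + 1] in the prompt.
export function extractCitations(answer: string, excerptSources: string[]): CitationCheck {
  const markers = answer.match(/\[(\d+)\]/g) ?? []; // e.g. ["[2]", "[1]"]
  const numbers = markers.map(m => Number(m.slice(1, -1)));
  const cited = Array.from(new Set(numbers)).sort((a, b) => a - b);
  const inRange = (n: number) => n >= 1 && n <= excerptSources.length;
  const valid = cited.filter(inRange);
  const invalid = cited.filter(n => !inRange(n));
  const sources = Array.from(new Set(valid.map(n => excerptSources[n - 1])));
  return { cited, invalid, sources };
}
```

In `generateAnswer`, excerpt `[i + 1]` comes from `searchResults[i]`. You’d pass `searchResults.map(r => r.chunk.metadata.source)` as `excerptSources` and return `sources` from the check instead of every retrieved source.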

Step 6: The API Endpoint

Finally, let’s expose this as an API:

```typescript
// src/index.ts
// Load .env BEFORE anything else: embeddings.ts and rag.ts create their OpenAI
// clients as soon as they are imported, so the key must already be in process.env.
import 'dotenv/config';
import express from 'express';
import * as fs from 'fs';
import * as path from 'path';
import { VectorStore } from './vectorStore';
import { searchDocuments, generateAnswer } from './rag';
import { chunkDocument } from './chunker';
import { embedChunks } from './embeddings';

const app = express();
app.use(express.json());

let store: VectorStore;

// Initialize: load the saved store, or build it from ./docs and save it
async function initialize() {
  console.log('Initializing document store...');
  const storePath = './data/store.json';

  // Try to load existing store (delete data/store.json to force a rebuild)
  if (fs.existsSync(storePath)) {
    console.log('Loading existing store...');
    const data = fs.readFileSync(storePath, 'utf-8');
    store = VectorStore.deserialize(data);
    console.log('Store loaded.');
    return;
  }

  // Build new store from docs
  store = new VectorStore();
  const docsDir = './docs';
  if (!fs.existsSync(docsDir)) {
    console.log('No docs directory found. Creating empty store.');
    return;
  }

  const files = fs.readdirSync(docsDir).filter(f => f.endsWith('.md'));
  console.log(`Found ${files.length} documentation files.`);

  for (const file of files) {
    console.log(`Processing ${file}...`);
    const content = fs.readFileSync(path.join(docsDir, file), 'utf-8');
    const chunks = chunkDocument(content, file);
    const embeddedChunks = await embedChunks(chunks);
    store.add(embeddedChunks);
  }

  // Save for next time
  fs.mkdirSync('./data', { recursive: true });
  fs.writeFileSync(storePath, store.serialize());
  console.log('Store built and saved.');
}

// Search endpoint
app.post('/api/search', async (req, res) => {
  try {
    // req.body can be undefined (Express 5) when the request isn't JSON
    const { query } = req.body ?? {};
    if (!query || typeof query !== 'string') {
      res.status(400).json({ error: 'Query is required' });
      return;
    }
    const searchResults = await searchDocuments(query, store);
    const response = await generateAnswer(query, searchResults);
    res.json(response);
  } catch (error) {
    console.error('Search error:', error);
    res.status(500).json({ error: 'Search failed' });
  }
});

// Health check
app.get('/health', (_req, res) => {
  res.json({ status: 'ok' });
});

const PORT = process.env.PORT || 3000;

initialize()
  .then(() => {
    app.listen(PORT, () => {
      console.log(`Server running on port ${PORT}`);
    });
  })
  .catch(error => {
    console.error('Failed to initialize:', error);
    process.exit(1);
  });
```

Deployment Without Bankruptcy

You built it. Now you need to host it somewhere interviewers can see it.

Free/cheap options:

| Platform | Cost | Pros | Cons |
|---|---|---|---|
| Railway | Free tier, then ~$5/mo | Easy, supports Node | Limited free hours |
| Render | Free tier | Simple deploy | Sleeps after 15 min of inactivity |
| Fly.io | Free tier | Good performance | Slightly more setup |
| Vercel | Free | Great for Next.js | Serverless, might need tweaks |

For a portfolio project: Railway or Render. Push to GitHub, connect the repo, deploy. Done in 10 minutes.

Cost of running:

- Hosting: $0-5/month

- OpenAI API: ~$0.10-1.00/month for demo traffic

- Total: Under $10/month

Don’t use proprietary docs for your demo. Use something public: React docs, Node.js docs, or write fake company docs. You want interviewers to be able to test it without NDA concerns.

How to Talk About This in Interviews

When they ask “have you built anything with AI?”, here’s your framework:

1. Start with the problem: “Documentation search is terrible. You search for ‘authentication’ and get 50 results. I built a system where you can ask ‘how do I add Google login?’ and it finds the relevant docs and summarizes them.”

2. Explain the approach: “It uses RAG — I chunk the documents, create embeddings with OpenAI, store them in a vector database, then retrieve relevant chunks when someone asks a question. The AI generates an answer based only on the retrieved docs.”

3. Talk about a decision you made: “The hardest part was chunking. Too small and you lose context, too big and search quality drops. I ended up with 500-token chunks with 50-token overlap. I also had to handle code blocks specially — you can’t split in the middle of a code example.”

4. Mention a limitation: “It works well for factual questions with clear answers in the docs. It struggles with questions that need reasoning across multiple sections, and if the answer isn’t in the docs, it sometimes makes things up despite my prompt engineering. I added a confidence score but it’s not perfect.”

5. If they ask for more: “I’d like to add conversation history so follow-up questions work better, and maybe re-ranking with a cross-encoder to improve retrieval quality.”

This shows you understand the tech, made real decisions, know the limitations, and have ideas for improvement. That’s what interviewers want to see.

The Honest Limitations

Your project has limitations. Know them before the interviewer asks:

1. Single-turn only: each query is independent. “What about authentication?” doesn’t know you were just asking about deployment. Fix: add conversation history.

2. Retrieval quality varies: short queries often miss. “Login” might not find “authentication” docs. Fix: query expansion or hybrid search.

3. Can still hallucinate: despite instructions, the AI sometimes invents details not in the docs. Fix: more aggressive prompting, confidence thresholds, human review.

4. Doesn’t handle updates well: change a doc and you need to re-embed it. Fix: incremental embedding updates that track file hashes.

5. No access control: you can’t restrict which docs different users see. Fix: add user context to search filtering.

The point: Know these exist. Mention them before they’re discovered. Shows maturity.
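For limitation 4, “track file hashes” can be this small. This sketch makes a few assumptions. You keep a JSON map of file name to SHA-256 hash next to data/store.json. That map and the `diffDocs` helper are hypothetical, not part of the project code above. You’d also still need to add a method to `VectorStore` that removes chunks by `metadata.source`.

```typescript
import { createHash } from 'crypto';

// SHA-256 of the file contents: any edit changes the hash.
export function hashContent(content: string): string {
  return createHash('sha256').update(content).digest('hex');
}

export interface DocDiff {
  changed: string[];              // New or modified files: re-chunk and re-embed
  removed: string[];              // Deleted files: drop their chunks
  hashes: Record<string, string>; // Save this for the next run
}

// `current` maps file name -> contents; `previous` maps file name -> saved hash.
export function diffDocs(
  current: Record<string, string>,
  previous: Record<string, string>
): DocDiff {
  const hashes: Record<string, string> = {};
  const changed: string[] = [];
  for (const [file, content] of Object.entries(current)) {
    hashes[file] = hashContent(content);
    if (previous[file] !== hashes[file]) changed.push(file);
  }
  const removed = Object.keys(previous).filter(file => !(file in current));
  return { changed, removed, hashes };
}
```

On startup, drop the old chunks for every file in `changed` or `removed`, re-embed only `changed`, then save `hashes` for next time.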

Extensions for Extra Credit

If you want to go further:

- Conversation history — Remember previous questions, handle “what about X?”

- Hybrid search — Combine vector search with keyword search for better recall

- Re-ranking — Use a cross-encoder to re-score results

- Streaming — Stream the AI response for better UX

- Analytics — Track what questions people ask, which go unanswered

- Feedback loop — Let users rate answers, use for improvement

Don’t do all of these. Pick one, do it well, and be able to talk about why you chose it.
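If you pick hybrid search, the interesting decision is how to merge the two result lists. One well-known option is reciprocal rank fusion (RRF). Each document scores 1 / (k + rank) in every list it appears in, and the scores are summed, so you never compare cosine scores with keyword scores directly. This sketch assumes you already have two best-first lists of chunk IDs: one from the vector store, one from a keyword search you’d add. k = 60 is the constant commonly used with RRF.

```typescript
// Reciprocal rank fusion: merge several best-first rankings of IDs.
export function reciprocalRankFusion(rankings: string[][], k = 60): string[] {
  const scores = new Map<string, number>();
  for (const ranking of rankings) {
    ranking.forEach((id, index) => {
      // index is 0-based, so the rank is index + 1
      scores.set(id, (scores.get(id) ?? 0) + 1 / (k + index + 1));
    });
  }
  // Highest fused score first
  return Array.from(scores.entries())
    .sort((a, b) => b[1] - a[1])
    .map(([id]) => id);
}

const vectorHits = ['auth', 'oauth', 'sessions'];
const keywordHits = ['login-page', 'auth', 'sessions'];
console.log(reciprocalRankFusion([vectorHits, keywordHits]).join(', '));
// auth, sessions, login-page, oauth
```

Documents both searches agree on rise to the top. That is the recall boost hybrid search is for.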

What You Actually Have Now

You built a real AI feature. Not a wrapper. Not a tutorial copy-paste. Something with:

- Real technical decisions (chunking size, overlap, threshold)

- Real components (embeddings, vector search, RAG pipeline)

- Real limitations (that you understand and can discuss)

- Real code (that you wrote and can explain)

When someone asks “have you worked with AI?”, you can say yes without lying. You can explain how RAG works. You can discuss trade-offs. You can point to deployed code.

That’s the whole point.

Now go build it, deploy it, and practice explaining it until you can do it in your sleep. The interview is coming.