Debugging and Learning: Your New Stack Overflow

I pasted a 47-line stack trace into Claude. It immediately identified the problem: a null pointer exception three layers deep in a library I'd never heard of, caused by a race condition in my initialization code. It explained why, showed me the fix, and predicted the exact circumstances that would trigger it. I had been debugging this for six hours. Six hours of Stack Overflow, GitHub issues, and increasingly desperate Google searches. The AI solved it in eleven seconds. I felt grateful and also like my entire skill set had been rendered obsolete. These two feelings now coexist permanently.

Table of Contents

- The Old Way vs The New Way

- Why AI is Surprisingly Good at This

- The Error Message Translator

- The Legacy Code Archaeologist

- When the AI Becomes a Rubber Duck

- The “What the Fuck is This?” Workflow

- Learning New Frameworks Without Reading Docs

- The Limits of AI Debugging

- Your New Debugging Loop

The Old Way vs The New Way

The old way of debugging:

2:47 AM. Production is down. Error logs are cryptic. You Google the error message. First result: Stack Overflow from 2014. The accepted answer says “just restart the server” (useless). Second answer has code but it’s for a completely different framework. Third answer is someone saying “I have the same problem!” with no solution.

You check the GitHub issues. The relevant issue was closed in 2019 with “works for me.” You read the documentation. It’s either outdated or doesn’t cover your specific case. You try random solutions from various blog posts. Nothing works.

3:42 AM. You finally find a Reddit comment from someone who had the exact issue. Their solution is hacky but it works. You implement it. Production is back up. You have no idea why it broke or why the fix worked. You document nothing because you’re too tired.

The new way:

2:47 AM. Production is down. You copy the error message and stack trace. You paste it into ChatGPT/Claude along with the relevant code.

AI: “This error occurs when X tries to Y but Z is null. Looking at your code, the issue is on line 47 where you’re accessing user.profile without checking if profile exists. The reason this just started happening is probably because you recently deployed code that allows users without profiles.”

2:51 AM. You add a null check. Deploy. Production is back up. You understand what broke and why.

The difference: You debugged in 4 minutes instead of an hour. You actually understand what went wrong. You can prevent it next time.
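The null check in that story could take several forms. Here is a minimal sketch, assuming a user object whose profile may be missing. The names getAvatarUrl, avatarUrl, and the default path are invented for illustration and do not come from any real codebase.

```javascript
// Hypothetical helper: read a field from user.profile without crashing
// when the profile is missing (undefined or null).
const DEFAULT_AVATAR = "/img/default-avatar.png"; // assumed placeholder path

function getAvatarUrl(user) {
  // Optional chaining stops at a missing profile and yields undefined;
  // the ?? operator then substitutes the default.
  return user.profile?.avatarUrl ?? DEFAULT_AVATAR;
}

console.log(getAvatarUrl({ id: 1, profile: { avatarUrl: "/img/ada.png" } }));
console.log(getAvatarUrl({ id: 2 })); // a user created without a profile
```

The guard stops the crash. Whether a user without a profile should exist at all is still a question for whoever shipped the change.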

This isn’t hypothetical. This is happening right now. The developers who figured this out are sleeping better than you are.

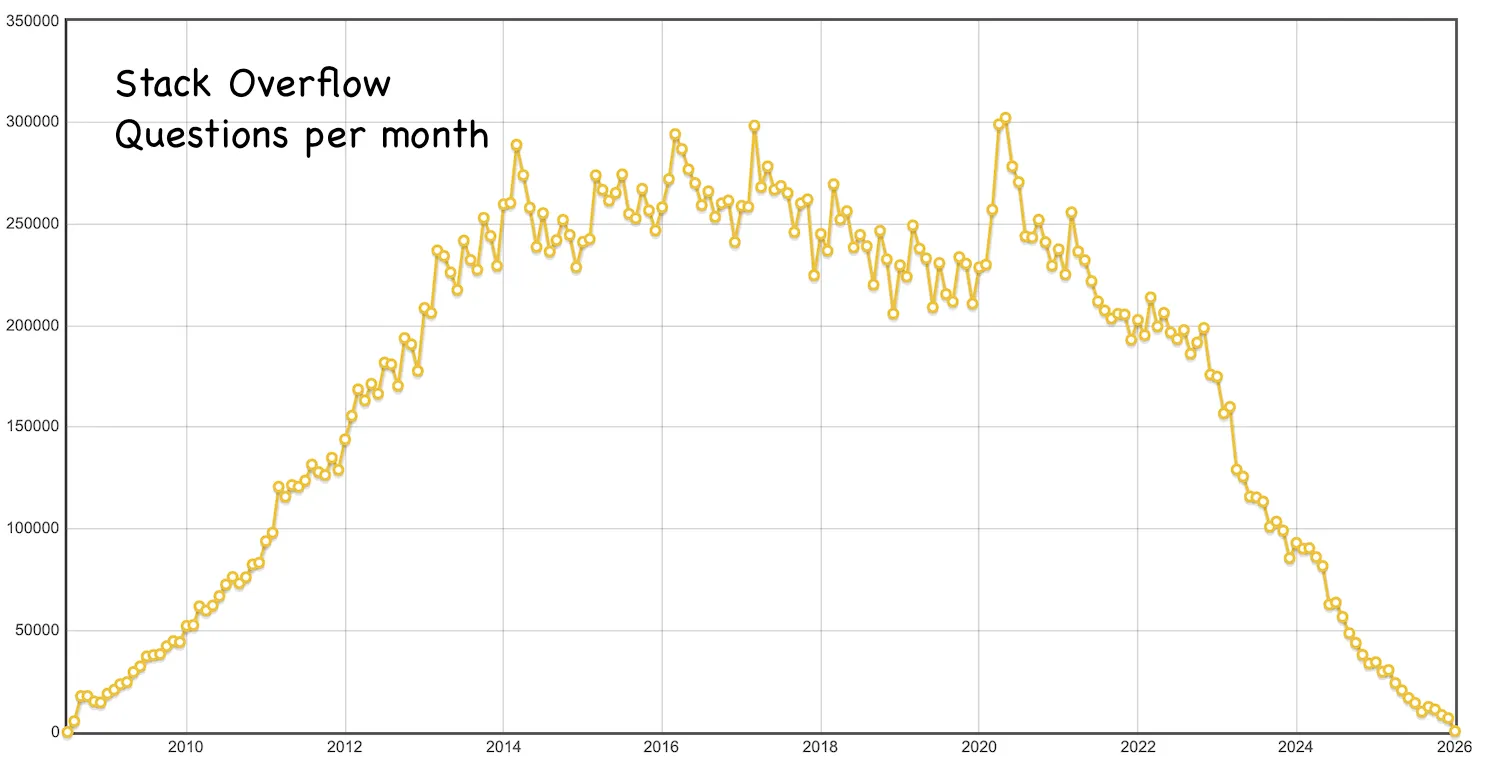

By the way, did you know your beloved Stack Overflow is dying a slow, painful death?

Why AI is Surprisingly Good at This

Here’s something weird: AI is often better at debugging than generating code.

Why? Because debugging is pattern matching. “I’ve seen this error 10,000 times, and 90% of the time it means X.” The AI doesn’t need to be creative or understand your business logic. It just needs to recognize patterns.

The AI has seen:

- Millions of error messages and their solutions

- Thousands of “why doesn’t this work?” Stack Overflow questions

- Countless bug fix commits with before/after code

- Every common mistake and how to fix it

When you paste an error, the AI is thinking: “I’ve seen ‘Cannot read property of undefined’ 50,000 times. In this context (Node.js, async function, database call), it’s almost always one of three things.”

The beautiful part: The AI doesn’t get tired. It doesn’t get frustrated. It doesn’t say “works on my machine” and give up. It looks at your code and your error and suggests fixes until something works.

The ugly part: Sometimes the AI confidently suggests a fix that’s wrong. You try it. Doesn’t work. Try the second suggestion. Still wrong. By the fourth suggestion, you realize the AI doesn’t actually know and is just throwing common solutions at the wall.

But even when it’s wrong, it’s often helpful. It gives you ideas. It makes you think about the problem differently. It’s like pair programming with someone who’s seen every bug ever but occasionally hallucinates.

The Error Message Translator

Error messages are written by computers for computers. They’re technically accurate but humanly incomprehensible.

Example:

    TypeError: Cannot read properties of undefined (reading 'map')
        at processUsers (/app/services/user.js:47:23)
        at async validateData (/app/controllers/data.js:89:15)

What you see: Word salad. Something about ‘map’ and ‘undefined’. Line 47 in user.js.

What you do: You paste this into the AI along with the code around line 47:

    function processUsers(users) {
      return users.map(user => ({
        id: user.id,
        name: user.name
      }));
    }

What the AI tells you:

“The error ‘Cannot read properties of undefined (reading ‘map’)’ means users is undefined when processUsers is called. The function expects an array but is receiving undefined.

Looking at the stack trace, this is being called from validateData. Check your validateData function - it’s probably passing undefined instead of an array. This often happens when:

- An async function doesn’t await before passing data

- A database query returns null/undefined when no results found

- A function returns early without a value

Check line 89 in data.js where processUsers is called.”

What just happened: The AI translated computer speak into human speak. It explained what the error means, why it’s happening, and where to look next.

You go to line 89. Sure enough:

    async function validateData() {
      const { users } = getUsers(); // Missing await! Destructuring a Promise gives undefined
      return processUsers(users);
    }

Fixed in 30 seconds. Without AI, you might have spent 10 minutes adding console.logs to figure out that getUsers() had handed back a pending Promise, so the destructured users was undefined.

The pattern: Error messages tell you WHAT broke. The AI tells you WHY it broke and HOW to fix it. That’s the value.
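One way to shorten the hunt next time is to make the function complain at its own boundary. This is a sketch, assuming it is acceptable for processUsers to throw on bad input. The helpers describeValue and messageFor are hypothetical additions, not part of the original code.

```javascript
// Hypothetical helper: a short label for whatever was passed in.
function describeValue(value) {
  if (value === null) return "null";
  if (value === undefined) return "undefined";
  return value.constructor?.name ?? typeof value;
}

function processUsers(users) {
  // Fail early with a message that names the actual problem,
  // instead of a TypeError raised from the .map() call.
  if (!Array.isArray(users)) {
    throw new TypeError(
      `processUsers expected an array, got ${describeValue(users)}`
    );
  }
  return users.map(user => ({ id: user.id, name: user.name }));
}

// Small harness for trying different inputs.
function messageFor(input) {
  try {
    processUsers(input);
    return "ok";
  } catch (err) {
    return err.message;
  }
}

console.log(messageFor(undefined));
console.log(messageFor(Promise.resolve([]))); // a forgotten await
console.log(messageFor([{ id: 1, name: "Ada" }]));
```

With a guard like this, the error names the wrong value at the call site, so neither you nor the AI has to infer it from a stack trace.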

The Legacy Code Archaeologist

Every codebase has that file. You know the one. The file that nobody wants to touch because nobody understands what it does. 500 lines of dense logic written by someone who left the company three years ago. No comments. Variable names like tmp and data2. Functions that do six things.

You need to modify it. You’re terrified.

The old approach:

- Read through it line by line (takes an hour)

- Try to understand the flow (give up after 30 minutes)

- Make your change (hope for the best)

- Break something unrelated (spend two hours debugging)

- Revert your change (cry)

The new approach:

Paste the entire function into the AI:

    function processData(data, opts) {
      const tmp = data.filter(d => d.v > opts.min);
      const grouped = tmp.reduce((acc, item) => {
        const key = opts.useAlt ? item.alt : item.prim;
        if (!acc[key]) acc[key] = [];
        acc[key].push(item);
        return acc;
      }, {});
      return Object.entries(grouped)
        .map(([k, v]) => ({
          id: k,
          items: v,
          total: v.reduce((sum, i) => sum + i.val, 0)
        }))
        .filter(g => g.total > opts.threshold);
    }

Ask: “What does this function do? Explain it step by step with better variable names.”

AI response:

“This function filters, groups, and aggregates data. Here’s what it does:

- Filter: Remove items below a minimum value threshold

- Group: Group remaining items by either their ‘alt’ or ‘prim’ property (depending on opts.useAlt flag)

- Aggregate: For each group, calculate the total sum of ‘val’ properties

- Filter again: Only return groups where the total exceeds opts.threshold

Here’s the same logic with clearer names:

    function filterAndGroupData(items, options) {
      // Step 1: Filter items above minimum value
      const itemsAboveMin = items.filter(item => item.v > options.min);

      // Step 2: Group by either alternate or primary key
      const groupedByKey = itemsAboveMin.reduce((groups, item) => {
        const groupKey = options.useAlt ? item.alt : item.prim;
        if (!groups[groupKey]) groups[groupKey] = [];
        groups[groupKey].push(item);
        return groups;
      }, {});

      // Step 3: Transform to array with totals
      const groupsWithTotals = Object.entries(groupedByKey)
        .map(([key, groupItems]) => ({
          id: key,
          items: groupItems,
          total: groupItems.reduce((sum, item) => sum + item.val, 0)
        }));

      // Step 4: Filter to groups above threshold
      return groupsWithTotals.filter(group => group.total > options.threshold);
    }

This appears to be aggregating items by category and only keeping categories with significant totals.”

What just happened: The AI reverse-engineered the intent from the implementation. It gave you a mental model. Now you understand what the function does and can safely modify it.

Pro tip: After the AI explains it, add comments to the original code. Future you (and your teammates) will thank you.
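An explanation is only as good as its match with what the code actually does. One way to check is to run the original function on a small hand-made input and see whether the result is what the explanation predicts. The sketch below does that. The sample rows and option values are invented for illustration.

```javascript
// The legacy function, copied unchanged so its behavior can be pinned down.
function processData(data, opts) {
  const tmp = data.filter(d => d.v > opts.min);
  const grouped = tmp.reduce((acc, item) => {
    const key = opts.useAlt ? item.alt : item.prim;
    if (!acc[key]) acc[key] = [];
    acc[key].push(item);
    return acc;
  }, {});
  return Object.entries(grouped)
    .map(([k, v]) => ({
      id: k,
      items: v,
      total: v.reduce((sum, i) => sum + i.val, 0)
    }))
    .filter(g => g.total > opts.threshold);
}

// Invented sample data: small enough to work out by hand.
const sample = [
  { v: 5, prim: "books", alt: "paper", val: 10 },
  { v: 1, prim: "books", alt: "paper", val: 99 }, // dropped: v is not above min
  { v: 7, prim: "books", alt: "digital", val: 15 },
  { v: 9, prim: "games", alt: "digital", val: 3 },
];

// Prediction from the explanation: books totals 10 + 15 = 25 and is kept;
// games totals 3 and is removed by the threshold.
const result = processData(sample, { min: 2, useAlt: false, threshold: 20 });
const summary = result.map(group => [group.id, group.total]);
console.log(summary); // [ [ 'books', 25 ] ]
```

If the output and the prediction disagree, either the explanation or your reading of it is wrong, and you have found that out before touching the file.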

When the AI Becomes a Rubber Duck

You know rubber duck debugging? Where you explain your problem out loud to an inanimate object and suddenly realize the solution?

The AI is a rubber duck that talks back.

Real scenario: You’re stuck on a bug for 45 minutes. You’ve tried everything. Nothing works. You’re about to give up and ask a coworker (but you don’t want to look stupid).

Instead, you explain the problem to the AI:

“I’m trying to update a user’s profile. The API call succeeds (I can see it in the network tab) and returns 200 OK. But when I refresh the page, the changes aren’t saved. I’ve checked the database - the record isn’t updated. I’ve added logging - the update function is definitely being called. What am I missing?”

The AI asks clarifying questions:

“A few things to check:

- Are you updating the right user? Verify the userId being sent matches the logged-in user

- Is the update wrapped in a transaction that’s not being committed?

- Are you accidentally updating a cache instead of the database?

- Is there middleware that’s reverting changes?

- Does your database have triggers that might be interfering?”

Reading question 2, you suddenly remember: you wrapped the update in a transaction last week for a different feature. You never committed it. That’s the bug.

What happened: You didn’t even need the AI to solve it. Explaining the problem forced you to think systematically. The AI’s questions nudged you in the right direction.

The advantage over a real rubber duck: The AI asks good questions. It suggests things you might have missed. It’s like a senior developer helping you debug, except it’s 3 AM and the senior developer is asleep.
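Why a 200 OK and an unchanged record can coexist is easier to see in miniature. The following is a toy in-memory model of a transaction, written only for this illustration. ToyDb and its methods are hypothetical and do not correspond to any real database client.

```javascript
// Toy model: writes inside a transaction are staged and only reach
// the committed rows when commit() is called.
class ToyDb {
  constructor() {
    this.rows = new Map(); // committed state, what a page refresh would read
  }

  begin() {
    const staged = new Map(); // pending writes, visible only inside the transaction
    return {
      update: (id, fields) => {
        staged.set(id, { ...this.rows.get(id), ...staged.get(id), ...fields });
      },
      commit: () => {
        for (const [id, row] of staged) this.rows.set(id, row);
        staged.clear();
      },
    };
  }
}

const db = new ToyDb();
db.rows.set(1, { name: "Ada" });

const tx = db.begin();
tx.update(1, { name: "Grace" }); // the handler could return 200 OK right here

const beforeCommit = db.rows.get(1).name; // still the old value
tx.commit();
const afterCommit = db.rows.get(1).name; // now the new value

console.log(beforeCommit, afterCommit);
```

The update call succeeds in both cases. Only the commit decides whether anyone else ever sees it, which is why the logs looked fine and the database did not.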

The “What the Fuck is This?” Workflow

You’re reviewing a PR. You see this:

    const result = data.reduce((acc, curr) =>
      acc.concat(curr.items.filter(item =>
        item.status === 'active' && item.priority > 5
      ).map(item => ({
        ...item,
        computed: item.value * curr.multiplier
      }))), []);

You stare at it. Your brain hurts. What does this even do? Is it correct? Should you approve this?

Old way:

- Spend 10 minutes mentally parsing it

- Maybe trace through it with example data

- Probably just approve it and hope for the best

New way: Copy to AI: “Explain what this code does and if there are any potential issues.”

AI response:

“This code flattens and transforms nested data. Specifically:

- For each item in data

- Filter that item’s items array for active items with priority > 5

- Transform those filtered items by adding a computed field (item.value * parent multiplier)

- Flatten all results into a single array

Potential issues:

- No null checks: If curr.items is undefined, this will crash

- No validation: If item.value or curr.multiplier aren’t numbers, you get NaN

- Performance: This is O(n*m) complexity. For large datasets, consider a different approach

- Readability: This should probably be multiple lines with intermediate variables

Suggested refactor:

    const result = data.flatMap(parent => {
      if (!parent.items) return [];
      return parent.items
        .filter(item => item.status === 'active' && item.priority > 5)
        .map(item => ({
          ...item,
          computed: (item.value || 0) * (parent.multiplier || 1)
        }));
    });

Now you understand the code AND have concrete feedback for the PR. You comment with the AI’s suggestions (without mentioning AI, because there’s still that stigma).

The time saved: Maybe 15 minutes. The confidence gained: knowing you actually understand what you’re reviewing instead of rubber-stamping it.
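Before pasting a suggested refactor into a review, it is worth checking that it behaves like the original on well-formed input. Here is a sketch of that check. The sample data is invented for illustration, and the two expressions are the ones from the PR and the suggested refactor.

```javascript
// Invented sample data: two parents, a mix of items that pass and fail the filter.
const data = [
  {
    multiplier: 2,
    items: [
      { status: "active", priority: 7, value: 10 },  // kept
      { status: "active", priority: 3, value: 99 },  // dropped: priority too low
      { status: "inactive", priority: 9, value: 5 }, // dropped: not active
    ],
  },
  { multiplier: 3, items: [{ status: "active", priority: 6, value: 4 }] },
];

// The expression from the PR.
const original = data.reduce((acc, curr) =>
  acc.concat(curr.items.filter(item =>
    item.status === "active" && item.priority > 5
  ).map(item => ({
    ...item,
    computed: item.value * curr.multiplier
  }))), []);

// The suggested refactor.
const refactored = data.flatMap(parent => {
  if (!parent.items) return [];
  return parent.items
    .filter(item => item.status === "active" && item.priority > 5)
    .map(item => ({
      ...item,
      computed: (item.value || 0) * (parent.multiplier || 1)
    }));
});

const sameOutput = JSON.stringify(original) === JSON.stringify(refactored);
console.log(sameOutput, refactored.map(item => item.computed)); // true [ 20, 12 ]
```

On malformed input the two versions differ on purpose. The refactor returns an empty array for a parent without items and substitutes 0 or 1 for missing numbers, where the original throws or produces NaN. Whether those defaults are right is a product decision, so it belongs in the review comment too.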

Learning New Frameworks Without Reading Docs

You need to use a library you’ve never touched before. The documentation is 100 pages. You have an hour.

Old way:

- Skim the docs (miss important details)

- Find a tutorial (it’s outdated)

- Copy example code (doesn’t quite fit your use case)

- Spend an hour debugging your Frankenstein implementation

New way:

“I need to use Prisma ORM to query a User table with relations to Posts. Show me how to:

- Set up the Prisma schema

- Query users with their posts

- Create a new user with posts in a transaction

- Handle errors properly

I’m using Node.js with TypeScript.”

AI gives you working examples for your specific use case. You still need to understand what it’s doing (don’t blindly copy), but you’ve skipped the “reading 100 pages to find the 3 pages that matter” phase.

The pattern: Use AI to get to working code fast. Use documentation to understand the details and edge cases. This is backwards from the old way (read docs first, then code), but it’s more efficient.

The AI might suggest outdated patterns or deprecated APIs. Always verify with the official docs that the suggested approach is current. The AI knows patterns, not release dates.

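When possible, give the AI links to the current documentation, or paste the relevant pages, so it works from up-to-date information instead of whatever it remembers.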

The Limits of AI Debugging

Let’s be honest about what AI can’t do:

1. Debug problems it can’t see

If your bug involves:

- Race conditions that only appear under load

- Environment-specific issues (works on dev, breaks on prod)

- Hardware problems

- Network issues

- Timing-sensitive code

The AI can suggest things to check, but it can’t reproduce the issue. You’re on your own.

2. Understand your domain logic

“Why is this discount calculation giving the wrong result?”

The AI can check your math and logic, but if the business rule is “legacy customers get 10% extra on Tuesdays unless it’s a holiday,” the AI doesn’t know that. You need to verify against the actual business requirements.

3. Debug distributed systems

“Why are these microservices not communicating?”

The AI can help with individual services, but distributed system issues require understanding the entire system. The AI sees one piece at a time. You need to understand the whole picture.

4. Know what’s in production

“Why is this breaking in production but working in dev?”

The AI doesn’t know what code is deployed, what environment variables are set, what data is in the production database. It can suggest common prod vs dev issues, but you need to investigate the actual state.

5. Debug when you can’t share the code

If you’re working on proprietary code with sensitive business logic, you can’t paste it into ChatGPT. The AI can help with general patterns, but not your specific implementation.

The takeaway: AI is great for common bugs, error messages, and learning. It’s less useful for complex, system-level, or context-dependent issues.
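For the prod-versus-dev case in item 4, the investigation is still yours, but parts of it can be mechanical. Here is a sketch that compares which configuration keys exist in each environment without printing any values. The function name and the sample keys are hypothetical. In a real setup the two objects would come from wherever your environments keep their configuration.

```javascript
// Hypothetical helper: report keys present in one environment but not the other.
// Only key names are compared, so no secret values end up in the output.
function diffConfigKeys(devConfig, prodConfig) {
  const devKeys = new Set(Object.keys(devConfig));
  const prodKeys = new Set(Object.keys(prodConfig));
  return {
    missingInProd: [...devKeys].filter(key => !prodKeys.has(key)).sort(),
    missingInDev: [...prodKeys].filter(key => !devKeys.has(key)).sort(),
  };
}

// Invented example configurations.
const devConfig = { DATABASE_URL: "dev-db", FEATURE_PROFILES: "on", LOG_LEVEL: "debug" };
const prodConfig = { DATABASE_URL: "prod-db", LOG_LEVEL: "info", ERROR_REPORTING_URL: "x" };

const configDiff = diffConfigKeys(devConfig, prodConfig);
console.log(configDiff);
```

A list like this is also something you can safely paste into the AI, which gives it a piece of the production picture it otherwise cannot see.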

Your New Debugging Loop

Here’s what debugging actually looks like now:

Step 1: Hit the bug. Something’s broken. Error message or unexpected behavior.

Step 2: Quick attempt. Try the obvious fix. If it works, great. If not, continue.

Step 3: Ask the AI. Copy error + relevant code to AI. “What’s wrong and how do I fix it?”

Step 4: Evaluate the response

- Does it make sense?

- Is it relevant to your specific case?

- Is it suggesting something you haven’t tried?

Step 5: Try the fix. Implement the AI’s suggestion. Test it.

Step 6a: If it works. Great! Make sure you understand WHY it worked. Don’t just move on.

Step 6b: If it doesn’t work. Tell the AI what happened. “I tried that, now I get this error.” Keep iterating.

Step 7: If AI stops being useful. After 3-4 iterations with no progress, the AI probably doesn’t know. Time to:

- Search for similar issues

- Ask a human

- Deep dive into the source code

- Set up a minimal reproduction

The key: AI is your first debugging partner, not your only one. It saves you time on common issues so you can spend more time on the hard problems.
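Steps 3 and 6b work better when the AI gets the same structured information every time. Here is a sketch of a small prompt builder. buildDebugPrompt and its parameters are hypothetical, and the closing question is just one possible phrasing.

```javascript
// Hypothetical helper: assemble error, code, and previous attempts into one prompt.
function buildDebugPrompt({ error, code, tried = [] }) {
  const lines = [
    "I hit this error:",
    error.stack ?? String(error), // the stack includes the message when available
    "",
    "Relevant code:",
    code.trim(),
  ];

  // Step 6b: tell the AI what has already failed so it does not repeat itself.
  if (tried.length > 0) {
    lines.push("", "Already tried:", ...tried.map(attempt => `- ${attempt}`));
  }

  lines.push(
    "",
    "What is the most likely cause, and how can I confirm it before changing anything?"
  );
  return lines.join("\n");
}

const prompt = buildDebugPrompt({
  error: new TypeError("Cannot read properties of undefined (reading 'map')"),
  code: "function processUsers(users) {\n  return users.map(u => u.id);\n}",
  tried: ["Restarted the server"],
});
console.log(prompt);
```

Asking how to confirm the cause, not just how to fix it, is what keeps Step 6a honest: you end up knowing why the fix worked.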

The Uncomfortable Part

Using AI for debugging makes you faster. It also makes you lazier.

Before AI: You’d spend 20 minutes deep in the stack trace, really understanding the call flow, learning the internals of the library you’re using.

After AI: You paste the error, get a fix, move on. You’re faster, but did you actually learn anything?

The developers who succeed long-term:

- Use AI to get unstuck fast

- Then take time to understand why they were stuck

- Learn the underlying concepts

- Build mental models

The developers who struggle:

- Use AI as a magic fix generator

- Don’t understand their own code

- Can’t debug when the AI doesn’t know

- Get stuck on novel problems the AI hasn’t seen

AI makes you faster at debugging. Whether it makes you better at debugging is up to you.

The Reality of AI-Assisted Debugging

You’re not going to stop using Google. Stack Overflow isn’t dead. The documentation still matters. But AI has become the first stop, not the last resort.

When you hit a bug, your instinct is now “paste it into Claude” instead of “Google it.” That’s the shift. It’s faster, more personalized, and often more helpful.

But you still need to verify. The AI is confidently wrong often enough that blind trust will bite you. It’s a tool that makes you faster if you use it smartly, and makes you ship bugs if you use it blindly.

What This Means Practically

Before AI debugging:

- Hit a bug

- Spend 15 minutes Googling

- Try 3-4 Stack Overflow solutions

- Eventually figure it out (or give up and ask a coworker)

- Time: 30-60 minutes

With AI debugging:

- Hit a bug

- Ask AI (2 minutes)

- Try the fix (5 minutes)

- Either works or iterate

- Time: 10-20 minutes

The speedup is real. You’re not 10x faster, but you’re maybe 2-3x faster. That adds up. That’s the difference between shipping on Friday and working the weekend.

The question isn’t “should I use AI for debugging?” You already are, or you will be soon. The question is “how do I use it well enough that I’m debugging faster without becoming dependent on it for things I should know?”

The answer: use it, but understand it. Speed up the grunt work. Slow down for the learning.

Just remember:

You can lose your job or you’ll have problems getting a job if you’re not better than AI! It’s that simple. — Maximilian